I recently published a photo post with my impressions of the yellow iPhone 14, shot entirely on the iPhone 13 Pro.

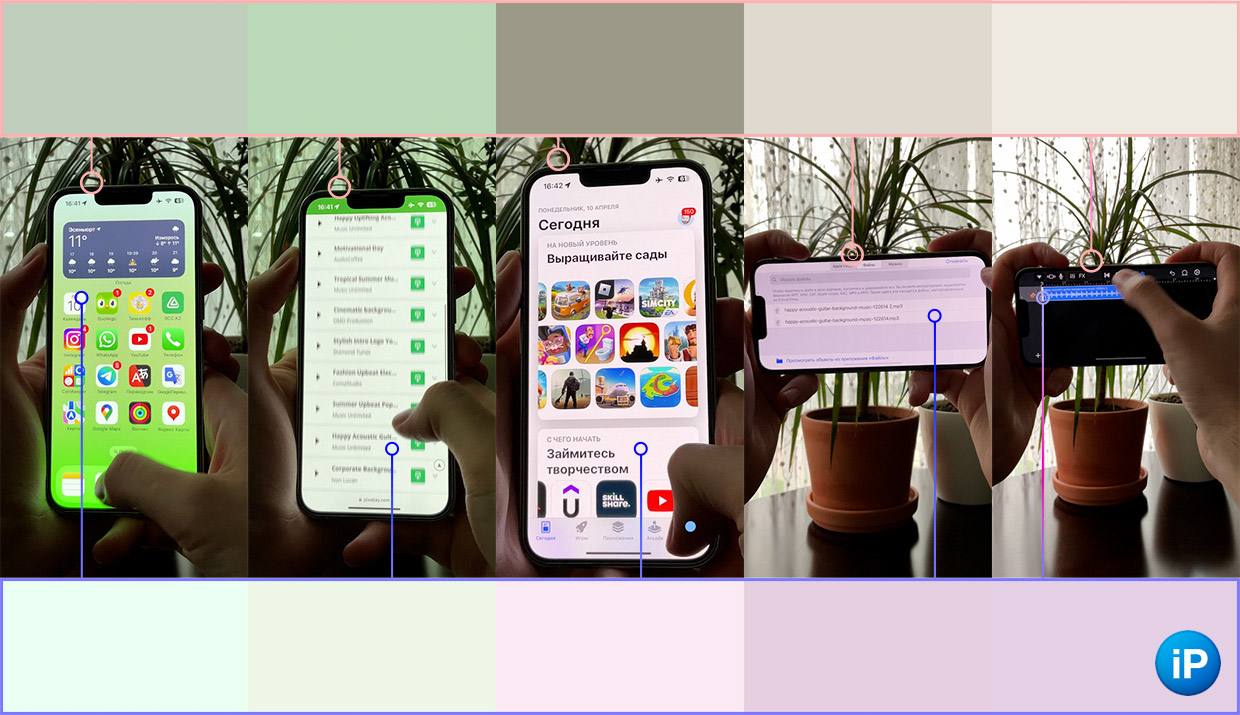

It's scary to admit, but I spent almost an entire day in Photoshop processing what the smartphone produced. And not because I'm clumsy. Bringing all the frames to a single color palette turned out to be extremely difficult.

In each new frame, the iPhone rendered the wooden surface, hands, and objects in different colors, seemingly at random. And that's only half the problem.

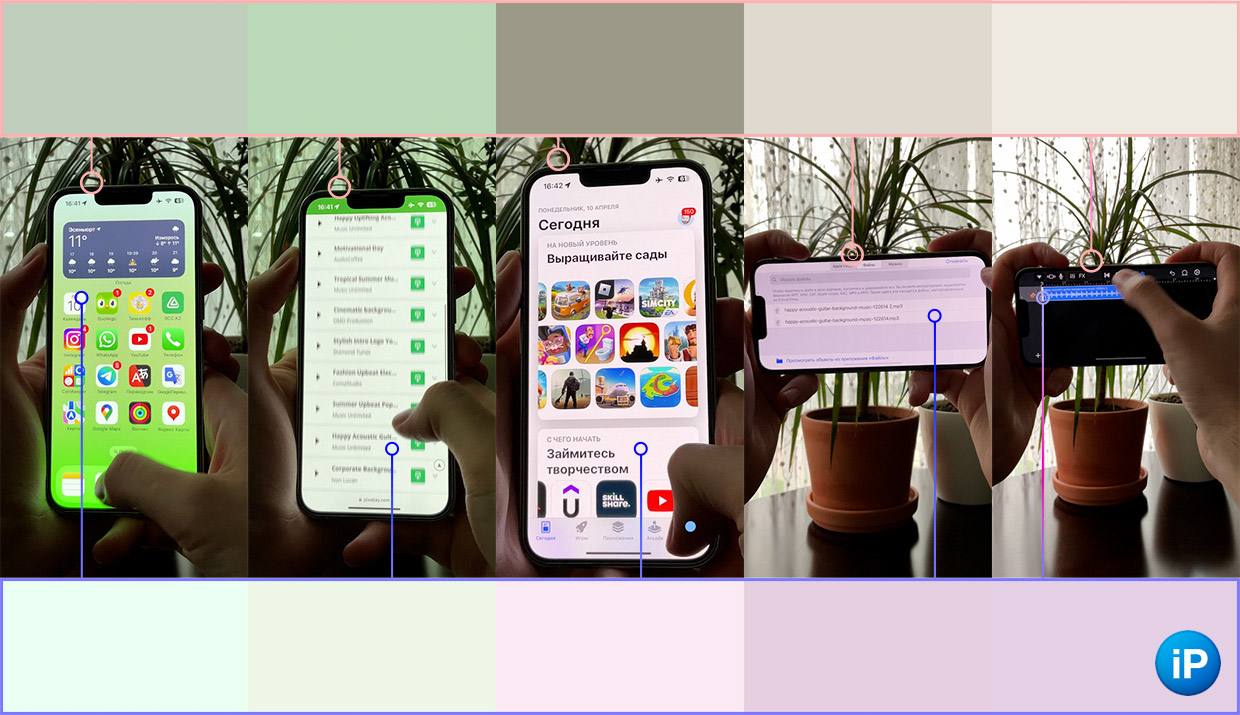

Below are a few examples of where the iPhone falls badly short, and where Apple needs only a couple of new settings to reach a new level of convenience and natural-looking results.

There is still no manual color lock in the Camera app

Third-party apps don't offer the same convenience

Since 2019, the top iPhone models have been released under the Pro brand. And every presentation tells the same story: professional features are added that maybe three percent of buyers actually need, but that make everyone want to buy.

Meanwhile, the settings in the Camera app have remained sparse for the fifth year in a row. Apple, of course, tries to do things its own way rather than mindlessly copying professional cameras. But because there is still no white balance control, I sometimes feel like giving up on mobile shooting altogether.

White balance gradation. The lower the value, the more yellow

White balance is responsible for making white look white and for setting the overall color temperature of the image, so that a photo or video does not drift toward yellow or blue.

It is measured in kelvins (K).

On conventional cameras, once white is rendered correctly, all the other colors also look natural.

In theory, the iPhone should work the same way, but two problems appear at once: you cannot set the value manually, and even at the same value the colors come out different.

The lack of a manual setting is most noticeable when you're trying to capture a purple sunset and the iPhone turns it blue.

Two unprocessed photos; neither shows the real shade of the sky

In the photos above, the truth was somewhere in between: redder, but definitely not electric blue.

Sometimes, when it is important for me to preserve the real shade of the sky, I open the editor and process the photo on the spot, comparing it with what I see in real life.

It would be much easier to open the Camera app and use a slider to set the value you want.

Back to the yellow iPhone 14. Below are two shots: the final result after processing at the top and the originals at the bottom.

Everything I decided to include in the post is kept in a more or less consistent color scheme.

In auto mode, the background, devices, and cases kept getting different color casts. Unfortunately, adjusting the white balance alone was not enough to fix this.

For example, once the yellow of the smartphone matched across the photos, the wooden surface for some reason had a completely different shade. I had to even it out with masks, selective color correction, and so on.

One static video, and we get 50 shades of white. Shot for a webinar

The problem gets even worse in video. When a hand enters the frame, the background is one color before and another after. Or, when the dominant color in the frame changes, the algorithms instantly recolor all the other elements.
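To illustrate how a change in the dominant color can repaint everything else, here is a minimal Python sketch of the textbook gray-world white balance heuristic. It only shows how automatic white balance in general can depend on scene content; it is not Apple's algorithm, and the function names and pixel values are hypothetical. The heuristic assumes the scene averages to neutral gray and scales each channel until the channel means match, so a large yellow object pulls the correction toward blue.

```python
# Gray-world white balance: a simple textbook heuristic, used here only to
# illustrate content-dependent auto white balance. NOT Apple's pipeline.
# Assumption: the scene averages to neutral gray, so each channel is scaled
# until the three channel means are equal.

def gray_world_gains(pixels):
    """Return per-channel (R, G, B) gains for a list of (r, g, b) pixels in [0, 1]."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3  # common target level for all channels
    return [gray / m if m > 0 else 1.0 for m in means]


def apply_gains(pixel, gains):
    """Scale one pixel by the gains and clip to the valid range."""
    return tuple(min(1.0, v * g) for v, g in zip(pixel, gains))


# Hypothetical pixel values: a neutral gray wall and a yellow phone.
background = (0.5, 0.5, 0.5)
yellow_phone = (0.9, 0.8, 0.1)

frame_a = [background] * 9 + [yellow_phone] * 1  # phone occupies 10% of the frame
frame_b = [background] * 5 + [yellow_phone] * 5  # phone occupies 50% of the frame

for name, frame in [("A", frame_a), ("B", frame_b)]:
    gains = gray_world_gains(frame)
    wall = apply_gains(background, gains)
    print(name, "wall after correction:", [round(v, 3) for v in wall])
```

In this sketch the same neutral wall comes out bluish in frame A and much bluer in frame B, where the yellow phone fills half the frame. A manually set or locked white balance would instead apply the same gains to every frame regardless of what is in it.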

The only workaround is to lock onto the subject, but along with color this also locks focus and exposure, which immediately limits shooting flexibility.

Why hasn't it been added yet? Over the past 10 years, Apple has automated color and tone processing in photos and videos too heavily. Half of all the work is now done not by the camera hardware but by the Smart HDR algorithm, which merges dozens of images into one frame.

Perhaps reprogramming it to respect a manually set white balance is expensive and difficult.

The problems don’t end there.

Everyone is already tired of artificial sharpening

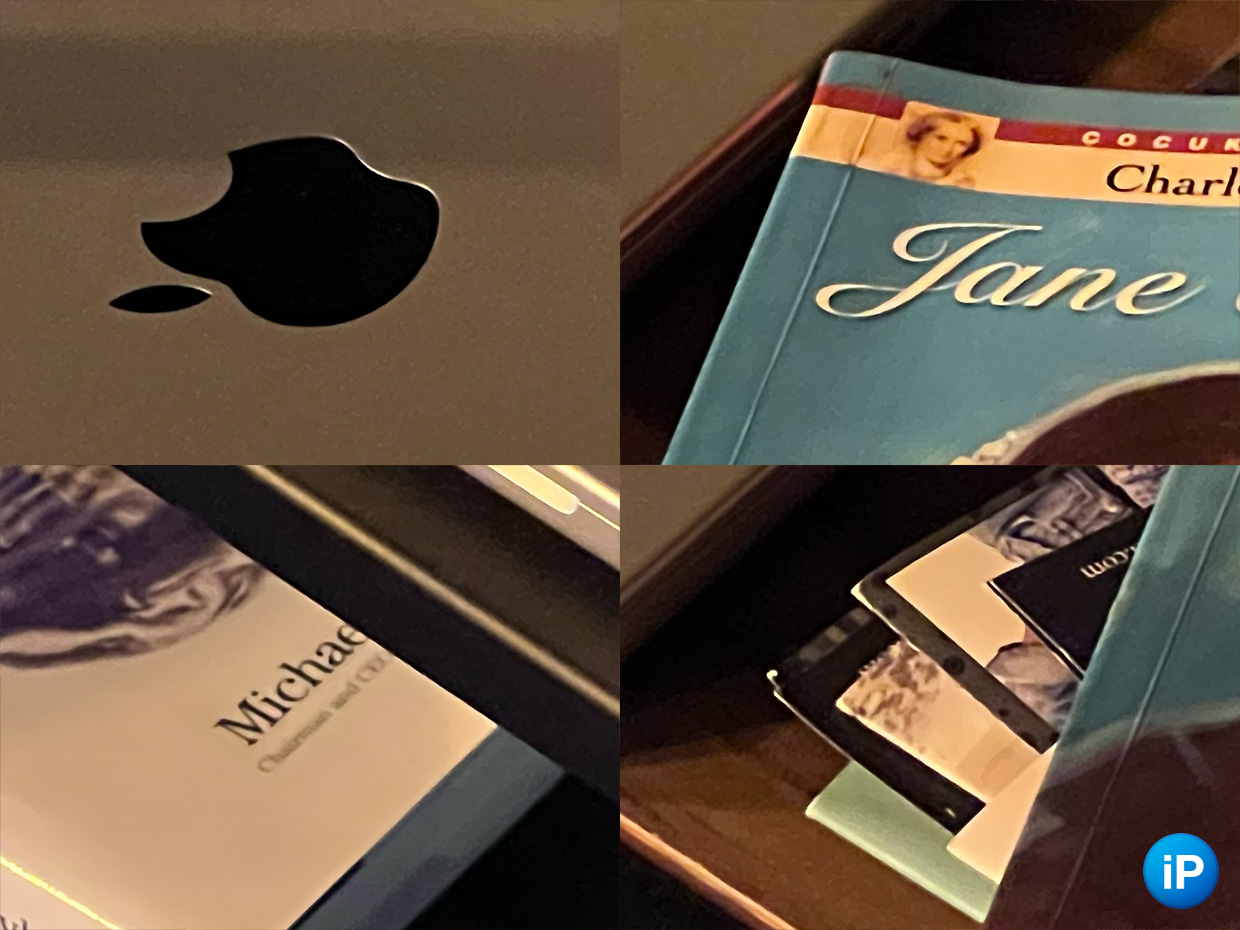

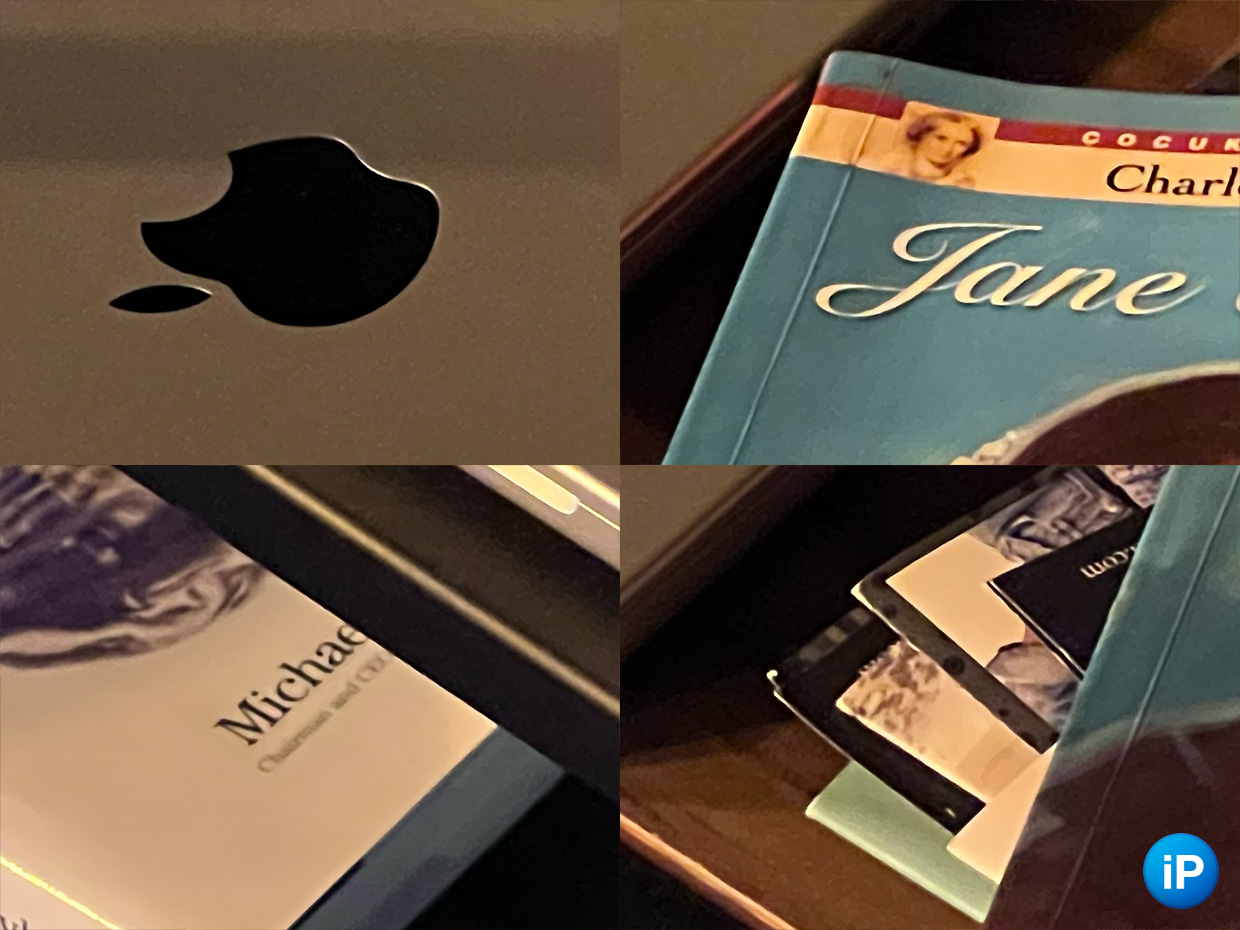

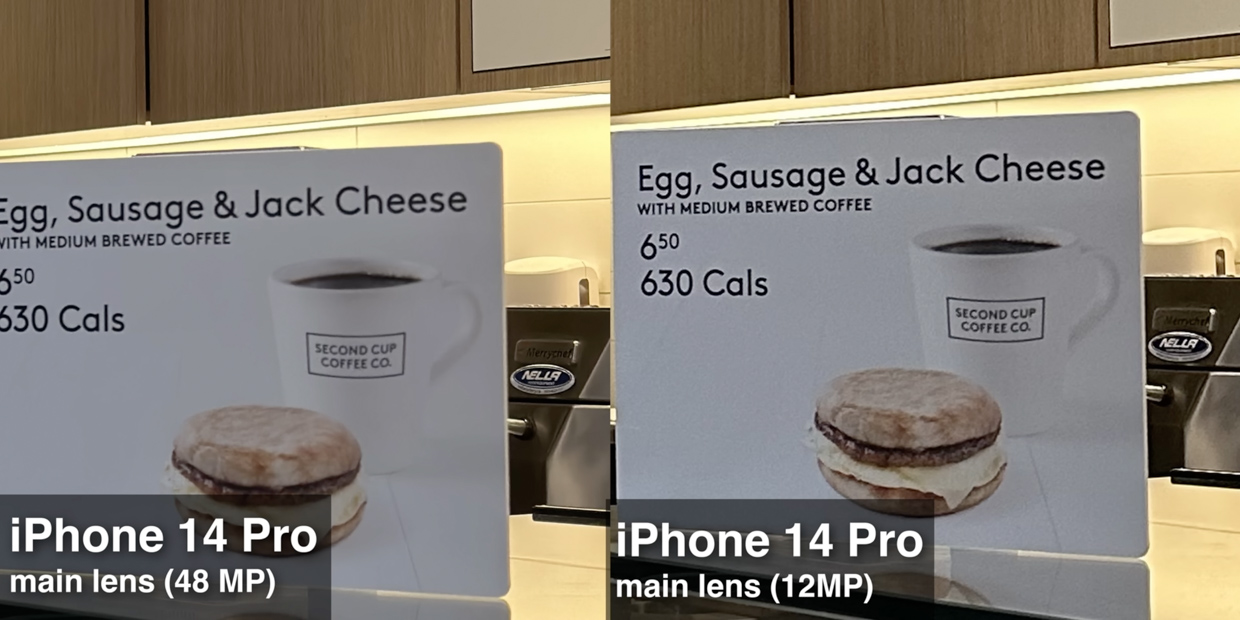

Above are examples of how, with artificial sharpening, the algorithms turn a photo into something resembling a painting.

When I tap "3x" (the telephoto) and there isn't enough light, one of two things happens: instead of zooming optically, the iPhone decides to give me a crop from the main camera, or the telephoto module turns on aggressive noise reduction and Deep Fusion, producing a very blurry picture with thick white stripes in high-contrast areas.

The iPhone boosts contrast by adding white stripes

I can't force the optical 3x zoom, I can't turn off noise reduction, and I can't stop the algorithm from boosting contrast.

None of these crude methods can be turned off, no matter how "professional" the smartphone is called.

I see no need to spoil an otherwise high-quality photo with the illusion of sharpness that creeps in when the final frame is assembled. Text, for example, stays legible even without white halos, while the halos themselves are terrible from an aesthetic point of view.
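White halos along high-contrast edges are the classic overshoot artifact of unsharp-mask-style sharpening. The minimal Python sketch below applies a generic unsharp mask to a one-dimensional edge (dark text on a light background). The blur radius, the amount of 1.5, and the pixel values are hypothetical, and this is not a claim about how Apple's pipeline works internally. The sharpened value is the original plus a multiple of its difference from a blurred copy, so samples next to the edge get pushed past both original levels and, after clipping, turn pure white on the light side and pure black on the dark side.

```python
# Generic unsharp mask on a 1D signal, to illustrate edge halos.
# Parameters and values are hypothetical; this is not Apple's algorithm.

def box_blur(signal, radius=1):
    """Average each sample with its neighbours within `radius` (window shrinks at the ends)."""
    n = len(signal)
    out = []
    for i in range(n):
        window = signal[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def unsharp_mask(signal, amount=1.0, radius=1):
    """sharpened = original + amount * (original - blurred), clipped to [0, 1]."""
    blurred = box_blur(signal, radius)
    return [min(1.0, max(0.0, s + amount * (s - b))) for s, b in zip(signal, blurred)]


# Light background (0.8) meeting dark text (0.2): one sharp edge.
edge = [0.8] * 5 + [0.2] * 5
sharpened = unsharp_mask(edge, amount=1.5)
print([round(v, 2) for v in sharpened])
```

Here the sample just before the edge jumps from 0.8 to the clipped maximum of 1.0 and the one just after drops to 0.0, while the flat areas stay unchanged. With `amount=0` the edge is returned exactly as captured, which is essentially the "off" switch the article asks for.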

Left: the photo before noise reduction; right: the final shot

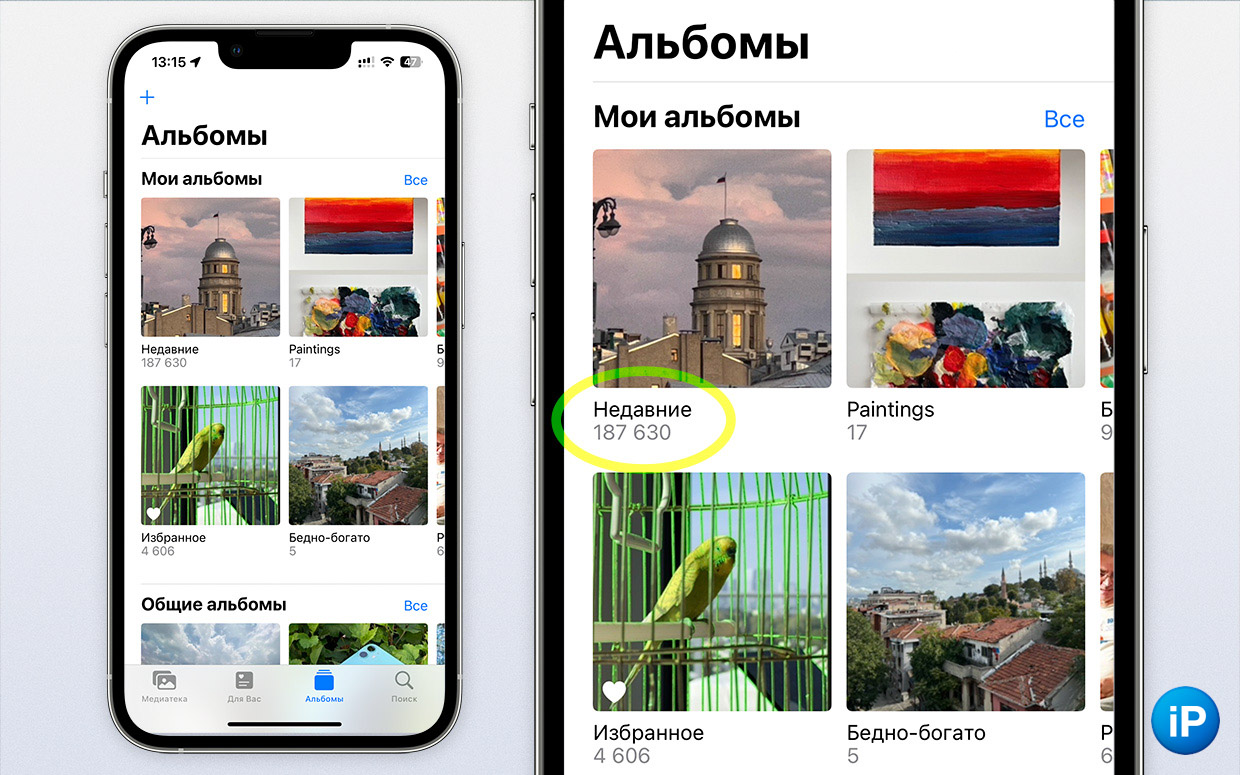

Look at the example above. Sometimes, after a shot is taken, the iPhone doesn't manage to process the photo right away, so when you open the gallery you can see grain in the most recent photos.

A matter of taste, of course, but I like this version of the frame much more than the watercolor that remains a second later, once the algorithms have processed it.

Clearly, not every frame should stay like this. But having the option wouldn't hurt. Sometimes pleasant noise is better than smeared mush.

Hope is near

At the beginning of 2023, I saw the opinion on various channels and on this site that the iPhone 14 doesn't take good pictures, or at least no better than cheaper competitors in the same class.

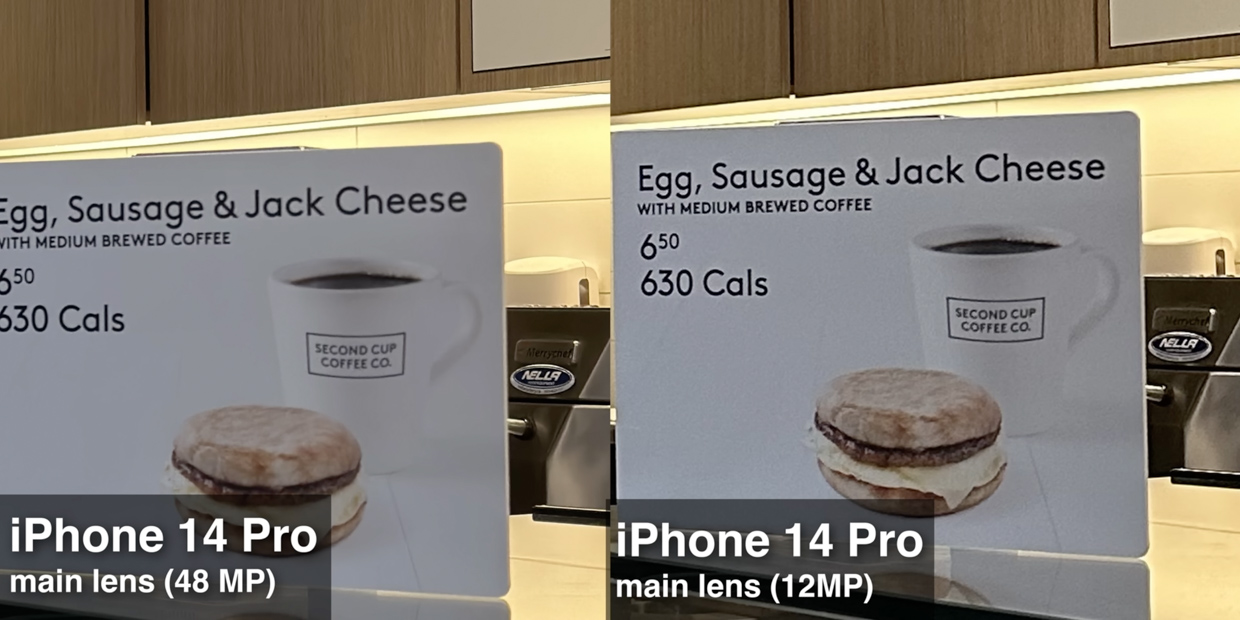

Many wrote that when shooting in 48 MP ProRAW mode on the iPhone 14 Pro, the excessive contrast disappears. Text and other lines with sharp color transitions look natural, and no white halos appear.

The white balance question is more complicated.

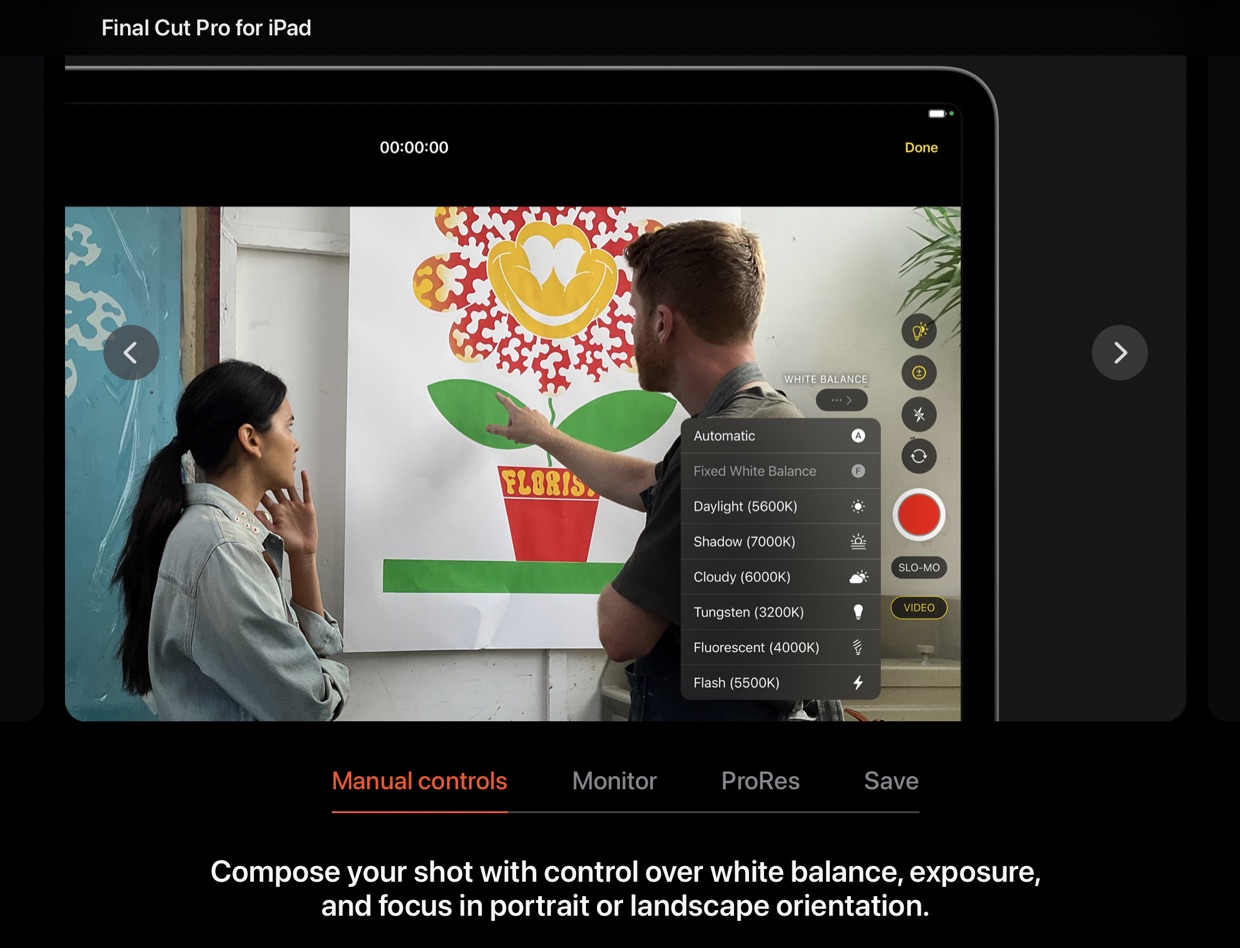

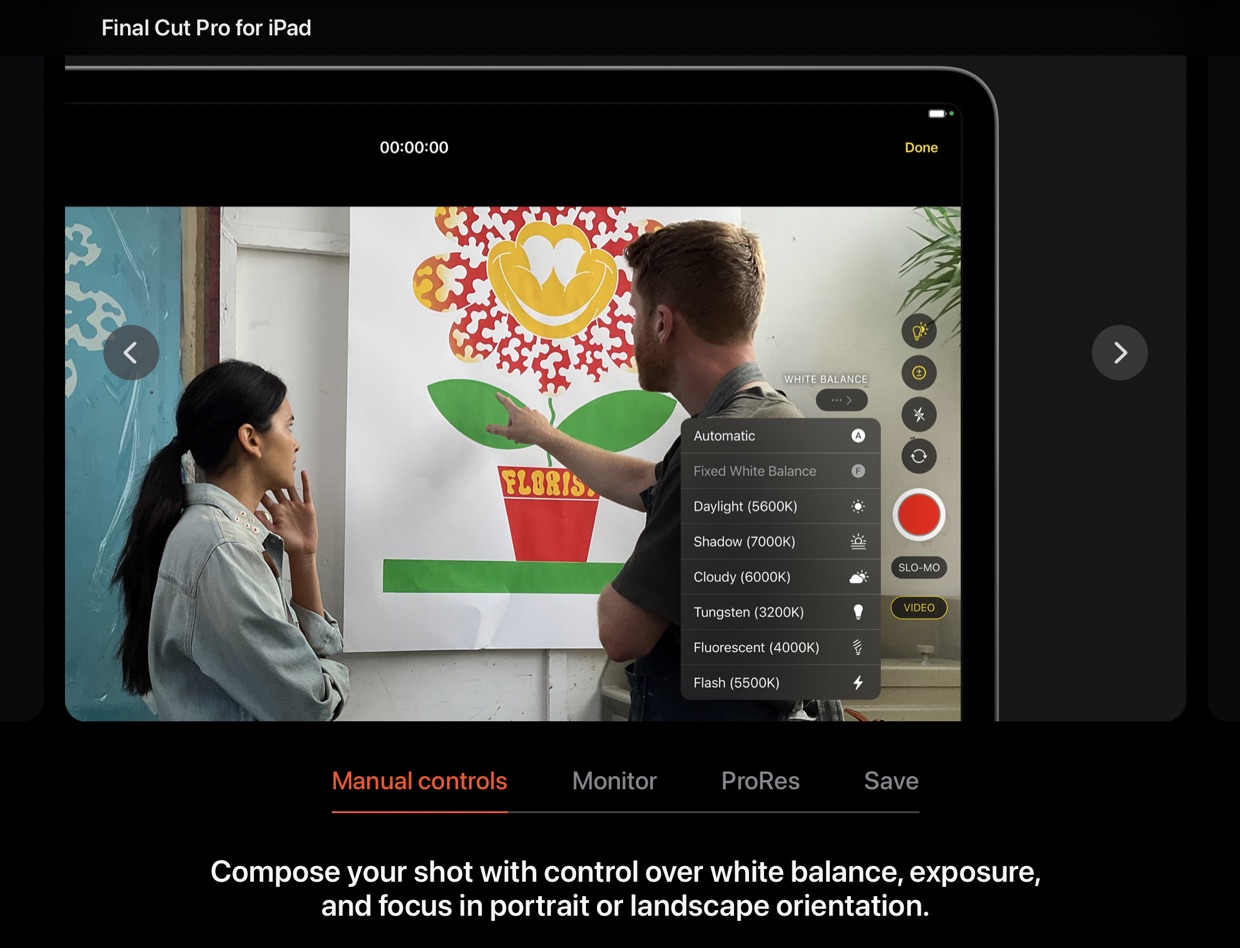

The Final Cut Pro for iPad page mentions the ability to use the tablet's built-in camera "like a pro".

In addition to all the usual settings, for the first time Apple will officially let you adjust the white balance.

And although, judging by this preview, there will be no manual measurement, this is already a step forward. In all 15 years of developing its cameras, Apple has never before let users change the white balance of a video before shooting.

For now, the iPhone produces a lot of defective shots

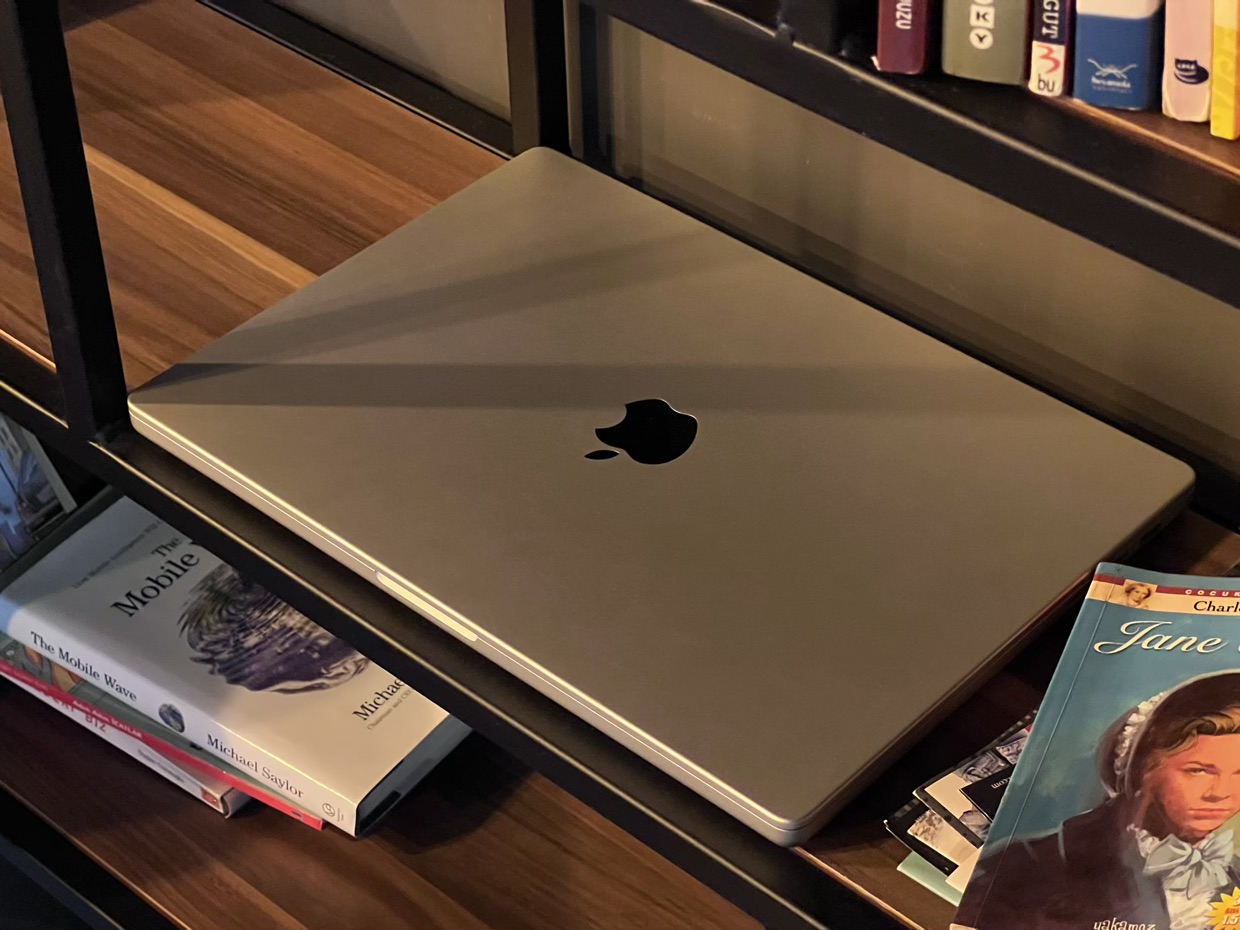

Over the past three years, I have used three cameras of different classes for work, one of which was an iPhone.

About half of all the photos in my articles were taken on it, and, in my opinion, I squeezed the most out of its mobile camera, as I described in my review of the iPhone 13 Pro a year later.

But behind the shots that satisfied me are tens of thousands (!!!) of photos that I could never bring up to the quality I wanted for publishing here.

The neural network's annoying white halos around the eyes

Of course, a bad dancer always blames his shoes: getting the most out of any kind of shooting takes some minor shadow correction, texture reduction, supersampling, added detail, and so on.

But basic things like unpredictable white balance and unrequested AI sharpening add an enormous amount of work in video and noticeably spoil evening photos. We urgently need controls for them.

Apple has already taken small steps toward solving these problems by turning off oversharpening in 48 MP ProRAW mode and making it possible to adjust white balance when shooting video on the iPad in Final Cut Pro.

Bring a little of all this to regular shooting on the iPhone, and the issue is solved.

We're hoping to see it in the iPhone 15 Pro. Otherwise, it will be easier to buy a good camera for roughly the same money and stick with the iPhone 13 Pro, which in every other respect is almost as good as the upcoming models.


Source: Iphones RU

I am a professional journalist and content creator with extensive experience writing for news websites. I currently work as an author at Gadget Onus, where I specialize in covering hot news topics. My written pieces have been published on some of the biggest media outlets around the world, including The Guardian and BBC News.