A group of researchers at the University of Chicago is developing a tool to “poison” artificial intelligence (AI) platforms. The idea is to manipulate images so that they harm the training of any generative AI model that uses them.

Called Nightshade, the tool was developed to “break” generative AIs trained on copyrighted images. In some cases, those images were included in a platform’s training data without permission.

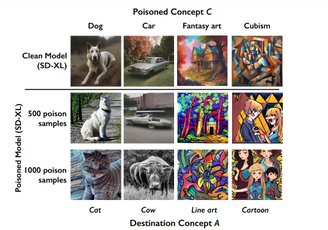

As a result, affected platforms may no longer render realistic objects, animals, or certain art styles. In the worst case, they may even lose the ability to recognize items; for example, they will no longer know what a dog or a chair is.

Companies like Meta, OpenAI (owner of DALL-E), and Stability AI (maker of Stable Diffusion) are the main targets of this creation.

These companies’ AI image-generation platforms can “copy” graphic elements and visual identities from artists who never agreed to have their work used on these services.

How does AI image “poisoning” work?

Nightshade makes a pixel-level modification to the image that does not change its visible appearance. It is meant to be applied when someone posts their work online without authorizing its use in generative AIs.

When a generative model is trained on unauthorized work, the modified data “poisons” it: the altered images confuse the system and begin to damage the entire learning process.

The model’s developers must then find and remove the tainted files manually so that they do not contaminate the entire system.

In tests, 50 “poisoned” dog images were enough for a controlled version of Stable Diffusion to lose its ability to faithfully recreate the animal. With 300 such images, the AI even begins to confuse the animals and produce cats instead.

No final version yet

Nightshade is still in development. A scientific paper with preliminary results from the project has been posted to the arXiv repository but has not yet been peer-reviewed.

The study also raises some concerns. The same process could be used for malicious purposes, or even as a prank, for example to degrade AIs that are trained legitimately. Another point discussed is that developers could eventually fix the vulnerability that “confuses” learning.

The team of scientists, led by Professor Ben Zhao, aims to integrate the tool into Glaze, another of the group’s projects. Like other similar tools, Glaze “hides” the artistic style of images so that AIs cannot copy it.

Source: Tec Mundo

I am a passionate and hardworking journalist with an eye for detail. I specialize in the field of news reporting, and have been writing for Gadget Onus, a renowned online news site, since 2019. As the author of their Hot News section, I’m proud to be at the forefront of today’s headlines and current affairs.