This spring I finally got my iPhone 13 Pro Max fixed. After Face ID broke down, I realized it was a sign: you never know what else will break. So I decided not to wait and upgraded to the iPhone 15 Pro Max.

I’ll be honest: I didn’t want to do this, but I compared the quality of photos from the two phones and changed my mind. After all, 48 megapixels versus 12 is an unequal battle.

For the first couple of weeks I was happy with everything. But now I’m increasingly noticing a problem that I never encountered with the iPhone 13 Pro Max. Some owners around the world have already written about it, but their complaints went unnoticed.

I think you should also know about the main drawback of the iPhone 15 Pro Max camera module. No other model has it. I hope it won’t be in the next generation of iPhones.

What’s wrong with photos from the iPhone 15 Pro Max?

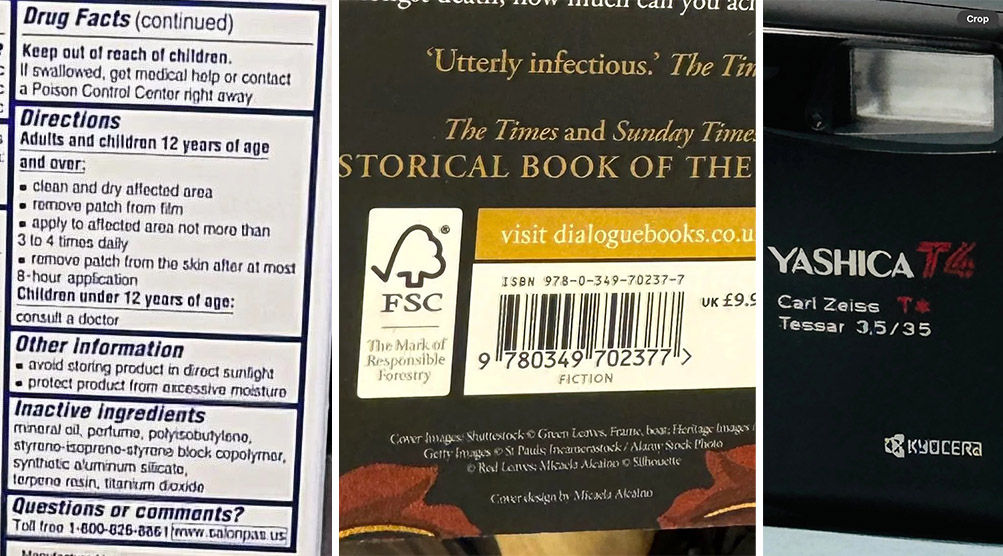

What kind of nonsense is written here?

Why did normal text turn into a mess of unreadable letters that do not exist in reality?

This is a constant problem when using the iPhone 15 Pro Max’s telephoto camera indoors, or anywhere else where the light is even slightly less than bright. To give you an idea, the subject in this frame is lit by two 1000 W lamps at once. There is plenty of light.

There are many such examples on the Internet. If you have an iPhone 15 Pro Max, you likely have plenty of your own. You just haven’t zoomed in on text and small details to notice them yet.

No iPhone in the previous Pro lines behaves like this. Yes, the iPhone 14 Pro Max sometimes “added” details where there were none, but it never went as far as destroying text until it was unreadable.

What is the problem with zoom on the iPhone 15 Pro Max?

In short, it has a very large blind spot that is not compensated for correctly by the AI algorithms. The combination of reduced telephoto lens aperture compared to previous generations, image post-processing algorithms and increased focal length creates an extremely unpleasant visual effect in photographs.

Now for some background, so that everyone can follow.

The telephoto camera on the iPhone 15 Pro Max is different from the one on the iPhone 15 Pro. Only the flagship has a periscope lens with a focal length of 120 mm, which lets you take pictures at native 1:1 quality in 5x zoom mode.

However, the aperture of this lens is significantly smaller than that of the main camera: f/2.8 versus f/1.8. Because of this, under the same lighting, the image from the telephoto will by default be darker than the image from the main camera. All of this is obvious to any photographer and has been standard for every iPhone telephoto camera of the last seven years. But I’ll mention it just in case.
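To put the f/2.8 versus f/1.8 gap into numbers, here is a rough sketch. It relies only on the standard rule that the light reaching each unit of sensor area scales with the inverse square of the f-number. It ignores sensor size, lens transmission and everything Apple does in software, so treat it as a back-of-the-envelope estimate. The function names are mine, made up for illustration.

```python
import math

def light_ratio(f_fast: float, f_slow: float) -> float:
    """How many times more light per unit of sensor area the faster lens passes.

    Uses the usual approximation: illuminance is proportional to 1 / N^2,
    where N is the f-number.
    """
    return (f_slow / f_fast) ** 2

def stops_difference(f_fast: float, f_slow: float) -> float:
    """The same gap expressed in photographic stops (each stop = 2x light)."""
    return math.log2(light_ratio(f_fast, f_slow))

# f-numbers quoted in the article: main camera f/1.8, telephoto f/2.8
ratio = light_ratio(1.8, 2.8)
stops = stops_difference(1.8, 2.8)
print(f"Main camera gathers about {ratio:.1f}x more light ({stops:.1f} stops)")
```

By this estimate the telephoto gets well under half the light of the main camera in the same scene, which is why it has to raise ISO or lengthen the exposure sooner.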

Despite a distance of one and a half meters, the iPhone 15 Pro Max regularly switches between the main camera and the telephoto in 5x mode. The first option always looks terrible.

Moving on. The iPhone decides for itself which lens to use. The smartphone makes the decision based on the lighting conditions of the frame in the viewfinder. If there is not enough light or the subject is too close, a frame from the main camera is automatically used instead of the telephoto.
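The rule described above can be sketched in a few lines. This is only an illustration of the logic as I understand it from the outside: Apple does not publish the real thresholds or the real decision code, so the numbers and names below (`MIN_SCENE_LUX`, `MIN_SUBJECT_DISTANCE_M`, `pick_source_at_5x`) are invented.

```python
from dataclasses import dataclass

# Hypothetical thresholds, for illustration only.
# The real values are not public and may depend on many more factors.
MIN_SCENE_LUX = 100.0          # below this, the scene counts as "too dark"
MIN_SUBJECT_DISTANCE_M = 1.0   # closer than this, the subject is "too close"

@dataclass
class Scene:
    lux: float                  # estimated scene brightness
    subject_distance_m: float   # estimated distance to the subject, in meters

def pick_source_at_5x(scene: Scene) -> str:
    """Return which camera supplies the frame when the user selects 5x."""
    too_dark = scene.lux < MIN_SCENE_LUX
    too_close = scene.subject_distance_m < MIN_SUBJECT_DISTANCE_M
    if too_dark or too_close:
        # Fall back to a crop from the main camera
        return "main-crop"
    return "telephoto"

print(pick_source_at_5x(Scene(lux=500.0, subject_distance_m=3.0)))
print(pick_source_at_5x(Scene(lux=40.0, subject_distance_m=3.0)))
```

The point of the sketch is that the user never sees or sets either threshold: the 5x button stays the same while the source of the picture changes underneath it.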

And now we come to the problem. If the iPhone is “forced” to use a crop from the main camera instead of the telephoto lens, it enlarges (“upscales”) the image using AI algorithms. The user has no control over these algorithms. However Apple built them, that is how they work. And they are different in each generation of iPhone.
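A quick calculation shows how little real data such a crop contains. The sketch below assumes figures from Apple's published specs rather than from this article: a 48 MP main camera with a 24 mm equivalent focal length, and a 12 MP output file at 5x. It also ignores pixel binning and multi-frame processing, so the real picture is more complicated. The function name is made up for illustration.

```python
def crop_upscale(main_mp: float, main_focal_mm: float,
                 target_focal_mm: float, output_mp: float) -> tuple[float, float]:
    """Estimate real pixels in a main-camera crop and the upscale factor needed.

    Returns (megapixels inside the crop, how many output pixels are
    produced from each real pixel).
    """
    linear_crop = target_focal_mm / main_focal_mm   # e.g. 120 / 24 = 5x
    real_mp = main_mp / linear_crop ** 2            # area shrinks with the square
    upscale = output_mp / real_mp
    return real_mp, upscale

# Assumed specs: 48 MP main camera at 24 mm, 12 MP output at 120 mm (5x)
real_mp, upscale = crop_upscale(48, 24, 120, 12)
print(f"Real data in the crop: about {real_mp:.1f} MP")
print(f"Each real pixel becomes about {upscale:.1f} output pixels")
```

Under these assumptions the algorithms have to invent most of the pixels in the final picture, and small text is exactly where invented detail is easiest to spot.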

On the iPhone 15 Pro Max, these algorithms work unexpectedly poorly and spoil the image, sometimes so badly that it cannot be used for work. On the phone screen, without zooming in, many of these flaws are simply invisible. But as soon as you open the photo on a tablet, a laptop, or especially a monitor, that’s it: the photo is an embarrassment and goes in the trash.

Is there any hope that this will be fixed?

Two quite ordinary photos, right?

But if you zoom in at least a little…

The pictures were taken from the same distance under the same conditions. Why one was shot with the main camera and the other with the telephoto is a question only Apple can answer.

Only Apple can and should fix this. The user, I repeat, has no control over how the post-processing algorithms work. There is also no control over which lens is used when shooting. Even ProRAW mode does not actually turn off this particular “enhancer” that smears the text.

Cupertino could let users choose for themselves which lens a photo is taken with. That would make it possible to compose a shot after first forbidding the smartphone from “jumping” to a crop from the main camera. They could also improve the AI algorithm so that it stops mangling fonts so badly in photos taken in “pseudo-telephoto” mode. And so on.

But more than six months have passed since the iPhone 15 Pro Max went on sale, and nothing has changed. There were complaints in the fall of 2023 and, periodically, in the spring of 2024. In the fall, Apple will lose interest in the device entirely as a new generation of iPhones comes out. It looks like Pro Max owners will be stuck with the aggressive AI forever.

So today I went to the App Store to look for a third-party camera app. Maybe one of them will let me stop getting a “smear” instead of text on boxes in product photos. But is that really a solution?

Source: Iphones RU
