During Apple’s video presentation of the new MacBook Pro with M4 chips, something unexpected caught my eye. For a moment, a very familiar program appeared on the new Mac’s screen; in effect, Apple gave it free advertising.

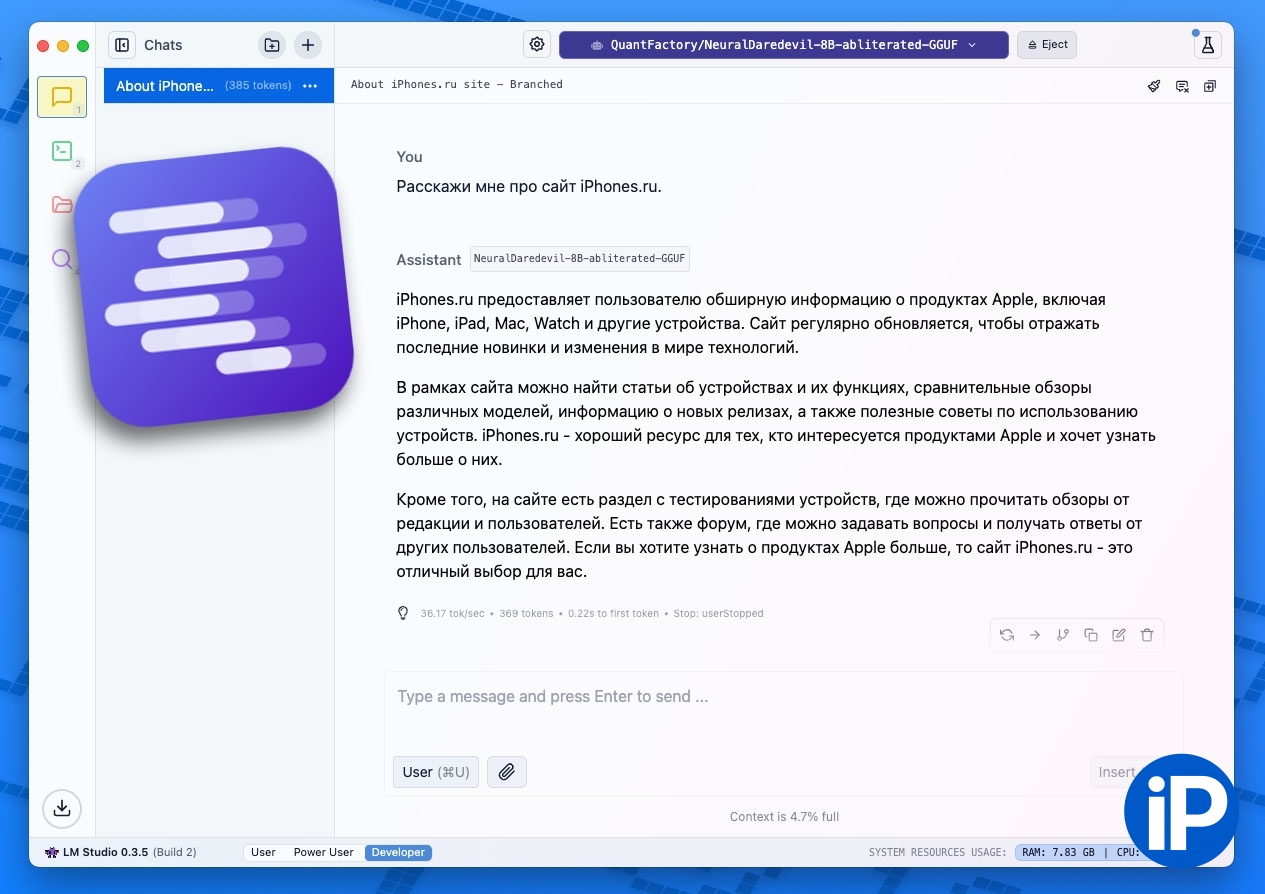

That program is LM Studio. It is little known to the general public, but in my view it is the best application for running LLMs (large language models) on ordinary computers.

Put simply, LM Studio lets you install a ChatGPT-like chatbot on your Mac or Windows PC and then use this “bot”:

▪ completely free of charge

▪ without sending any personal data to the internet AT ALL

▪ with complete privacy for your chats

▪ with a free choice of LLM to suit your needs and your computer’s performance.

The program is simple to install and very easy to use. If you wish, it can work with you in Russian. And yes, since no foreign company is imposing restrictions here, you can run a chatbot on your own computer without proprietary Western censorship of information and messages.

Let’s get started. Below we’ll look at how to install LM Studio, how to download and store models, and what your Mac or Windows PC needs to run these models effectively.

What your PC or Mac needs to run LM Studio

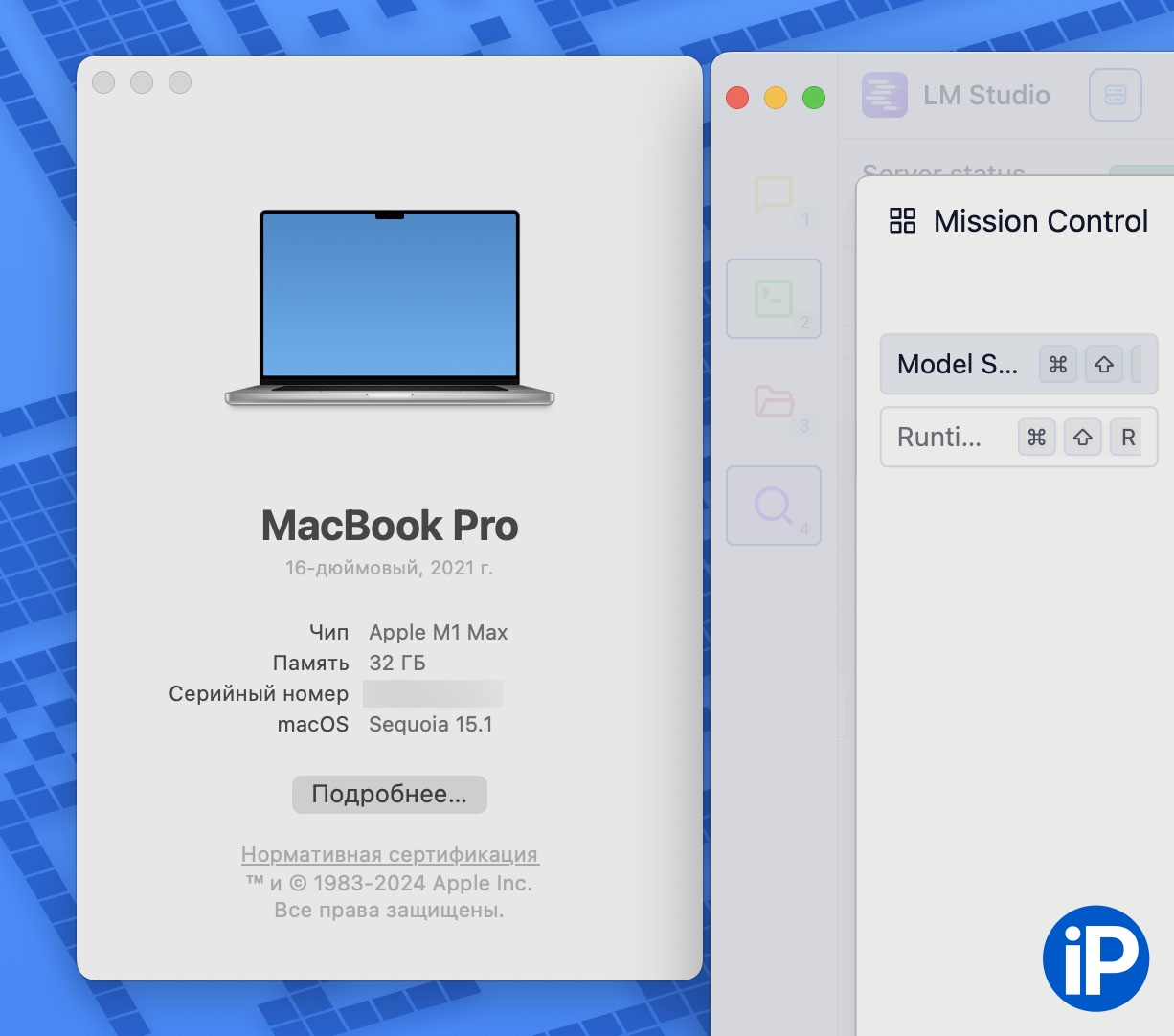

LM Studio itself installs easily on almost any modern computer, Mac or PC. The program’s own requirements are minimal. The requirements of the LLMs you are going to download and run, however, are a completely different matter.

The more video memory your computer has, the more powerful the LLM you can run on it. For reference, the most capable models are usually also the largest, requiring 128 GB of video memory or more!

But don’t be alarmed. To get at least 80% of what a modern LLM can offer, 8 GB of video memory is enough. And while that much video memory is a sore point for many Windows PC owners, things are much simpler with modern Macs.

In Apple’s M-series chips, video memory and RAM are one and the same (unified memory). So even the owner of a 2020 MacBook Air with an M1 chip and 8 GB of RAM can comfortably run a capable model and use it properly. And if your Mac has 64 GB of RAM or more, you can look at the very top LLMs!
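To make these memory figures a little more concrete, here is a rough back-of-the-envelope sketch in Python. It assumes that the model weights dominate memory use, that a 4-bit model needs half a byte per parameter, and that only about 70% of memory goes to the model (the same headroom recommended for model files further on). It ignores the chat context and runtime overhead, so treat the results as an optimistic upper bound rather than a guarantee; the function name is illustrative.

```python
# Back-of-the-envelope sizing: the largest model (in billions of parameters)
# whose weights fit in a given amount of video or unified memory.
# Assumptions: weights dominate memory use; only budget_fraction of memory
# is given to the model; context and runtime overhead are ignored.

def max_params_billions(memory_gb: float, bits_per_weight: float = 4.0,
                        budget_fraction: float = 0.7) -> float:
    """Upper bound on parameter count (billions) for the given memory."""
    budget_bytes = memory_gb * 1e9 * budget_fraction  # memory left for weights
    bytes_per_weight = bits_per_weight / 8            # 4 bits = 0.5 bytes
    return budget_bytes / bytes_per_weight / 1e9


for memory_gb in (8, 16, 64, 128):
    print(f"{memory_gb:>3} GB -> up to ~{max_params_billions(memory_gb):.1f}B parameters at 4 bits")
```

Under these assumptions, 8 GB works out to roughly 11 billion parameters at 4 bits and 64 GB to roughly 90 billion; real-world limits will be lower once context and overhead are counted.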

How to install LM Studio

1. Go to the LM Studio website (it is not blocked in Russia).

2. Download the program for your OS: macOS or Windows. There is also a Linux version.

3. Install it and launch it.

There is no registration and no account. To repeat: LM Studio is completely private and does not send data about your chats anywhere. The current version, 0.3, handles text queries and can analyze document files. Image generation and other content types are not supported yet. For images, by the way, I recommend Draw Things, an equally private and free program.

How to install an LLM model in LM Studio

LM Studio currently supports the following model families: Llama 3.2, Mistral, Phi 3.1, Gemma 2, DeepSeek 2.5, Qwen 2.5 and several others. You can also enter an OpenAI API key and use ChatGPT directly from the program over the internet, but that cancels out all the benefits of running locally and privately.

There is also a Russian-language interface. And if a model can speak Russian, or at least translate its answers from English into Russian on the fly, you can chat with it in Russian too, albeit through the language barrier of machine translation.

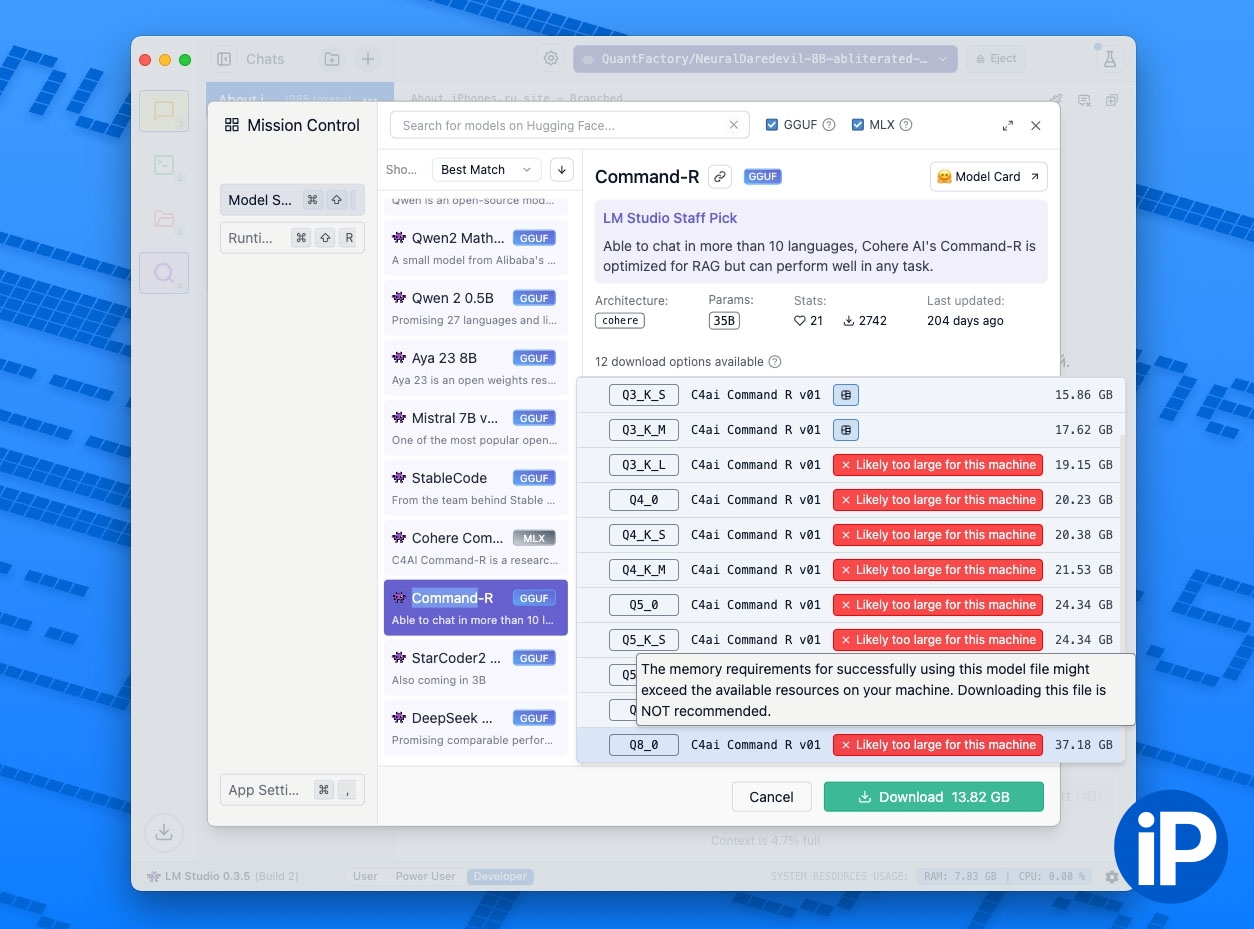

To download and install a model automatically, use LM Studio’s built-in model downloader, which fetches models from Hugging Face, the largest repository of such content. To do this, click the Discover icon (a magnifying glass) in LM Studio and pick any of the popular models offered. You can also paste the address of any other model on Hugging Face into the downloader’s search field.

Before downloading, be sure to check how large the model file is: the model will be loaded into your computer’s video memory. Choose a version that takes up no more than about 70% of your Mac’s or PC’s video memory. Large, “heavy” models are best downloaded in GGUF format as quantized, that is, “compressed” versions. These keep most of the model’s usefulness but take up several times less video memory than the “uncompressed” versions.

To start, I recommend the 4-bit version of Llama 3.2 3B Instruct. It weighs only 1.82 GB, so it will run on practically any Mac or PC.
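The size rule and the quantization savings described above can be checked with a little arithmetic. The Python sketch below is a rough estimate, not how LM Studio itself calculates anything: it assumes an “uncompressed” model stores 16 bits per weight (typical for released weights), takes the naive size as parameters × bits ÷ 8, and applies the 70% rule of thumb. The function names are illustrative.

```python
# Rough sketch: estimate model size from parameter count and quantization
# level, then apply the "no more than about 70% of video memory" rule of thumb.
# Names are illustrative; this is plain arithmetic, not an LM Studio API.

def weights_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Naive weight size in decimal gigabytes: parameters x bits / 8."""
    return params_billions * 1e9 * bits_per_weight / 8 / 1e9


def fits(model_gb: float, memory_gb: float, budget_fraction: float = 0.7) -> bool:
    """True if the model file stays within budget_fraction of memory."""
    return model_gb <= memory_gb * budget_fraction


memory = 8                     # GB of video/unified memory, e.g. an 8 GB M1 MacBook Air
fp16 = weights_size_gb(3, 16)  # assumed "uncompressed" 16-bit 3B model
q4 = weights_size_gb(3, 4)     # the same model quantized to 4 bits
print(f"16-bit: {fp16:.1f} GB, fits: {fits(fp16, memory)}")
print(f"4-bit:  {q4:.1f} GB, fits: {fits(q4, memory)}")
print(f"1.82 GB download, fits: {fits(1.82, memory)}")
```

On an 8 GB machine the 16-bit version (6.0 GB) exceeds the 5.6 GB budget, while the 4-bit version fits easily. The naive 4-bit estimate of 1.5 GB is a bit below the 1.82 GB file mentioned above: real quantized files usually also store scaling factors, often keep some layers at higher precision, and Llama 3.2 3B has slightly more than 3 billion parameters.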

LM Studio automatically downloads the selected model and prepares it for use. All that’s left is to open the Chat tab in the left sidebar and start talking to the model.

I advise everyone to try it

I won’t link to third-party models here, but I can say this right away: the selection is huge, and you can find both general-purpose models and ones tailored to a specific task. For example, there are assistant models for app developers, models for natural conversation, role-play models, jailbroken (“hacked”) models without censorship or political/moral restrictions, and so on.

I also won’t go into detail about the differences between “compressed” models and regular ones; that is a long and dry topic. I’ll just note that the larger the model and the less it is compressed, the better it tends to keep track of the conversation within a single chat, and the more varied and informative its answers are.

Actually owning a local chatbot that works without sending data to the internet, without subscriptions and without other controversial strings attached is very cool. That’s why I recommend that everyone here at least try LM Studio, including those who have so far deliberately avoided the world of LLMs and all the various ChatGPTs. Even Apple noticed the app and highlighted it, and that, as they say, is no accident.

Source: iPhones.ru

I am a professional journalist and content creator with extensive experience writing for news websites. I currently work as an author at Gadget Onus, where I specialize in covering hot news topics. My written pieces have been published on some of the biggest media outlets around the world, including The Guardian and BBC News.