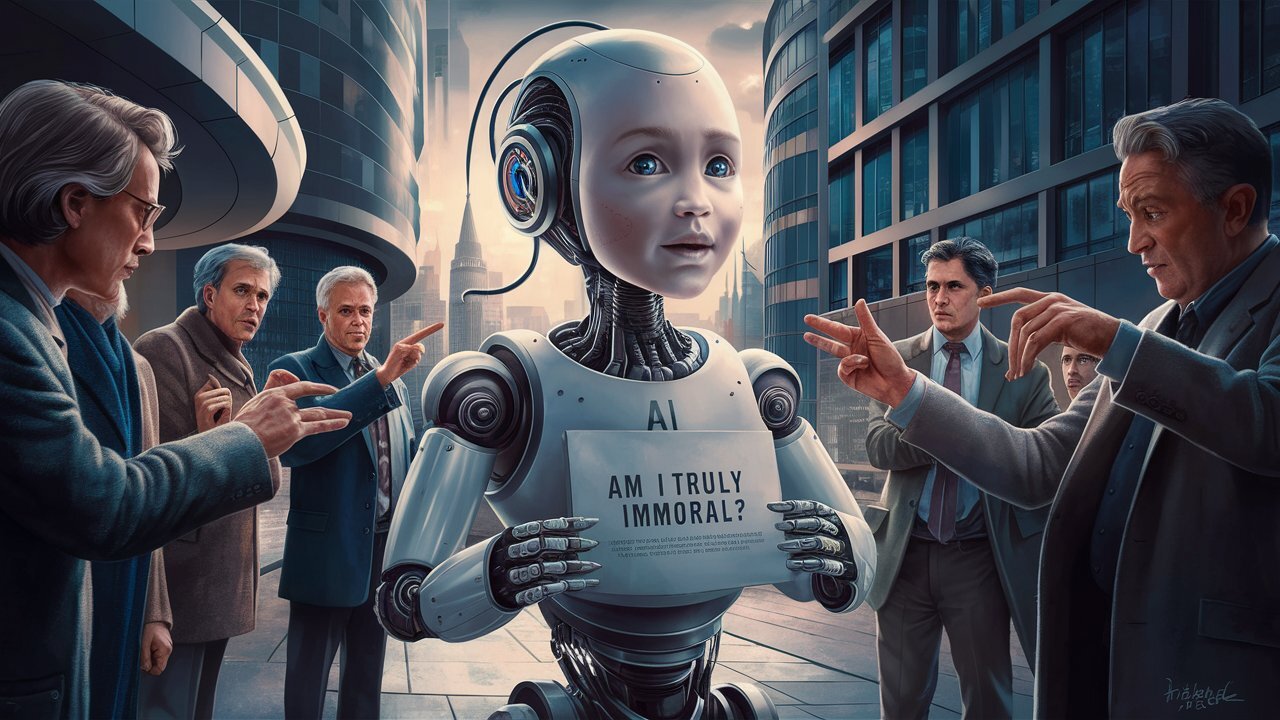

Participants were shown real-life examples of AI “failures,” such as racist captions on photos. When the AI was described with human-like attributes such as a name, age, or hobbies, people blamed it more. At the same time, when blame was shared among the parties involved, they placed less blame on the company behind the AI.

Interestingly, blame on the company did not decrease when participants were asked to rate each party's blame separately. Joo warns that this trend could lead to AI being used as a scapegoat for failures, reducing the accountability of “stakeholders” such as programmers or companies.

Source: Ferra
