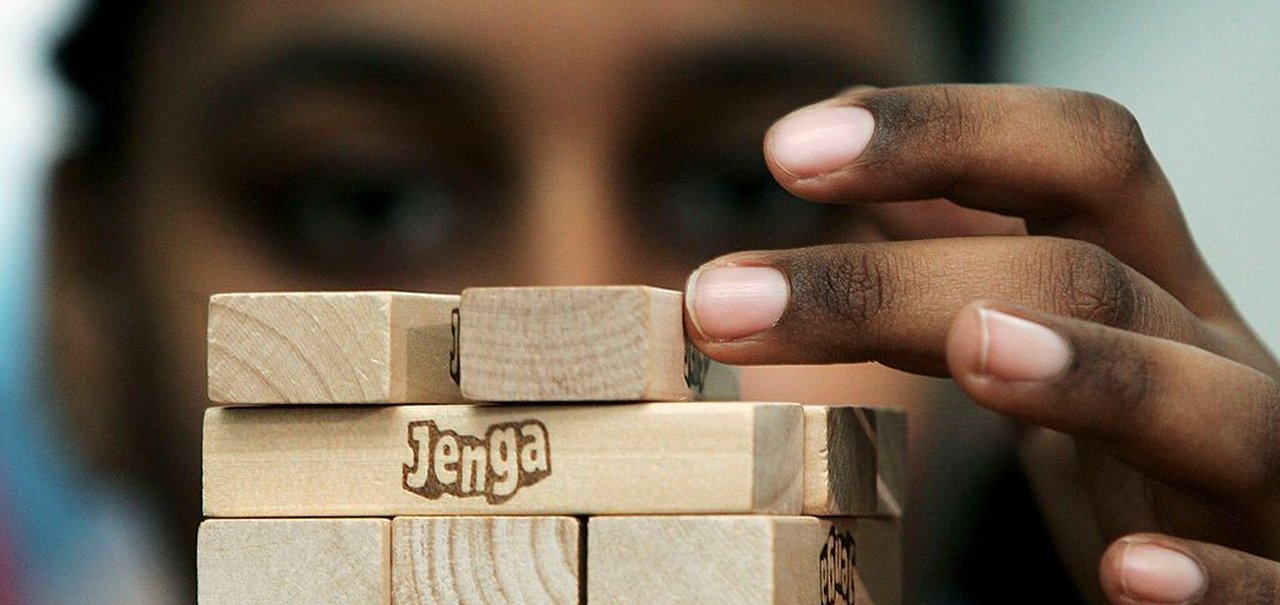

Do you know Jenga? Created by Leslie Scott in the 1980s, Jenga is a game of skill and strategy. Players take turns removing one block at a time from a stacked tower and placing it on top, until the tower falls. The game teaches a few lessons: stay focused, plan ahead, and value stability. We could draw many analogies with everyday life, but here we will deal with cloud computing and AI.

In recent years, cloud services have become the backbone of numerous technological innovations, including artificial intelligence solutions. However, the growing complexity of these services has brought a new risk paradigm: the Jenga approach.

In technology, this concept describes a serious risk for AI services: services are built on top of other services, forming a tower of dependencies and configurations that can collapse because of a single error. Cloud misconfigurations, especially in AI environments, can therefore have serious security consequences.

In practice, this approach is characterized by stacked services in which each layer inherits the defaults and configurations of the layer beneath it. This includes excessive permissions and risky exposures that users may not notice.

In AI environments, where data security and service reliability are critical, these security gaps can have serious consequences. One of the main problems is how difficult it is to identify and fix misconfigurations. Because the services depend on one another, an excessive permission granted in one layer can spread throughout the structure, exposing sensitive data or allowing unauthorized access.

This automatic inheritance of settings also makes the risks harder to detect, because it hides them.
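To make the inheritance problem more concrete, here is a minimal sketch in Python. It does not model any real cloud provider: the `ServiceLayer` class, the service names, and the `public_access` and `role` settings are all hypothetical, chosen only to illustrate how a value set in a lower layer can silently reach the top of the stack.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceLayer:
    """One hypothetical service in a stack; names and settings are illustrative."""
    name: str
    # Settings this layer declares explicitly; anything absent is inherited.
    overrides: dict = field(default_factory=dict)


def effective_settings(stack):
    """Resolve settings bottom-up and record which layer each value came from."""
    resolved = {}
    for layer in stack:  # stack[0] is the base service, stack[-1] the top one
        for key, value in layer.overrides.items():
            resolved[key] = (value, layer.name)
    return resolved


# Assumed example stack: an AI training service built on a notebook service,
# which in turn is built on a storage service.
stack = [
    ServiceLayer("storage", {"public_access": True, "role": "editor"}),
    ServiceLayer("notebook", {"role": "viewer"}),
    ServiceLayer("ai_training", {}),  # declares nothing, inherits everything
]

for key, (value, origin) in effective_settings(stack).items():
    print(f"{key} = {value!r} (set by {origin})")
```

In this toy stack, the `ai_training` layer never mentions `public_access`, yet it ends up with the value chosen by `storage`. Recording the origin of each setting is one simple way to make that kind of hidden inheritance visible.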

In the context of managed AI services, the potential effects are even more worrying. If exploited, these security gaps can compromise machine learning models, expose confidential data, and even enable attacks that alter the results of AI systems.

For example, imagine a predictive analysis system used in health care or public safety being manipulated because of an incorrect inherited configuration. The implications are not only technical, but also ethical and social.

Reducing the risks of the Jenga approach requires rethinking cloud design and service management practices. Providers should adopt a “secure by design” philosophy, prioritizing transparency in settings and offering more accessible tools to control and monitor permissions. Users, in turn, need to be proactive: review their settings regularly and make an effort to understand the dependencies between the services they use.
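As a rough illustration of what such a regular review might look like, the sketch below walks a dependency graph and flags permissions that exceed what each service is meant to have. Everything here is an assumption made for the example: the service names, the permission strings, and the simplified rule that a service effectively holds the permissions of everything it depends on. Real cloud platforms expose this information through their own tools and APIs.

```python
def inherited_permissions(service, depends_on, granted, _seen=None):
    """Collect permissions a service holds directly or via its dependencies."""
    seen = _seen if _seen is not None else set()
    if service in seen:  # guard against circular dependencies
        return set()
    seen.add(service)
    perms = set(granted.get(service, ()))
    for dep in depends_on.get(service, ()):
        perms |= inherited_permissions(dep, depends_on, granted, seen)
    return perms


def audit(depends_on, granted, allowed):
    """Return, per service, the permissions that exceed its allowlist."""
    findings = {}
    for service in depends_on:
        held = inherited_permissions(service, depends_on, granted)
        excess = held - allowed.get(service, set())
        if excess:
            findings[service] = sorted(excess)
    return findings


# Hypothetical inventory: which service builds on which, what each one is
# granted directly, and what each one is supposed to be able to do.
depends_on = {
    "ai_endpoint": ["training_job"],
    "training_job": ["storage"],
    "storage": [],
}
granted = {
    "ai_endpoint": {"model.predict"},
    "training_job": {"model.train"},
    "storage": {"storage.read", "storage.write", "storage.delete"},
}
allowed = {
    "ai_endpoint": {"model.predict", "model.train", "storage.read"},
    "training_job": {"model.train", "storage.read", "storage.write"},
    "storage": {"storage.read", "storage.write", "storage.delete"},
}

for service, excess in audit(depends_on, granted, allowed).items():
    print(f"{service}: unexpected permissions {excess}")
```

With this sample data, the audit reports that `ai_endpoint` and `training_job` both reach storage permissions they were never meant to have, even though neither was granted them directly.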

Jenga is fun because each move increases the risk of collapse and creates constant tension. That is not, however, an acceptable model for the security of critical systems. In a world where artificial intelligence plays an increasingly central role, it is important that technology professionals treat the Jenga approach as a warning, not as a development model.

It is everyday actions that build a secure and healthy computing environment, just as every small Jenga block helps turn a stack into a tower.

Source: Tec Mundo

I am a passionate and hardworking journalist with an eye for detail. I specialize in the field of news reporting, and have been writing for Gadget Onus, a renowned online news site, since 2019. As the author of their Hot News section, I’m proud to be at the forefront of today’s headlines and current affairs.