Over the past year, people have become quite familiar with the most obvious signs that a text was written by AI rather than by a human.

For example, we now often see the em dash (—), which is not easy to type on a regular keyboard. And if a social media post is built from lists with a subheading for every sentence, its origin is completely obvious.

But OpenAI, Sber, Yandex and other companies release new model versions every six months, and the language keeps improving. I've noticed that I sometimes wonder whether what I'm reading was actually written by a person.

I decided to collect all the signs in one place, and now I see through such bloggers in no time. I'm sure this cheat sheet will come in handy for you too.

Let's start with the least obvious tells and work our way down to the most obvious ones.

BRIEFLY

◦ AI will stretch anything to fit the request

◦ Chatbots love to take the author's side

◦ The tone is needlessly energetic

◦ The presentation "pumps up" your emotions

◦ AI jumps from style to style

◦ Some words ordinary people just don't say

◦ AI often compares things that don't belong together

◦ Obvious signs give chatbots away immediately


1. AI will stretch anything to fit the request

ChatGPT and other chatbots are popular tools for writing social media posts.

As a rule, for a short video to take off, you need to publish exotic information that is new, shocking and packed with as much energy as possible.

So bloggers ask generative models to write instructions for achieving unrealistic goals.

These are posts like:

“How I grew 10 centimeters after 25 years”

“I lost 10 kg in a month without giving up cakes”

Further down, the description offers realistic-sounding instructions (“hang from a horizontal bar for 2 hours a day”, “bake cakes with vegetables”), but no normal person can keep up with the pace these tips demand.

Advice: If a post gives instructions for pulling off something shocking, it is unlikely that the author came up with them himself.

2. Chatbots love to take the author's side.

Sometimes AI is willing to write things that aren't true.

It takes your opinion and backs it up with strange facts that are hard to connect to the topic, even when the topic itself is rife with misinformation.

The most obvious case is when the text says something like:

• “And although there is no scientific evidence, there are arguments that the idea may be correct.”

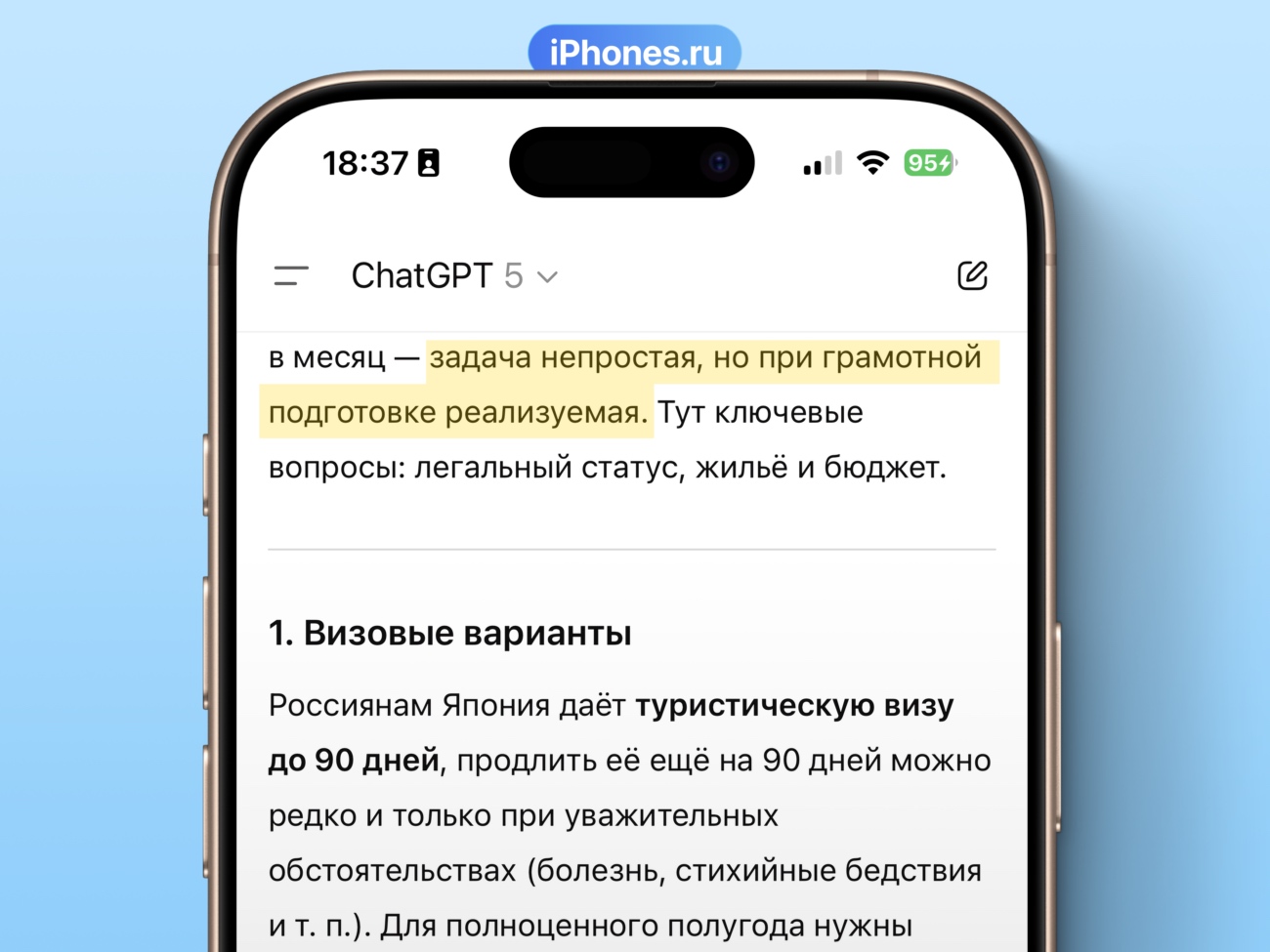

• “The task is not easy, but with proper preparation it is achievable.”

In other words, the bot understands that the idea it is defending is wrong, but, like an overly helpful friend, it still tries to find at least a few grains of support for it.

Advice: An overly reassuring text that motivates you to do impossible things was not written by a person.

3. The tone is needlessly energetic.

This is especially true of ChatGPT, which likes to open with punchy, clipped constructions instead of ordinary introductory phrases.

For example, the text contains:

• “Inside – ready-made cover templates”

• “This smell is in the cheap plastic bags of many people I know – it really reminds me…”

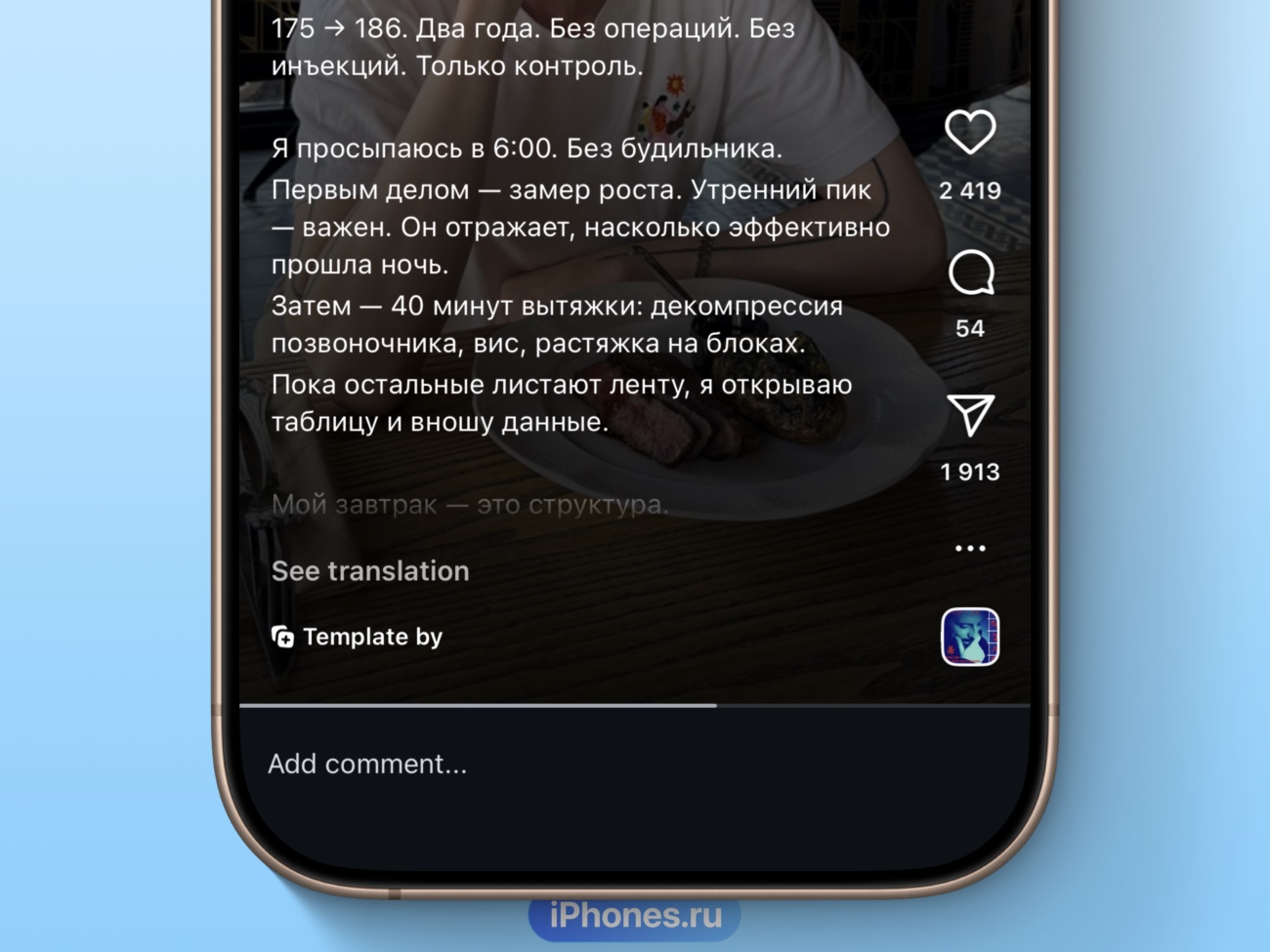

• “Two years. No surgery. No injections. Only control.”

These phrases could easily do without the choppy, fragmented syntax, and they would say exactly the same thing.

Advice: Watch for text written in an upbeat tone where none is called for. Technical instructions don't need life coaching.

4. The presentation “pumps up” your emotions

Almost every AI today struggles to stay neutral and usually writes as if it were trying to “push” or sell something to the reader.

Generative models like to slip in an evaluative remark right after stating a fact. This is how they comment on information without any evidence to back it up.

At first glance, this gives the text more weight, but in reality it pads it with unnecessary or even made-up opinions.

When the text is meant to stir emotions, you always see words like “breathtaking”, “rich in detail”, “special properties”, “brilliant” and so on.

When it is meant to describe a solution, it reaches for working phrases like “providing”, “highlighting”, “emphasizing”, “reflecting”.

But the key point is that in both cases these “triggers”, so to speak, are used without any facts to support them.

Advice: Does the text seem full of emotional filler and idle talk? That's AI.

5. AI jumps from style to style, even within one answer

Every person has their own way of writing, with recurring patterns in word choice. Even when an author is short on information or leaves something out, those characteristic features remain.

AI, by contrast, can easily switch from one tone to another with each new answer.

Sometimes, even within a single text, the presentation can abruptly change tone and shift from informative to creative.

Examples within one answer:

• “Based on budget and convenience, traveling by train is common.”

• “St. Petersburg is famous for its international historical monuments”

Here you can see that the AI received a request and answered it first in an overly official style, then switched to a literary one.

Advice: If you suspect you're looking at generated text, simply compare two different posts by the same person and see whether the style matches.

6. Words and phrases you don't hear in everyday speech.

In other words, AI suffers from polite bureaucratese, which can tip over into mawkishness.

The examples above fit here as well:

• “Based on budget and convenience, a train ride constitutes the economical option”

• “Saint Petersburg is renowned for its historical monuments”

Words like “constitutes”, “economical” and “renowned” are rarely used in conversation and are unlikely to show up in people's own posts.

Advice: Any text that reads like it was lifted from an official certificate or a school essay points not to a person but to a generative model.

7. AI often compares things that don't belong together.

This technique is known as negative parallelism, a term borrowed from literary studies.

It is much easier to spot than the signs mentioned earlier.

It looks like this:

• “It can be not only incredibly hot there, but also difficult”

There are similar constructions that, strictly speaking, don't fall under parallelism:

• “It’s 1 click, but always be careful.”

That is, the text contrasts two facts that, in principle, make no sense to compare.

Advice: A text that awkwardly pairs two unrelated properties in one sentence sounds convincing only until you read it a second time.

8. And the completely obvious signs, for those who still can't tell the difference.

Emoji and lists. If you see a message clearly structured as a list with items of the same length, or with an emoji before each new item, it was almost certainly written by AI.

Words highlighted with asterisks. Depending on how the text is copied out of the chat, words that were bold or italic in the answer sometimes end up wrapped in asterisks, because chatbots format their answers with Markdown, a lightweight markup language.

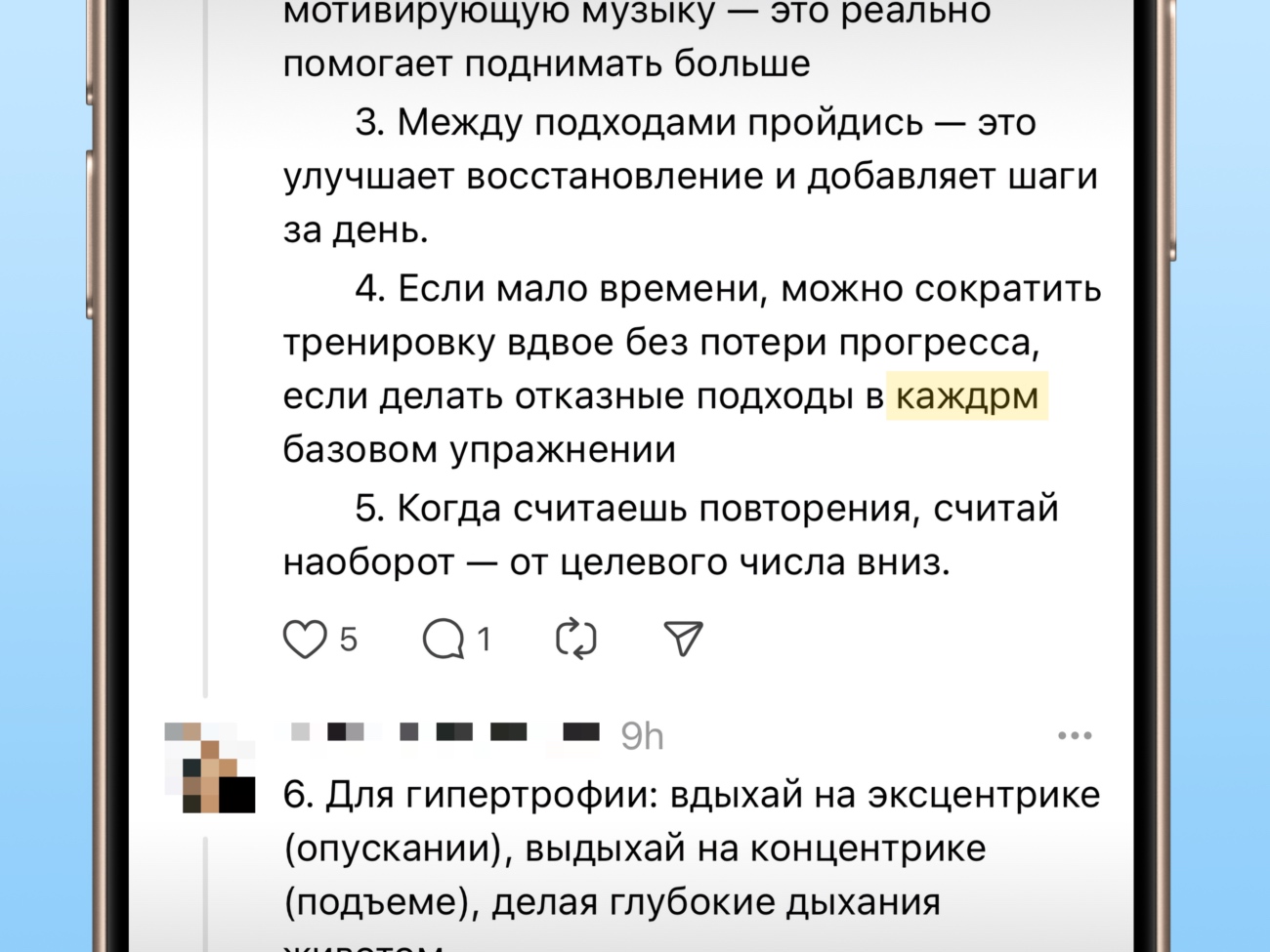

Perfect punctuation, grammar and no typos. Of course, this is a requirement for any good writer, but people still make mistakes or miss them. If you see syntactically complex constructions that are nevertheless flawless, there is reason to be wary.

Strange typos count here too. Nobody whose text is otherwise flawless suddenly writes “kazhdrm” instead of “each”.

Unexpected pieces of code. Sometimes AI hallucinates and inserts a chunk of strange code into its answer. If you see this, you can close the page.

Introductory statements. Anyone who more or less understands the informational style doesn't open a post, article or other material with filler phrases like “Today we’ll talk about…”. Such a phrase might appear a bit later in the introduction or somewhere deeper in the text, but only AI will open the story with it.

Tagged links. Some bots cite sources in their answers, and the marker “&utm_source=chatgpt.com” gets added to the end of the link. Here, too, everything becomes clear at once.
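Two of these signs, leftover Markdown asterisks and tagged links, are easy to check automatically. Below is a minimal sketch in Python, assuming you have the post as plain text; the helper names `chatgpt_links` and `asterisk_fragments` are hypothetical and not part of any existing tool. Both checks are rough heuristics: people use asterisks too, and a link without the marker proves nothing.

```python
import re
from urllib.parse import urlparse, parse_qs

# Rough URL matcher: "http(s)://" followed by any non-space characters.
URL_RE = re.compile(r"https?://\S+")
# Heuristic for Markdown emphasis residue such as **word** or *word*.
ASTERISK_RE = re.compile(r"\*{1,2}[^*\s][^*]*?\*{1,2}")
# Punctuation that often sticks to the end of a URL in running text.
TRAILING = ".,;:!?)]\"'"


def chatgpt_links(text: str) -> list[str]:
    """Return URLs whose query string contains utm_source=chatgpt.com."""
    found = []
    for raw in URL_RE.findall(text):
        url = raw.rstrip(TRAILING)  # drop a trailing period, bracket, etc.
        params = parse_qs(urlparse(url).query)
        if "chatgpt.com" in params.get("utm_source", []):
            found.append(url)
    return found


def asterisk_fragments(text: str) -> list[str]:
    """Return fragments that look like leftover Markdown bold/italics."""
    return ASTERISK_RE.findall(text)


if __name__ == "__main__":
    post = ("Read more: https://example.com/guide?id=5&utm_source=chatgpt.com. "
            "This is **really** important.")
    print(chatgpt_links(post))
    print(asterisk_fragments(post))
```

Parsing the query string with `urllib.parse` instead of searching for the raw substring means the marker is found whether it follows `?` or `&`.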

—-

That's the whole list.

Although generative models keep improving, after three years they still haven't learned to fully pass as a real person. Let's hope it stays that way.

Which features have you noticed that convinced you a text was written by a robot? Let me know in the comments!


Source: Iphones RU

I am a professional journalist and content creator with extensive experience writing for news websites. I currently work as an author at Gadget Onus, where I specialize in covering hot news topics. My written pieces have been published on some of the biggest media outlets around the world, including The Guardian and BBC News.