Researchers at the University of California, Berkeley (UC Berkeley) have developed PPI, an approach that makes conclusions drawn from AI predictions and calculations more reliable. The study was published in the journal Science.

Artificial intelligence speeds up data processing, improves error detection, and can even predict protein structures and their interactions, so it plays a fundamental role in building knowledge. However, the technology has drawbacks: artificial intelligence is not error-free, and it has limitations.

Learn a little more about the history of artificial intelligence, its impact on humans and on the development of science, and a possible solution to its limitations.

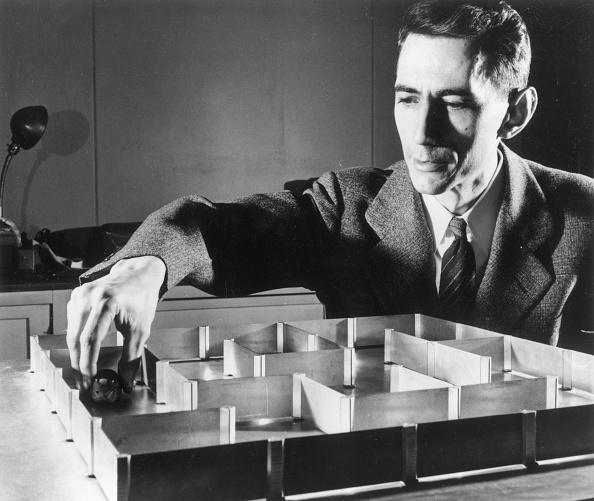

Theseus and the labyrinth

One of the earliest examples of artificial intelligence was built in 1950 by American mathematician and electrical engineer Claude Elwood Shannon, known as the “father of information theory”. His experiment involved an electronic mouse that found its way out of a maze by memorizing the paths it had explored.

In a nod to the myth of the Minotaur’s Labyrinth, Shannon named his mouse after the Greek hero Theseus, who braved the labyrinth and slew the monster. The term “artificial intelligence” was coined by American mathematician John McCarthy for the 1956 workshop at Dartmouth College.

Early AI work had an almost allegorical character, showing how machines could outperform human intelligence, as when IBM’s Deep Blue defeated world chess champion Garry Kasparov in 1997.

But if AI was once a curiosity at science fairs, today it plays a huge role in how we develop knowledge and test new hypotheses. And that could be a problem.

Bias in Artificial Intelligence

Anyone who thinks artificial intelligence is neutral is wrong. Any artificial intelligence, regardless of how it is built, can have biases. The data we feed into it can affect how information is processed and what results it returns to us.

For example, anyone who has used ChatGPT for research may have come across strange, inaccurate, or even confusing information. This raises a warning about using artificial intelligence in science: its answers are not always reliable.

The development of science is based on the search for new knowledge and the understanding of still-unexplained phenomena of nature and human existence. It’s easy to recognize information as wrong if we know the right answer, but if we don’t even know what the question is, how can we tell right from wrong?

Artificial intelligence is excellent at predicting and identifying certain events in certain fields. However, when its predictions are used in data grouping and statistical analysis as if they were real measurements, the results can be unreliable.

To minimize this problem and attach confidence intervals to conclusions drawn from AI predictions, a team of researchers from UC Berkeley developed a method called prediction-powered inference (PPI).

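PPI works as a kind of data “matcher”. It takes a large set of AI predictions about the outcomes being studied and combines them with a small portion of reliable, unbiased data, such as measurements made by the researchers themselves. The small dataset shows how far the predictions stray from reality, and PPI uses it to correct them, producing a reliable statistical range within which researchers can compare results and assess their plausibility.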
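To make the idea concrete, here is a minimal Python sketch of the simplest case such a method can handle: estimating an average. It is not the Berkeley team’s code. It assumes a normal-approximation 95% interval, and the simulated data, the model’s +0.5 bias, and every function name (`simulate`, `ppi_mean_ci`, and so on) are hypothetical. The PPI estimate takes the average AI prediction on the large set and subtracts the average prediction error (the “rectifier”) measured on the small set where the true outcomes are known.

```python
import math
import random
import statistics


def normal_ci(estimate, std_err, z=1.96):
    """Symmetric normal-approximation interval (z=1.96 gives ~95%)."""
    return (estimate - z * std_err, estimate + z * std_err)


def classical_mean_ci(y_labeled, z=1.96):
    """Uses only the small, trusted labeled sample: unbiased but wide."""
    est = statistics.fmean(y_labeled)
    se = statistics.stdev(y_labeled) / math.sqrt(len(y_labeled))
    return est, normal_ci(est, se, z)


def naive_mean_ci(preds_unlabeled, z=1.96):
    """Treats AI predictions as if they were real measurements: can be biased."""
    est = statistics.fmean(preds_unlabeled)
    se = statistics.stdev(preds_unlabeled) / math.sqrt(len(preds_unlabeled))
    return est, normal_ci(est, se, z)


def ppi_mean_ci(y_labeled, preds_labeled, preds_unlabeled, z=1.96):
    """Prediction-powered estimate of a mean with a normal-approximation CI."""
    n, big_n = len(y_labeled), len(preds_unlabeled)
    # Rectifier: prediction error measured where the true outcome is known.
    rectifier = [f - y for f, y in zip(preds_labeled, y_labeled)]
    # Average prediction on the big set, corrected by the average error.
    est = statistics.fmean(preds_unlabeled) - statistics.fmean(rectifier)
    se = math.sqrt(statistics.variance(preds_unlabeled) / big_n
                   + statistics.variance(rectifier) / n)
    return est, normal_ci(est, se, z)


def simulate(n_labeled=200, n_unlabeled=20_000, seed=0):
    """Hypothetical data: true mean of y is 2.0; the 'model' overshoots by 0.5."""
    rng = random.Random(seed)

    def draw():
        x = rng.gauss(0, 1)
        y = 2.0 + x + rng.gauss(0, 0.5)  # true outcome
        f = 2.5 + x                      # biased model prediction
        return y, f

    labeled = [draw() for _ in range(n_labeled)]
    unlabeled = [draw() for _ in range(n_unlabeled)]
    y_lab = [y for y, _ in labeled]
    f_lab = [f for _, f in labeled]
    f_unl = [f for _, f in unlabeled]  # true outcomes here are discarded
    return y_lab, f_lab, f_unl


if __name__ == "__main__":
    y_lab, f_lab, f_unl = simulate()
    results = [
        ("classical", classical_mean_ci(y_lab)),
        ("naive", naive_mean_ci(f_unl)),
        ("PPI", ppi_mean_ci(y_lab, f_lab, f_unl)),
    ]
    for name, (est, (lo, hi)) in results:
        print(f"{name:>9}: estimate={est:.3f}  95% CI=({lo:.3f}, {hi:.3f})")
```

In this simulation, the naive interval should be narrow but centered near 2.5, missing the true mean of 2.0; the classical interval, built from the 200 labeled points alone, is correct but wide; the PPI interval corrects the bias while staying narrower than the classical one, because the prediction errors vary less than the outcomes themselves.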

Overcoming this type of AI limitation is essential for knowledge to be developed more broadly, with good predictions of the interactions between different fields of science.

Old-fashioned science?

Artificial intelligence will always respond according to how it was programmed. It cannot reliably generalize or “think” about variables it has not yet been exposed to. Machine learning is very effective, but its limitations still call for human error correction.

To produce science, people still need to think critically about their hypotheses and experiments and explain how reliable their results are. Artificial intelligence is, and should remain, a tool for advancing science, not a substitute for scientific judgment.

Science depends on technology and increasingly benefits from artificial intelligence, which is used to analyze laboratory samples, estimate how likely diseases are to develop, and much more.

We are unlikely to return to the era of manual panels and calculations. That’s why studies like the UC Berkeley team’s are crucial to improving AI, making it more reliable, and ultimately improving the quality of the studies to which it is applied.

Source: Tec Mundo

I’m Blaine Morgan, a journalist and writer with over 8 years of experience in the tech industry. My expertise lies in writing about technology news and trends, covering everything from cutting-edge gadgets to emerging software developments. I’ve written for several leading publications, including Gadget Onus, where I am an author.