I think everyone remembers the wild news and breakthroughs in artificial intelligence and machine learning that came in late 2022 and early 2023. ChatGPT, Midjourney, Stable Diffusion, and other technologies and services that had previously seemed impossible to the general public burst into reality and became an integral part of it.

However, since the beginning of summer, the hype seems to have died down. New neural networks and unusual services based on them appear almost every week, but the most attention they get is a couple of days of media mentions and a bunch of funny and amazing examples… and that seems to be where it ends.

Since then, many people, including in our comments, have repeatedly stated that the ML boom has passed. Or that it was just a silly craze.

But in fact, a new revolution in the world of technology is being prepared in the largest companies around the world, and in little-known ones too. I believe that you and I are standing on the threshold of a new device, one that no one has had to date.

It is a personal, unique, portable ML assistant with the beginnings of real artificial intelligence.

Wait, there are already plenty of assistants!

Forget for a moment the “assistants” we have been using for the last 12 years. Siri, Google Assistant, Alice, and others like them are built on ideas about “artificial intelligence” that are by now fairly dated. Setting aside the subtleties of recognizing intent and speech, these “assistants” work exactly as they were programmed. You ask a question that someone has already thought through and written an answer to.

Simply put, these are just algorithms that can carry out a task only because the whole process, from beginning to end, was thought out by someone in advance.

Modern speech recognition and synthesis, progress in machine learning models, the capabilities of mobile processors, and growing Internet speeds make it possible to build real assistants of a completely new type within the next five years.

What will they be capable of, and how will they differ from today’s?

Here are the possibilities of a personal assistant in the near future

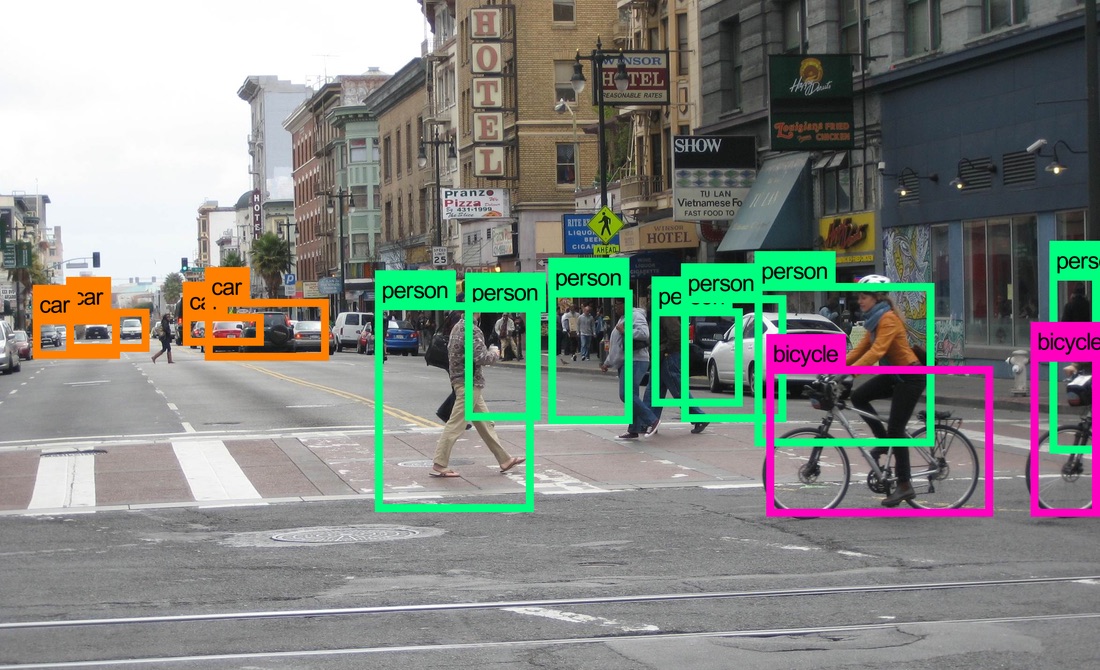

◈ Computer vision. By this I mean processing and analyzing visual information and then putting responses and actions in context based on it. Today’s “assistants,” when forming a response, can only rely on ready-made algorithms and data from the Internet or from the sensors of IoT systems. Moreover, even the latter are used almost exclusively to manage a “smart home”.

An assistant that can see the space around it and recognize people and objects will, by default, be able to bring a person much more benefit, from advice on appearance to appropriate answers to medical questions.

◈ Multitasking. Here I mean the assistant’s ability to answer several questions at once. If you ask Siri what the weather is like now, she will easily give you data from the Internet. But ask her in the same breath about your daily schedule, the latest news, and her opinion on some question, and you will get either an answer to only one question or an error.

A model assistant of, say, 2025 will not only be able to give several answers to several questions in a row, but also keep the relevant context from one, several, or all previous queries as it continues to answer.

◈ Free communication. “It’s hot in the room,” you tell Siri. To this she will answer… well, nothing, because she has no useful answer to such a phrase.

A new-generation assistant will suggest turning on the air conditioner, empathize, or offer other appropriate help in response. It recognizes what you actually want, even if you don’t follow a fixed query structure. Moreover, it will perform not one but several actions at once, if necessary.

You don’t have to set it up in advance for this to happen, because such an assistant understands the context, the request, and the purpose, even when they don’t lie on the surface. Almost like a living person. By the way, the same applies to its spoken answers, thanks to progress in realistic speech synthesis.

Chatbots are already capable of not only reproducing “characters”, but also changing them after prolonged communication with the user.

◈ Personification. The assistant will be able to have a unique “character”, expressed in its tone of speech, its own turns of phrase, and the semantic and practical content of its answers. Today’s assistants have nothing that could objectively be called character. According to the tradition established 15 years ago, they must be the same for everyone, comply with the guidelines and values of some giant corporation, and gently instill its morals in the owner; after all, it is the corporation that configured the assistant.

The assistant of the near future will develop locally, within a single device or account. This will allow the company to publicly “let it go”, decouple its responses from the brand, and thereby let the machine learning and speech synthesis model behave the way the user wants.

◈ Personalization. By constantly learning your needs and studying your surroundings, your daily routine, and a host of other data, the next generation of assistants can truly become yours, like a real personal butler or personal aide.

The huge volume of data and its local processing will enable the assistant not only to give relevant answers to queries, but also to suggest, even advise, exactly the right thing, something that no modern assistant would ever have “thought of.” It will know the personal boundaries and expectations of its owner, rather than merely offering a set of actions pre-programmed by the manufacturer.

Everything you need for a new generation of assistants already exists

If the points above seem completely impossible and unrealistic to you, I have good news. Absolutely every one of them, albeit in different ways, is already at least half implemented today, in hundreds of different projects, products, and even devices.

Computer vision is built into iOS and Android in its basic form. It recognizes scenes, objects, and the context of shots in your photo library. Even Yandex has a camera feature in its app that recognizes objects and searches for them on the Internet. I don’t even see the point in talking about the progress made in video surveillance of everything in the world; here China is ahead of the rest of the planet.

Multitasking is a solved problem for OpenAI’s ChatGPT. It remembers the context of previous requests and takes them into account in later responses within the same “chat”. Free communication belongs here too. Add to that speech synthesis, which you have already seen a hundred times in video clips with the voices of famous people; more recently, such speech can also be translated into another language while preserving the voice and speech characteristics of the individual.

Personification will surprise only someone who is far from the Internet. For a year and a half to two years now, advanced chatbots have been able to realistically imitate other people and even fictional characters, preserving their character traits. Some services, such as Replika, allow bots to gradually adapt to each individual user, eventually convincingly imitating a person with a certain character.

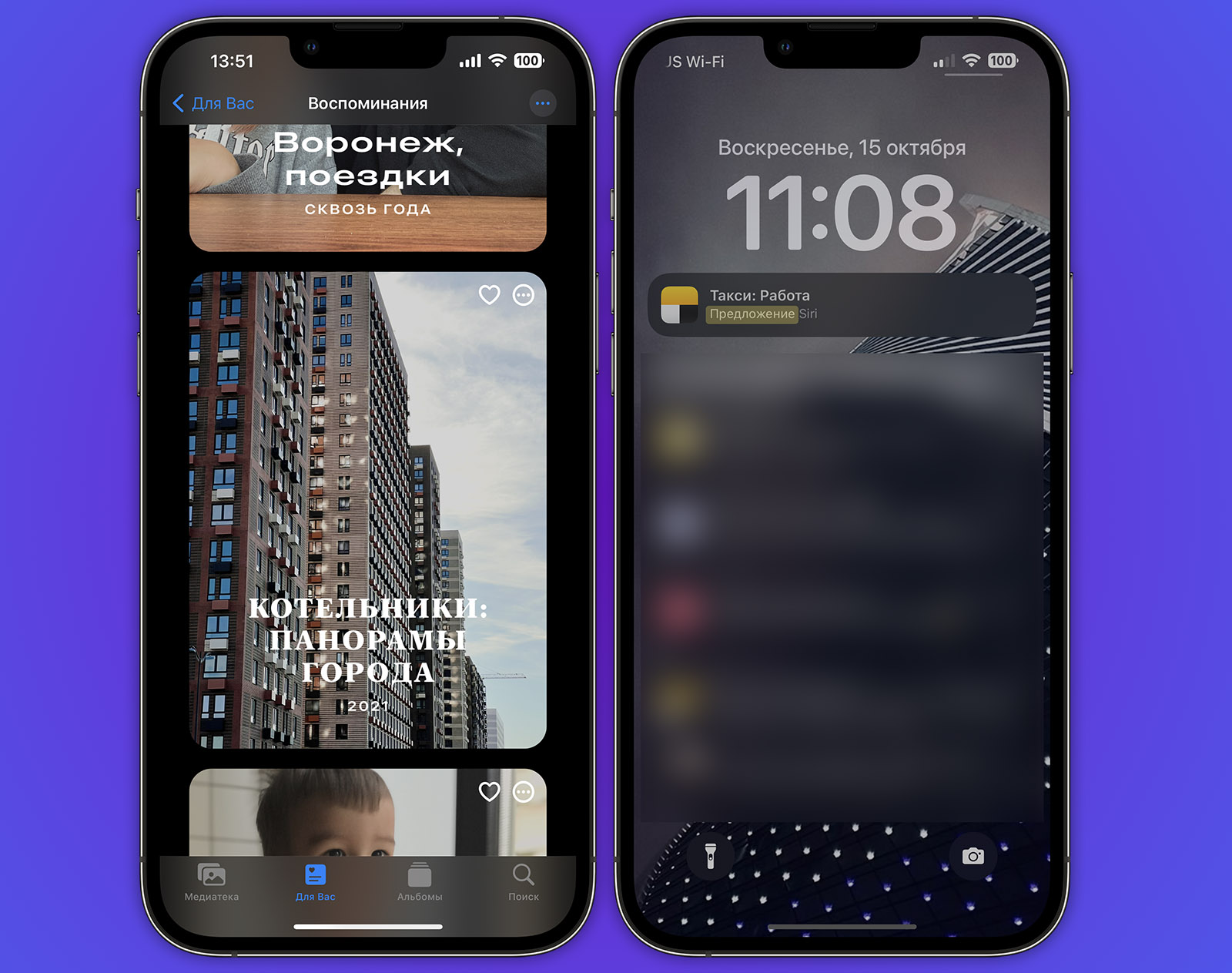

Personalization has also been with us for many years. Siri already knows when you most often order a taxi or open certain applications, depending on the situation. Google Assistant knows even more.

I could go on for a long time, citing thousands of examples, but I hope you get the idea. Everything needed for the birth of a new generation of assistants, and of devices built around them, exists not tomorrow but today. All that remains is to bring it together into a single product.

In the end, there are two main questions: who will be the first and who will be the best?

Brooch-assistant with ChatGPT from Humane. Not officially announced yet

Companies have already publicly announced plans to create a product centered on just such an ultra-modern AI and ML assistant.

Humane is creating a brooch with a projector, tied to its own developments and to ChatGPT’s capabilities. Jony Ive, together with OpenAI, joined this race by announcing the start of work on a similar product. Cupertino urgently wants to modernize Siri and bring similar chat systems to iOS, macOS, and the company’s services and applications by the fall of next year. The presentation of the latest version of Android was 90% devoted to practical applications that weave together the ideas and technologies described above.

So now we are waiting to see who will be first, and then we will see who will be the best. Surprisingly, this entire field is currently wide open: no player has a finished product in it, which means that all companies are on more or less equal footing. Right now, another revolution in the world of technology is brewing here, one whose impact may later be safely compared to the release of the iPhone.

We are waiting.

Source: Iphones RU
