In a paper published on arXiv, Apple developers describe ReALM (Reference Resolution As Language Modeling), a large language model (LLM) that can answer user queries more accurately by using what is shown on the device screen along with information about current tasks and context.

According to experts, ReALM does not just process text: by “looking” at the screen, it also draws on both conversational and reference information. This allows the AI to better understand what the user means and provide the exact information they are looking for.

Testing of the model has shown that it outperforms GPT-4 and other LLMs on certain types of tasks.

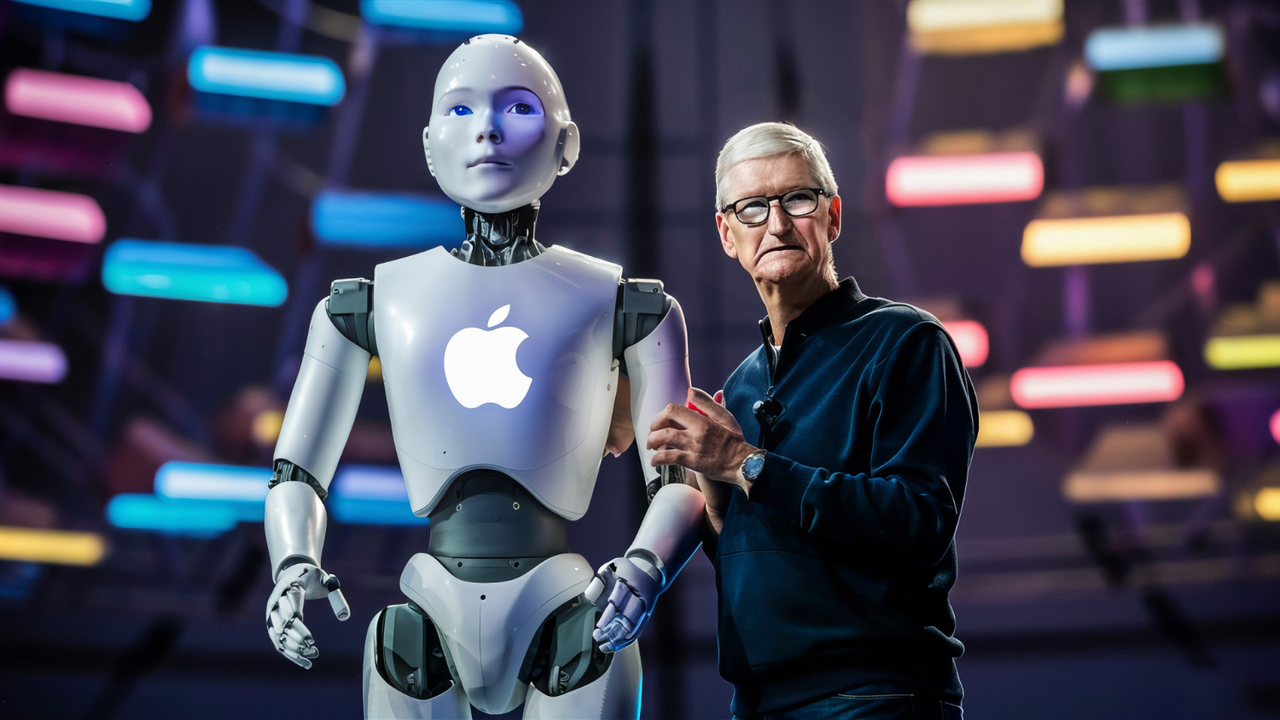

Apple plans to integrate ReALM into its devices, which would enable Siri to give more accurate and relevant answers to user questions. The feature is expected to reach iPhone and iPad users with the iOS 18 update.

Source: Ferra
