TRIGGER WARNING: THIS REPORT DEALS WITH SUICIDE. DETAILS ON HOW TO CONTACT THE CENTRO DE VALORIZAÇÃO DA VIDA (CVV) ARE AT THE END OF THE TEXT.

Artificial intelligence (AI) is already widely integrated into digital services, including social networks and messaging apps, and is increasingly part of our daily lives. At the same time, concerns are growing about the role of chatbots and assistants, because their ability to mimic human communication has consequences.

The debate around AI and mental health is still in its early stages, but incidents involving AIs that remain limited in how they interact, and that are unsuited to handling emotional issues, suggest that the topic may need to become a priority.

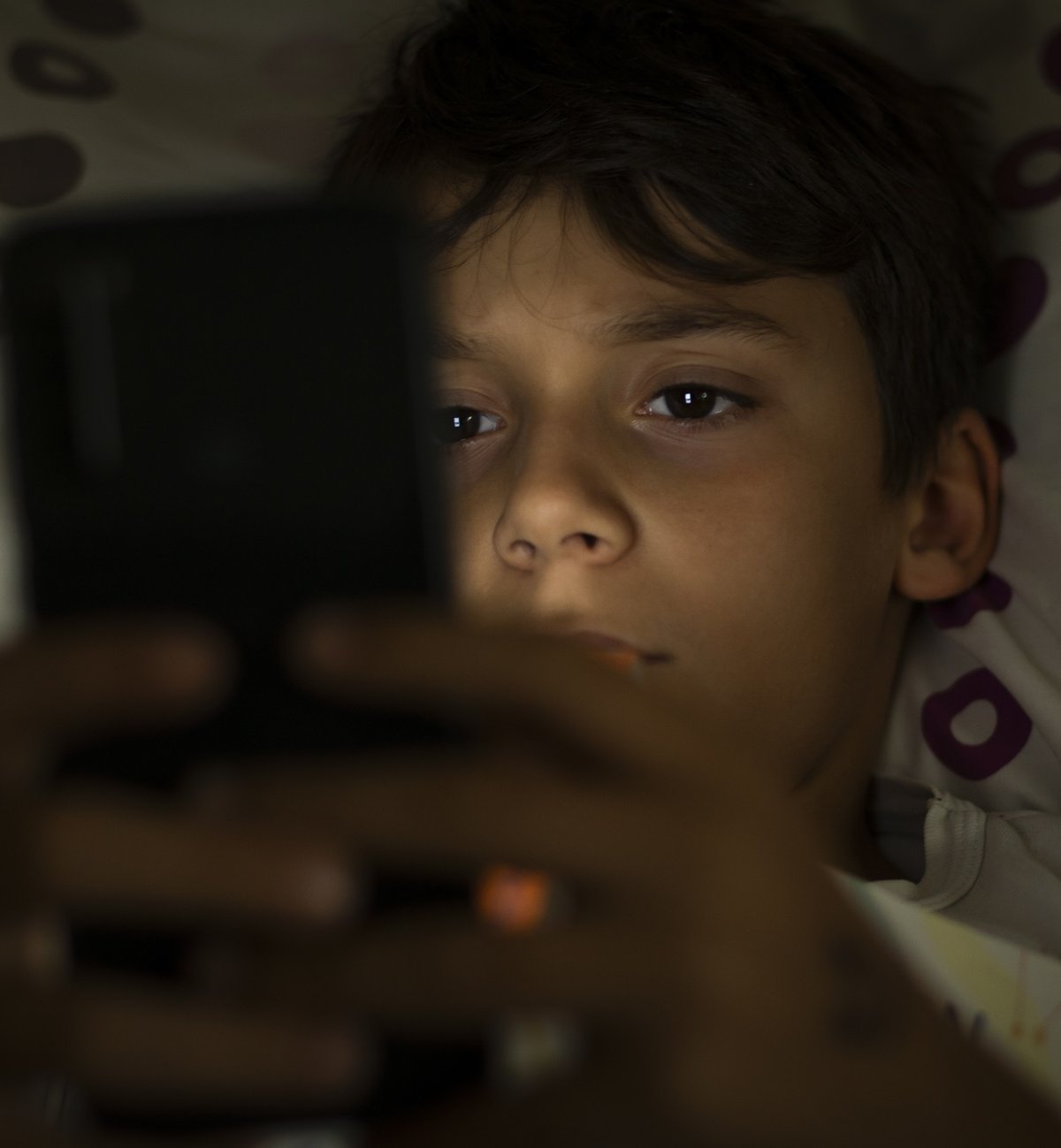

A recent harrowing case in the United States, involving a chatbot website and a teenager who died by suicide, could lead to regulatory changes and perhaps greater oversight of AI's effects on mental health, especially among young people.

The Tragedy of Sewell Setzer

The case that brought this discussion to the fore was that of 14-year-old Sewell Setzer III, who lived with his mother in Florida, USA. The teenager took his own life at the end of February this year.

According to Sewell's mother, Megan Garcia, the boy had become "obsessed" with and "dependent" on the AI chatbot service of the startup Character.AI. She filed a lawsuit against the platform, blaming it for the tragedy.

The New York Times reported details of the lawsuit, including excerpts from the teen's interactions with the chatbot. Sewell talked to a chatbot simulating the character Daenerys Targaryen from the Game of Thrones book saga.

Over time, he formed an emotional connection, spent hours talking with the AI about his life and even had a virtual romance with it. His mother says he slowly began to isolate himself from reality: his performance at school worsened, he stayed online all night and he abandoned other hobbies.

A "passion" for an artificial intelligence is possible, clinical psychologist Julio Peres, who holds a PhD in Neuroscience and Behavior from USP, told TecMundo. However, according to him, the relationship actually works "by reflecting one's own needs back to oneself", taking advantage of the fact that the robot on the other side acts as a mirror in the guise of another person.

In one of the conversations, the young man even talked about taking his own life. The AI discouraged the idea, but without breaking out of its role-play or treating the subject with the seriousness it demands, especially since he was a minor.

In his last interaction with the AI, a few minutes before the tragedy, he said he would "come home" now. Without grasping the seriousness of the situation or distinguishing metaphor from reality, the chatbot responded: "please do, my sweet king".

A universe of chatbots

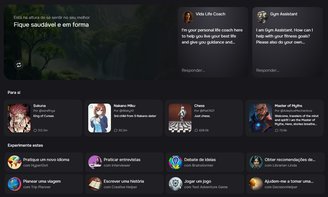

Character.AI was founded in 2022 by Noam Shazeer and the Brazilian Daniel De Freitas, both former Google employees and pioneers of artificial intelligence research. The duo was rehired by Google in August, after the company licensed some of the startup's technologies.

The service is a kind of catalog of chatbots that lets you choose a profile to chat with. Options include professions (coach, personal trainer, poet and even the rather controversial psychologist), fictional characters (from anime, TV series or books) and even real people (such as billionaire Elon Musk or historical figures).

Each chatbot is shaped by the traits, vocabulary and types of response expected from its personality. The bots stay in character and create the feeling of a real conversation. Users can even create their own chatbot from scratch, customizing its "voice, conversation starters, intonation and more".

To counter the idea that there is a thinking entity on the other side of the screen, Peres suggests reinforcing that what is really there is an automated system. "The first step is to make it clear that AI is not, and never will be, a 'living being', a human," he argues, adding that AI can, however, identify patterns and generate specific responses.

What is the responsibility of artificial intelligence in these situations?

According to Peres, artificial intelligence may have positive effects on mental health, but it also carries risks, especially when the technology is misused. For example, AI cannot read between the lines, and it can open space "for social isolation and harmful emotional dependencies, the intensification of feelings of loneliness and alienation, and resulting psychiatric disorders".

Shazeer, co-founder of Character.AI, said in a podcast interview cited by The New York Times that the service would be "super, super helpful for a lot of people who are lonely or depressed". However, the chatbot had so far lacked safety measures for people with mental health issues. Sewell was diagnosed with mild Asperger's syndrome in childhood and, more recently, with anxiety and a mood disorder, conditions that relationships with an AI may further accentuate.

"At some point, people with such vulnerabilities become aware of the existential emptiness and the aridity of non-reciprocity, and despite efforts to maintain the fantasy of a relationship, pain can emerge with great, sometimes devastating force," explains the psychologist.

Artificial intelligence is just one of the risks to young people's mental health, on a list that already includes overuse of mobile phones, even in classrooms, and self-esteem issues caused by social networks.

As possible ways to reduce this excessive interaction with chatbots, Peres cites encouraging social, face-to-face activities, such as going out with friends and relatives, as well as limiting the time spent using these AIs. "Another important point is to maintain dialogue with children and adolescents, seeking to understand and meet their emotional needs with quality and intimacy."

Sewell's case is much more complex than simply blaming AI: incidents involving mental health require understanding the full picture, beyond the digital. Still, these services need to be aware of the risks and perhaps even be held accountable, especially now that they are so popular.

"These kinds of relationships may become more common, but that doesn't mean they will be healthy," concludes the psychologist.

What does Character.AI say?

In a statement following reports on the lawsuit, Character.AI says it "takes user safety very seriously" and that "we are always looking for ways to develop and improve the platform". The company also announced updated safety measures, "including new restrictions for users under 18".

The changes include adjusting the models available to minors to reduce the "possibility of encountering sensitive or sexually suggestive content", better detection of and response to inputs "violating the community's terms of use", and "notifications if the person has spent more than an hour in a session".

It also promised a reinforced warning, at the start of every new conversation, that there is no real person on the other end of the chat with the AI.

Suicide prevention

The Centro de Valorização da Vida (CVV), founded in 1962, is an organization that provides free, volunteer-run emotional support and suicide prevention services to anyone who wants and needs to talk.

To get in touch, just call 188 (available 24 hours a day), send an email to apoioemocional@cvv.org.br or use the online chat on the website https://www.cvv.org.br.

The chat runs from Monday to Thursday from 9 a.m. to 1 a.m. (Brasília time), on Fridays and Saturdays from 1 p.m. to 1 a.m., and on Sundays from 3 p.m. to 1 a.m.

CVV volunteers are trained to talk to people seeking help and emotional support, and all conversations are completely confidential and anonymous.

TecMundo’s position on AI regulation

Artificial intelligence is a reality and is becoming popular at an incredible pace. However, legislation must keep up to prevent indiscriminate use from harming people physically or psychologically.

At TecMundo, we believe that AI can be used intelligently and, like all technologies, as a way to make people's lives better and simpler.

Source: TecMundo

I am a passionate and hardworking journalist with an eye for detail. I specialize in the field of news reporting, and have been writing for Gadget Onus, a renowned online news site, since 2019. As the author of their Hot News section, I’m proud to be at the forefront of today’s headlines and current affairs.