Our access to Western neural networks such as ChatGPT is restricted. So let’s download an alternative ourselves and run it on our own computer.

Progress in neural networks, especially open ones that are independent of global commercial platforms, cannot be stopped. Why pay someone or hand your conversations over to the internet when you can have your own neural network chatbot?

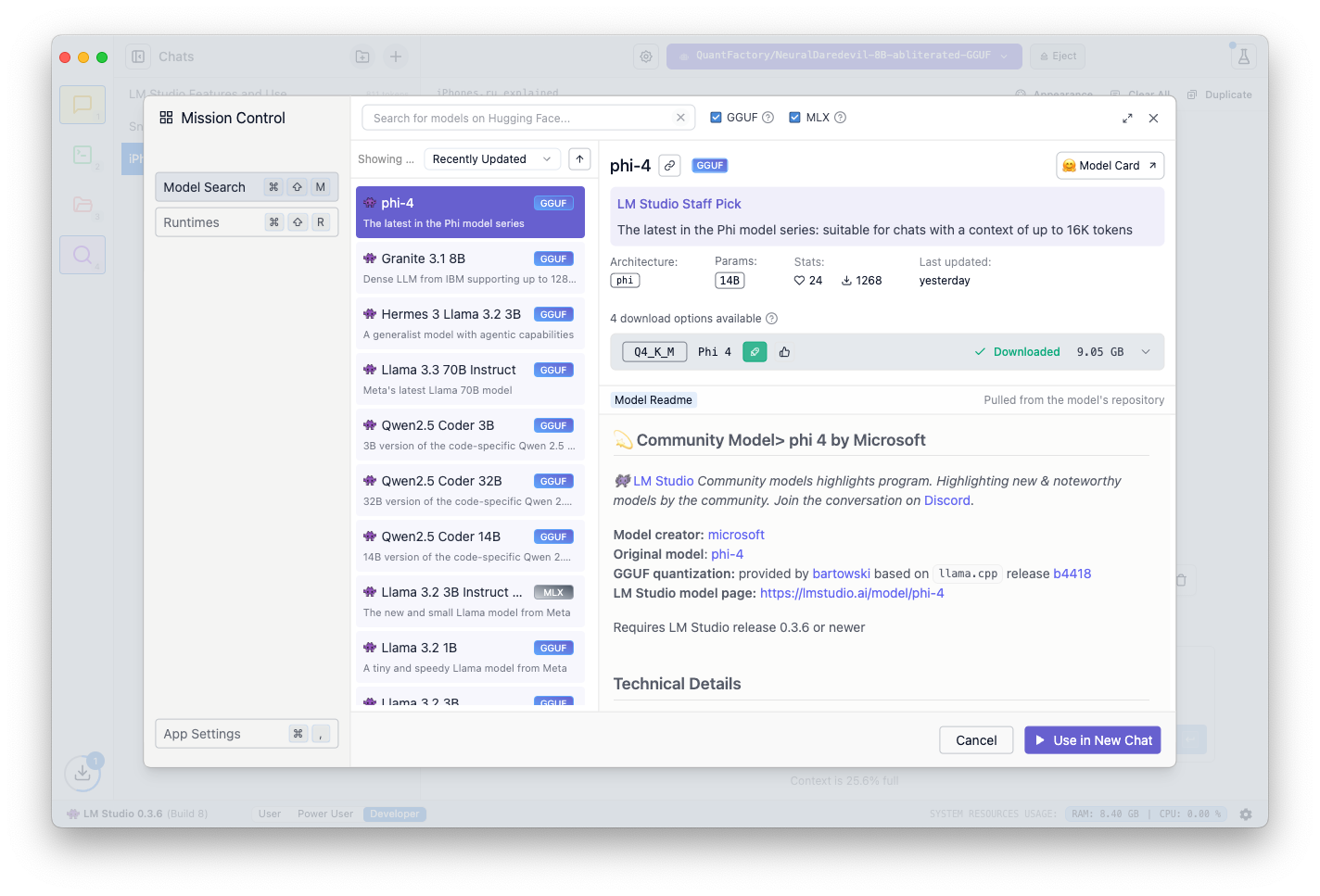

I previously wrote about LM Studio and what this program offers to almost any Mac or PC owner. In the comments, you asked for more examples of AI chatbots, so that is what this article covers.

What is LM Studio, briefly

LM Studio is a free application that runs neural network chatbots directly on your computer. Apple even mentioned it in its presentation of Macs with M4 chips.

In LM Studio, all actions, conversations and other data are stored only on your device. Nothing is sent online. It is 100% your own AI assistant, and you do not have to pay for it, unlike ChatGPT and many other online neural networks and chatbots.

You simply download an LLM (a file containing an already trained chat neural network), load it in LM Studio and use it however you like, or, more precisely, as far as your computer’s power allows. The interface is available in Russian, and some models even understand Russian.
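Besides the chat window, LM Studio can serve a loaded model to other programs through a local server with an OpenAI-compatible API. Below is a minimal sketch, assuming the server is started in LM Studio on its default address http://localhost:1234 and that "phi-4" is replaced with the identifier LM Studio shows for your loaded model. The helper names `build_chat_request` and `ask_local_model` are hypothetical.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port 1234.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(model: str, prompt: str, temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat completion payload for a locally loaded model."""
    return {
        "model": model,  # identifier of the model loaded in LM Studio (placeholder)
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def ask_local_model(model: str, prompt: str, base_url: str = BASE_URL) -> str:
    """Send the prompt to the local server and return the model's reply text."""
    payload = json.dumps(build_chat_request(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    # The request goes to localhost only; nothing leaves your computer.
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a model loaded and the server running, `ask_local_model("phi-4", "Explain in one sentence what an LLM is.")` returns the reply as a string. Because the request only goes to localhost, this stays as private as the chat window.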

What you need for LM Studio

To get started:

1. Go to the LM Studio website (it may not open from Russia; I will post the Mac version in our Telegram channel).

2. Download the program for your OS.

3. Install and open it.

You can run LM Studio and install the simplest AI chatbot even on a basic Mac with an M1 chip and 8 GB of RAM. However, for better speed and a longer memory of the conversation history, a Mac with 16 GB of RAM or more (up to… infinity) is recommended.

PCs are a different story, because their RAM capacity is far less limited. To use capable bots comfortably, you need either an Nvidia graphics card with at least 8 GB of its own memory or as much regular RAM as possible. LM Studio can really use 100 or even 500 GB of RAM; it all depends on how advanced and demanding the particular neural network is.
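A common rule of thumb behind memory figures like these: a model’s weights take roughly (number of parameters × bits per weight ÷ 8) bytes, plus some headroom for the chat context and the program itself. Below is a minimal sketch. The 20% headroom factor and the function names are assumptions for illustration, not LM Studio’s own calculation.

```python
def estimate_weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 10**9 bytes)."""
    # (params_billion * 10**9) parameters * (bits_per_weight / 8) bytes each,
    # divided by 10**9 bytes per GB: the 10**9 factors cancel out.
    return params_billion * bits_per_weight / 8


def estimate_total_gb(params_billion: float, bits_per_weight: float,
                      headroom: float = 1.2) -> float:
    """Weights plus an assumed 20% headroom for the context cache and runtime."""
    return estimate_weights_gb(params_billion, bits_per_weight) * headroom


def fits_in_memory(params_billion: float, bits_per_weight: float,
                   available_gb: float) -> bool:
    """Rough check of whether a quantized model fits into the available RAM/VRAM."""
    return estimate_total_gb(params_billion, bits_per_weight) <= available_gb
```

For instance, a 3B model at 4 bits comes out at 1.5 GB of weights (about 1.8 GB with headroom), while a 32B model at 4 bits needs about 16 GB of weights (about 19 GB with headroom). Real quantized files also store scaling data, so their effective bits per weight are slightly above the nominal value. Treat these numbers as a lower bound.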

How to install a model: in LM Studio, click the Discover icon (a magnifying glass on a screen) and pick any of the popular options offered. You can also paste the address of any other LLM from the Hugging Face website into the search field.
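Hugging Face model pages follow the pattern huggingface.co/owner/model, and the owner/model pair is the part of the address that identifies the model. Below is a small sketch that pulls this identifier out of a copied address. The helper `hf_repo_id` is hypothetical, and it assumes a model page URL rather than a dataset or Space page.

```python
from urllib.parse import urlparse


def hf_repo_id(address: str) -> str:
    """Extract the 'owner/model' repository id from a Hugging Face model URL."""
    parsed = urlparse(address.strip())
    if parsed.netloc not in ("huggingface.co", "www.huggingface.co"):
        raise ValueError(f"not a Hugging Face URL: {address}")
    # Drop empty segments caused by leading/trailing slashes.
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"no owner/model in URL: {address}")
    # Anything after owner/model (e.g. /tree/main) is ignored.
    return f"{parts[0]}/{parts[1]}"
```

For example, `hf_repo_id("https://huggingface.co/microsoft/phi-4")` returns `"microsoft/phi-4"`.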

Let’s look at five good, broadly useful models that handle a variety of tasks on basic and mid-range Mac and PC configurations.

1. phi-4

A new model from Microsoft, released in December 2024. It was created in collaboration with OpenAI, the developers of ChatGPT. Its goal is to achieve maximum response quality with minimal model size.

Strengths: math problems and calculations in general. Commercial use is permitted. Requires at least 12 GB of RAM.

🤖 This model is on Hugging Face

2. Qwen2.5 Coder

A popular model for programmers, designed for generating, analyzing and debugging code. It is one of the best open LLMs and is constantly praised and recommended on forums.

It is also good because it comes in many variants: a lightweight one, Qwen2.5 Coder 3B, runs even on a PC with 6 GB of RAM, while the most advanced one, Qwen2.5 Coder 32B, requires about 24 GB of RAM.

🤖 This model is on Hugging Face

3. Llama 3.2 3B Instruct 4bit

One of the smallest models. It launches and runs quickly on almost any PC and on any Mac with an M1 chip or newer.

Suitable for asking general questions and giving it simple tasks. Ideal as a starter model for getting to know the capabilities (and typical limitations) of local LLM chatbots. Requires only 4 GB of RAM.

🤖 This model is on Hugging Face

4. NemoMix Unleashed 12B or ArliAI RPMax 12B

Two of the best “gaming” models for a wide range of queries. Suitable for role-play (RP), conversation on broad topics, answering questions and simple entertainment. Each has a huge memory reserve for the chat history: more than 100 thousand tokens.

These models need at least 12 GB of video memory or RAM to run well, and if you set the chat memory above 12 thousand tokens, hardware no weaker than an RTX 3080 or M4 Pro is recommended.
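Why does a longer chat memory call for stronger hardware? Besides the weights, the model keeps a key/value cache for every token in the context, and that cache grows linearly with context length. Below is a rough sketch under two assumptions: a Mistral-NeMo-style 12B configuration (40 layers, 8 key/value heads, head dimension 128), which these NeMo-based models are assumed to share, and an unquantized 16-bit cache. The function names are hypothetical.

```python
def kv_cache_bytes(n_tokens: int, n_layers: int = 40, n_kv_heads: int = 8,
                   head_dim: int = 128, bytes_per_value: int = 2) -> int:
    """Approximate key/value cache size in bytes for a given context length."""
    # Factor 2: one key vector and one value vector are stored
    # per layer, per key/value head, per token.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * n_tokens


def kv_cache_gb(n_tokens: int, **config) -> float:
    """Same estimate in GB (1 GB = 10**9 bytes); config overrides the defaults."""
    return kv_cache_bytes(n_tokens, **config) / 1e9
```

Under these assumptions, each token costs 163,840 bytes. So 12 thousand tokens of history take about 2 GB on top of the weights, and 100 thousand tokens take about 16 GB, which is why a long context quickly outgrows a 12 GB card.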

🤖 NemoMix Unleashed 12B on Hugging Face

🤖 ArliAI RPMax 12B on Hugging Face

5. NeuralDaredevil 8B abliterated

One of the fastest general-purpose models. Within a limit of 4 thousand tokens, its response speed and quality are on par with most of the models above. Suitable for general answers.

Handles requests and responses in English well. Runs smoothly offline with 12 GB of RAM or more.

🤖 This model is on Hugging Face

P.S. There are already more than two hundred LLMs, and it is impossible to test them all properly. If you already use LM Studio, please recommend your favorite models in the comments.

Source: iPhones.ru

I am a professional journalist and content creator with extensive experience writing for news websites. I currently work as an author at Gadget Onus, where I specialize in covering hot news topics. My written pieces have been published on some of the biggest media outlets around the world, including The Guardian and BBC News.