Researchers at Apple and MIT may have discovered why DeepSeek is much more energy-efficient than its competitors: the use of a phenomenon known as “sparsity”. In a study published on arXiv last Saturday (25), the experts explain how the technique allows models to deliver good results without requiring too much computation.

Sparsity is a well-known approach to optimizing artificial intelligence based on machine learning. The term covers several methods, one of which is deactivating the parts of the neural network that should not affect the expected result, which appears to be what DeepSeek does.

DeepSeek's models can switch large parts of the neural network on and off without affecting the quality of the outputs. Among these parts are the so-called “parameters”, the components of neural networks that convert inputs into text. The more parameters are used, the greater the processing demand.
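To make the idea concrete, here is a minimal sketch of sparse activation, assuming a mixture-of-experts-style layer in which a small gate picks the top-k experts for each input. It is an illustration only, not DeepSeek's code: the function `sparse_moe_forward`, the names `gate_w` and `expert_ws`, and all sizes are hypothetical.

```python
import numpy as np

def sparse_moe_forward(x, gate_w, expert_ws, k=2):
    """Send one input vector through only the top-k experts (hypothetical layer)."""
    logits = gate_w @ x                       # one routing score per expert
    top = np.argsort(logits)[-k:]             # indices of the k highest scores
    weights = np.exp(logits[top] - logits[top].max())
    weights /= weights.sum()                  # softmax over the selected experts only
    # Only the selected experts are evaluated; the rest stay switched off.
    out = sum(w * (expert_ws[i] @ x) for w, i in zip(weights, top))
    return out, top

# Toy sizes chosen for illustration, not taken from any real model.
rng = np.random.default_rng(0)
d, n_experts, k = 8, 16, 2
x = rng.normal(size=d)
gate_w = rng.normal(size=(n_experts, d))
expert_ws = rng.normal(size=(n_experts, d, d))

y, active = sparse_moe_forward(x, gate_w, expert_ws, k)
total_params = expert_ws.size                 # parameters stored across all experts
active_params = k * d * d                     # parameters actually used for this input
print(f"active expert parameters: {active_params} of {total_params}")
```

In this toy setup only 2 of the 16 experts run for a given input, so the computation per input scales with the active parameters rather than with the total stored in the model.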

https://www.youtube.com/watch?v=nq2j5rb2ubu

In “Parameters vs FLOPs: Scaling Laws for Optimal Sparsity for Mixture-of-Experts Language Models”, the Apple and MIT researchers examine how model performance can change when this kind of sparsity technique is applied.

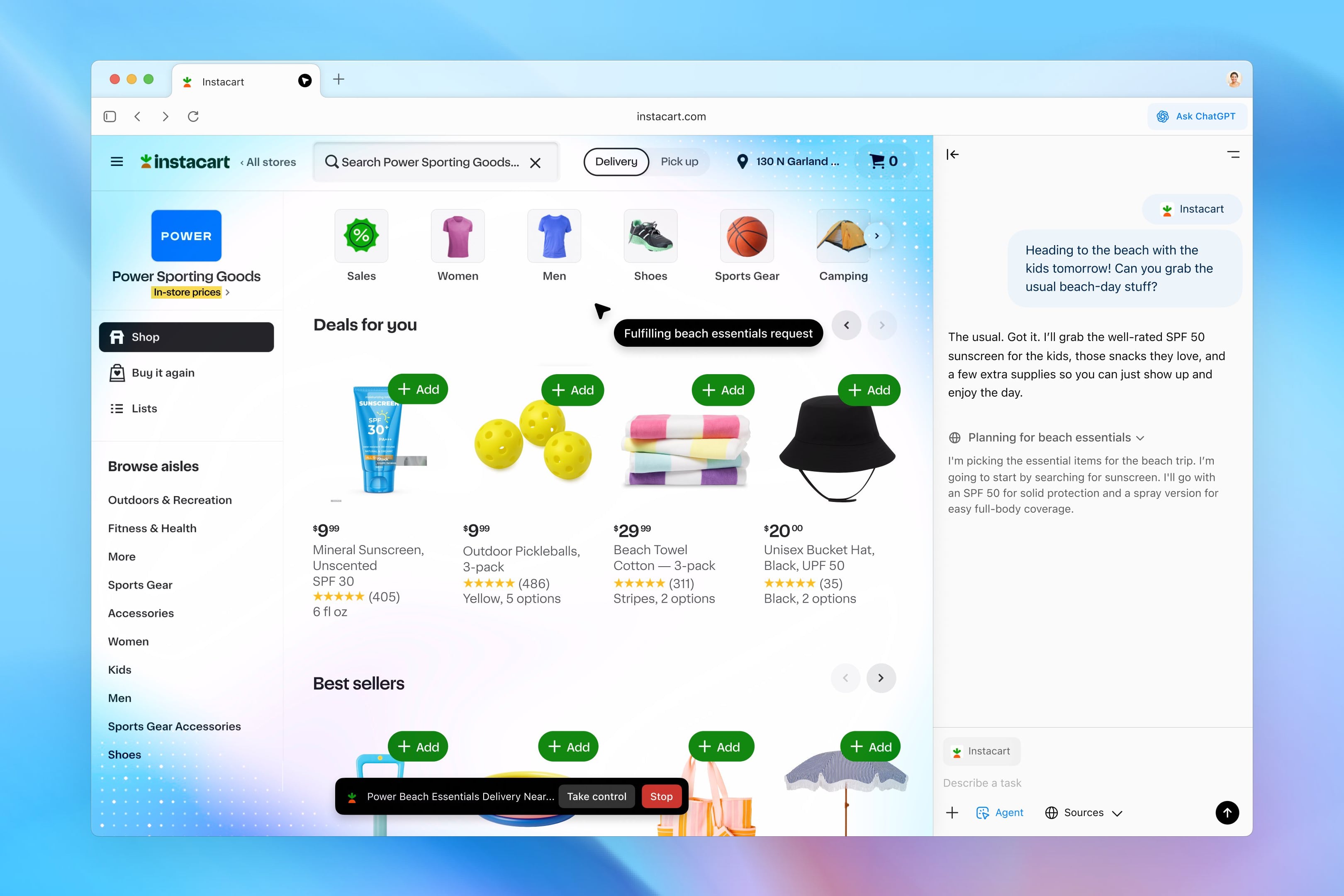

DeepSeek says that its new DeepSeek-R1 can match OpenAI's o1 on various logical reasoning and programming benchmarks.

The paper tries to find out whether there is an optimal level of sparsity for generative models such as DeepSeek. This optimal point would serve as the best balance between computational demand (energy and processing) and the quality of the results. The paper concludes that this is possible.

Although it was published in 2025, the study was carried out using MegaBlocks, a code library released in 2023 by researchers from Microsoft, Google and Stanford. Even so, the theory can be applied to more recent technologies such as the Chinese model.

Sparsity is not new

Sparsity is not a new concept, and it has been applied in the technology sector for years. One of the companies that has embraced it is Intel, which has for years regarded the phenomenon as key to success in the sector.

In DeepSeek's case, the sparsity method was applied well. As Professor Tongliang Liu points out on the University of Sydney website, DeepSeek adopted a new technique to reduce the model's total parameters and continue development with only that reduced set.

“As a result, [DeepSeek's models] needed much less training than a traditional approach.”

Sparsity has a wide variety of applications, including enabling advances in research at lower cost and with less computation. In DeepSeek's case, it may have allowed the model to be more efficient than its Western counterparts while still delivering results that are interesting and useful for the end user.

You can read the full paper in the arXiv repository.

Source: Tec Mundo
