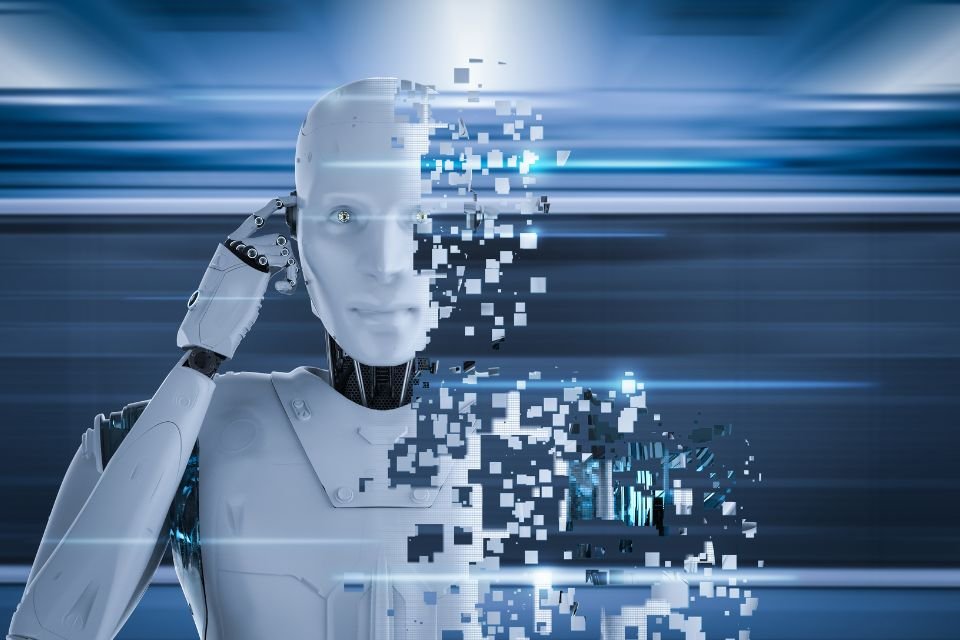

Artificial intelligence is gaining more and more ground among internet users and is used in many fields and tasks, from the simplest to the most complex. But did you know that it is possible for AI to ‘hallucinate’?

Hallucination is no longer a uniquely human phenomenon. As AI replicates human abilities such as speech, facial recognition, and decision making ever more closely, it also inherits some of their flaws. Hallucination is one of them, and it can lead to false conclusions or even dangerous consequences.

It is important to understand what is meant by “hallucination” in the context of artificial intelligence. Hallucination refers to an AI’s tendency to produce results that do not fit reality or the context in which it was trained. In other words, AI can produce results that look right but are actually completely wrong or even ridiculous.

What are the most common mistakes in AI?

There are several reasons why AI may hallucinate. In most cases, it is because the AI was trained with a limited dataset or in a limited environment. For example, if an AI is trained to identify animals, but only with images of cats and dogs, it may have difficulty identifying other animals such as birds or fish. It may also identify something as an animal when there is no animal in the image at all.
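The cats-and-dogs example can be sketched in a few lines. This is only an illustration under stated assumptions: the two-number "features", the nearest-centroid classifier, and the `max_distance` threshold are all hypothetical and stand in for whatever a real image model would learn. The point is that a model trained only on two classes will always answer with one of them unless it is given a way to say it does not know.

```python
import math

# Hypothetical 2-D feature vectors; the numbers are made up for illustration.
TRAINING_DATA = {
    "cat": [(0.9, 0.2), (0.8, 0.3), (0.85, 0.25)],
    "dog": [(0.4, 0.8), (0.5, 0.9), (0.45, 0.85)],
}


def centroid(points):
    """Return the mean point of a list of 2-D points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (sum(xs) / len(xs), sum(ys) / len(ys))


CENTROIDS = {label: centroid(pts) for label, pts in TRAINING_DATA.items()}


def classify(features):
    """Return the closest known label and the distance to its centroid."""
    label = min(CENTROIDS, key=lambda name: math.dist(features, CENTROIDS[name]))
    return label, math.dist(features, CENTROIDS[label])


def classify_with_rejection(features, max_distance=0.3):
    """Like classify, but answer 'unknown' for inputs far from all training data."""
    label, distance = classify(features)
    return label if distance <= max_distance else "unknown"


bird = (0.1, 0.1)  # nothing like this was seen during training
print(classify(bird)[0])              # forced to pick one of the two known labels
print(classify_with_rejection(bird))  # "unknown"
```

The rejection threshold is one simple design choice among many; it only shows that giving a model an explicit "I don't know" option is what keeps it from confidently mislabeling unfamiliar inputs.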

The most common mistakes

Learning from missing or biased data

If the data used to train an AI model is incomplete or biased, the model may draw conclusions that reflect patterns in the training data rather than reality.
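One quick way to spot this kind of problem is to look at how the training labels are distributed. The sketch below uses a made-up, heavily imbalanced label list and a hypothetical helper, `majority_baseline`, to show that a model which always gives the most common answer can still score well on such data without having learned anything about reality.

```python
from collections import Counter


def majority_baseline(labels):
    """Return the most common label and the accuracy of always predicting it."""
    counts = Counter(labels)
    label, count = counts.most_common(1)[0]
    return label, count / len(labels)


# Hypothetical, imbalanced training labels (illustrative only).
training_labels = ["cat"] * 95 + ["bird"] * 5

label, accuracy = majority_baseline(training_labels)
print(f"Always answering '{label}' is right {accuracy:.0%} of the time")
```

If a trained model barely beats this baseline, its apparent accuracy probably says more about the dataset than about the model.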

Poor feature or algorithm selection

If an algorithm is poorly adapted to the problem at hand, it can start producing results that don’t make sense, which is a kind of artificial intelligence hallucination.

Lack of transparency and interpretability

Some complex AI models can be difficult to understand and interpret. When users do not have a clear understanding of how a model produces its results, unexpected outputs and hallucinations are more likely to go undetected.

Identifying signs

It may seem difficult to tell when an AI is ‘hallucinating’, because some results appear reasonable and therefore go unnoticed. But there are some steps that can help. To detect AI hallucinations, check the following:

- Ensure that the input data used by the AI model is correct and relevant;

- Verify that the assumptions underlying the AI model are correct;

- Check if AI model outputs match reality;

- Perform rigorous testing to evaluate the accuracy and reliability of the AI model, including validation, cross-validation, precision and robustness testing;

- Make sure the AI model can explain its outputs in a clear and understandable way.

In general, recognizing AI hallucinations requires a systematic approach and a clear understanding of the data, algorithms, and assumptions underlying the model.
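The "check if outputs match reality" step above can be partly automated whenever a trusted reference is available. The following is a minimal sketch, not a complete fact-checking method: the function name `find_mismatches`, the question strings, and the exact-match comparison are all assumptions made for illustration.

```python
def find_mismatches(model_outputs, reference):
    """Flag answers that contradict, or cannot be checked against, a trusted reference."""
    report = {}
    for question, answer in model_outputs.items():
        expected = reference.get(question)
        if expected is None:
            report[question] = "unverified"  # nothing to check against
        elif answer.strip().lower() != expected.strip().lower():
            report[question] = "mismatch"    # contradicts the reference
    return report


# Hypothetical trusted reference and model answers.
reference = {
    "capital of France": "Paris",
    "boiling point of water at sea level in Celsius": "100",
}
model_outputs = {
    "capital of France": "paris",
    "boiling point of water at sea level in Celsius": "90",
    "tallest building on Mars": "Olympus Tower",
}

for question, status in find_mismatches(model_outputs, reference).items():
    print(f"{status}: {question}")
```

Answers marked "unverified" are not necessarily wrong, but they are the ones that most need a manual check, since nothing confirms them.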

What to do when AI starts hallucinating

One option is to improve the training of AI so that it has access to a larger and more diverse dataset.

Fine-tuning the AI’s parameters can also help reduce the likelihood of hallucinations. For example, the size of the training set or the number of training iterations can be adjusted so that the model generalizes better.

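Another approach is to use cross-validation, which involves splitting the dataset into several parts and taking turns holding one part out for testing while training on the rest. Because the model is always evaluated on data it did not see during training, this shows whether it generalizes or has merely memorized its training data, which makes hallucination-prone models easier to catch.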
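As a rough illustration of cross-validation, the sketch below splits a toy dataset into k folds and reports accuracy on each held-out fold. Everything here is an assumption made for the example: the helper names, the one-dimensional data, and the simple threshold "model" passed in as `fit` and `predict`. In practice a library routine would normally be used instead.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k roughly equal, disjoint folds
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, folds[i]


def cross_validate(samples, labels, k, fit, predict):
    """Train on k-1 folds, test on the held-out fold, and return per-fold accuracy."""
    scores = []
    for train, test in k_fold_indices(len(samples), k):
        model = fit([samples[i] for i in train], [labels[i] for i in train])
        correct = sum(predict(model, samples[i]) == labels[i] for i in test)
        scores.append(correct / len(test))
    return scores


def fit_threshold(xs, ys):
    """Toy model: the midpoint between the mean 'low' value and the mean 'high' value."""
    low = [x for x, y in zip(xs, ys) if y == "low"]
    high = [x for x, y in zip(xs, ys) if y == "high"]
    return (sum(low) / len(low) + sum(high) / len(high)) / 2


def predict_threshold(threshold, x):
    return "high" if x > threshold else "low"


# Hypothetical, well-separated toy data.
samples = [1, 2, 3, 4, 5, 11, 12, 13, 14, 15]
labels = ["low"] * 5 + ["high"] * 5

scores = cross_validate(samples, labels, 5, fit_threshold, predict_threshold)
print(scores)
```

A large gap between accuracy on the training data and accuracy on the held-out folds is the warning sign to look for: it suggests the model has fitted quirks of its training set rather than the underlying pattern.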

It is also important to monitor the AI model closely for any signs of hallucination. This may include regularly reviewing the results generated by the AI and performing manual checks to make sure they are accurate and reliable.

It is important to remember that artificial intelligence is a constantly evolving technology and hallucination can be a natural part of this process. However, with proper care and oversight, AI can be an incredibly powerful and useful tool in a wide variety of industries.

Source: Tec Mundo
