Don’t believe everything you see: AI has already begun to deceive us, and it is more dangerous than it seems

An AI-generated video led several tourists to travel to a place that does not exist!

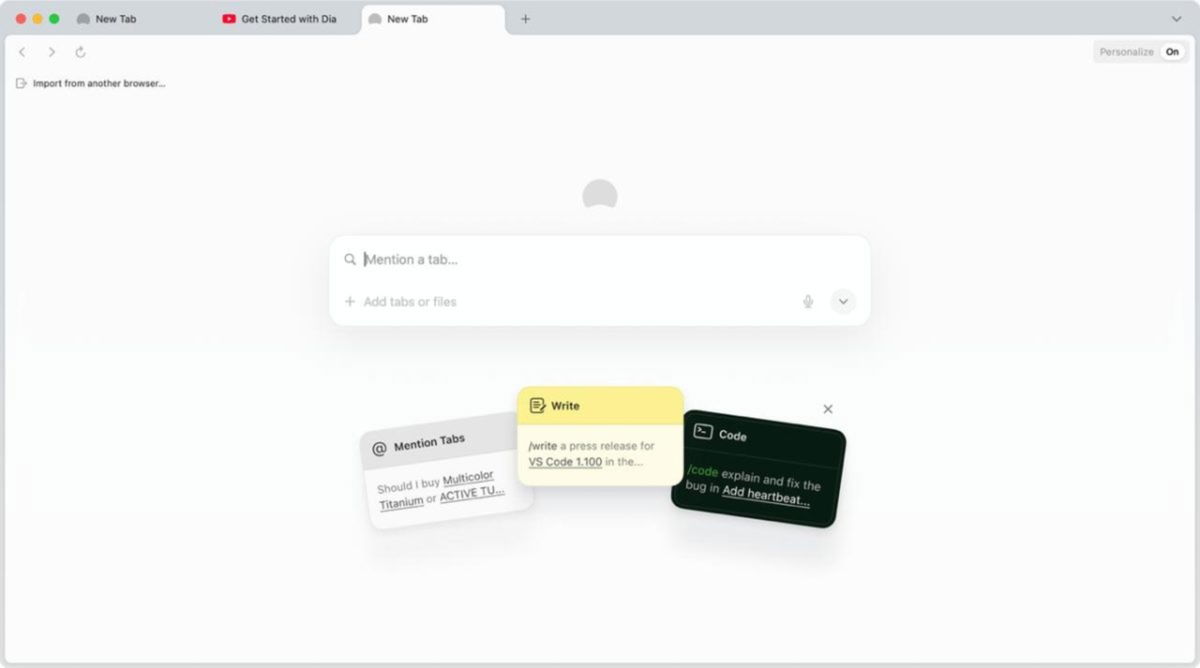

Artificial Intelligence (AI) has advanced by leaps and bounds, providing tools that can create incredible content. From photorealistic images, such as those made with Apple Intelligence, to convincing videos, such as those made with OpenAI’s Sora, generative AI is redefining the limits of what is possible. But like every new technology, it is not free of harm, which invites us to question how it is being used.

As our colleagues at Andro4all reported, a recent event in Malaysia revealed the concrete risks of AI-generated content. Apparently, many people fell victim to this digital illusion and traveled to the supposed tourist attractions.

Fake trips create real emotions

It all started when a video published by a YouTube channel called “TV Rakyat” went viral. It showed a cable car running through lush mountains, dining with panoramic views and even a deer zoo in the town of Kuak Hulu. The production was very detailed and realistic, including an AI-generated reporter and a presentation that simulated a real news report. Many people believed it.

An elderly couple traveled more than 370 kilometers from Kuala Lumpur to the supposed tourist attraction. However, when they arrived and asked about it, they ran into a harsh truth: everything was probably a digital fabrication created with tools like Google Veo. A local hotel employee described the couple’s disbelief on learning that not only the cable car but even the reporter in the video was an AI creation.

Another user reported that their parents spent more than 2,000 euros renting a minibus to make the same trip. They too had been deceived by the viral video, which was removed after the public scandal. These events show that fake trips create real emotions and can cause real financial losses, opening an urgent debate on the dark side of generative AI.

So real it is worrying

The convincing nature of this content, which can manipulate our perception of reality, is what makes it so dangerous. It undoubtedly creates an ideal setting for fraudsters looking for new victims, whether through cloned voices in scam calls or videos of non-existent places.

The solution to this growing problem is not simple. Experts point to the need for clear regulation that establishes limits and responsibilities. In addition, mandatory labeling of AI-generated content could help users tell what is real. Perhaps most important, however, is digital education, especially for those who are less familiar with new technologies, so that they can develop a critical eye and the tools to verify the content they consume online.

Source: iPadizate

I’m Blaine Morgan, an experienced journalist and writer with over 8 years of experience in the tech industry. My expertise lies in writing about technology news and trends, covering everything from cutting-edge gadgets to emerging software developments. I’ve written for several leading publications including Gadget Onus where I am an author.