Anthropic's Claude 3.7 coped better than the others, followed by Claude 3.5, while Google's Gemini 1.5 Pro and OpenAI's GPT-4o showed weak results.

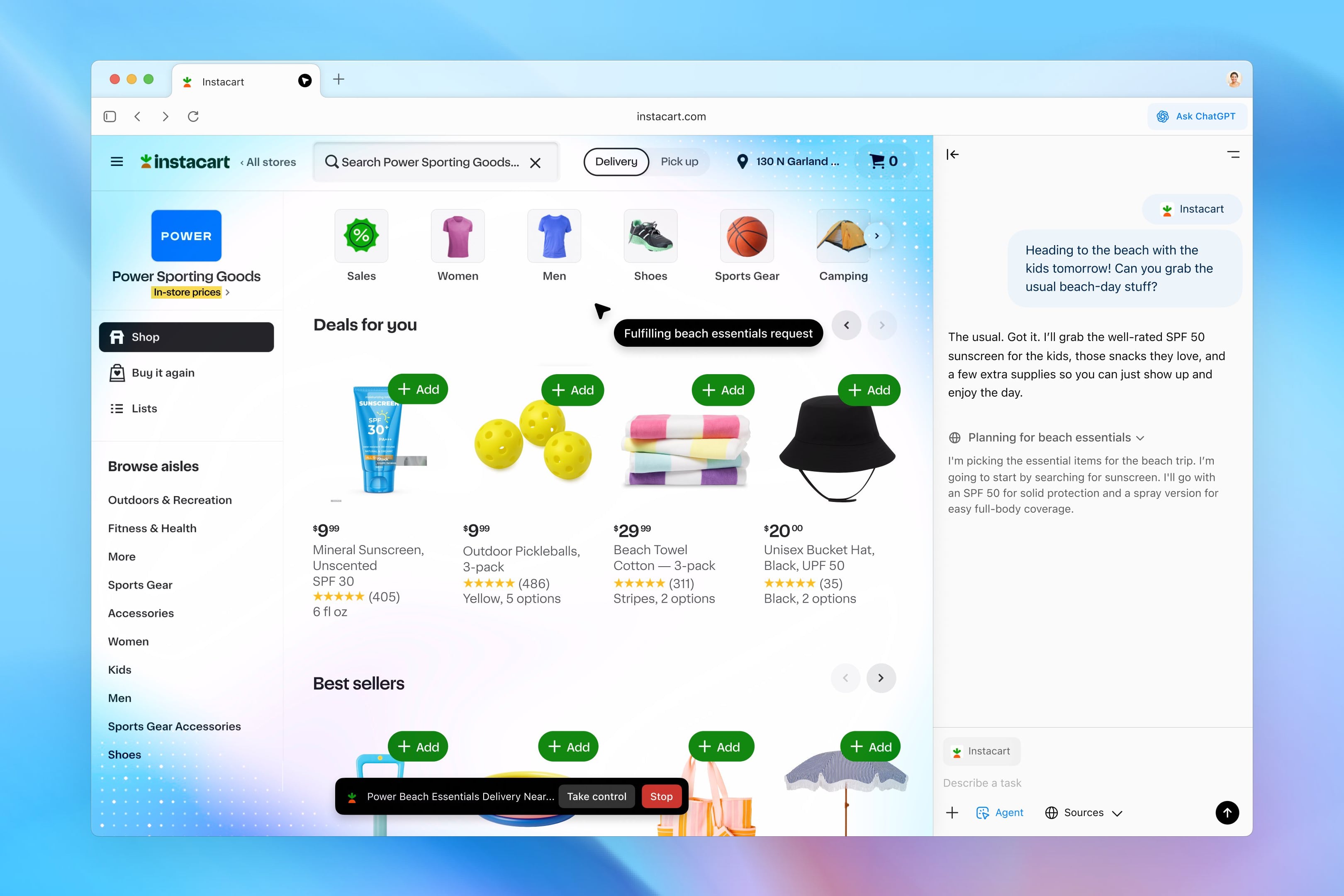

The AI played a modified version of the game through the GamingAgent framework, which passes emulator data and basic commands (such as “obstacle close”) to the model. The AI then controls Mario by generating Python code.
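To make that loop concrete, here is a minimal sketch of the idea, not the framework's actual code. Every name in it (`ToyController`, `toy_model`, `step`) is a hypothetical stand-in: a real setup would query a language model and drive an emulator, while this toy version returns a canned snippet and records button presses in a list.

```python
from dataclasses import dataclass, field


@dataclass
class ToyController:
    """Hypothetical stand-in for an emulator's input interface."""
    pressed: list = field(default_factory=list)

    def press(self, button: str) -> None:
        # Record the button instead of sending it to a real emulator.
        self.pressed.append(button)


def toy_model(hint: str) -> str:
    """Hypothetical stand-in for the AI: returns Python source as text."""
    if "obstacle close" in hint:
        return "controller.press('jump')"
    return "controller.press('right')"


def step(controller: ToyController, hint: str) -> None:
    """One turn: send the hint to the model, then run the code it returns."""
    code = toy_model(hint)
    # The generated snippet only sees the controller object.
    exec(code, {"controller": controller})


controller = ToyController()
for hint in ["path clear", "obstacle close", "path clear"]:
    step(controller, hint)

print(controller.pressed)  # ['right', 'jump', 'right']
```

The point of the sketch is the division of labor: the framework supplies a short description of the situation, and the model answers with code rather than with a button name.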

Interestingly, models that use “logical” reasoning performed worse than intuitive ones. The reason is slow reaction time: in a dynamic game, a one-second delay can cost the victory.
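A rough calculation shows why the delay matters. The sketch below assumes the game runs at 60 frames per second, a figure chosen for illustration and not taken from the article, and the helper name `frames_elapsed` is hypothetical.

```python
def frames_elapsed(latency_seconds: float, fps: int = 60) -> int:
    """Number of game frames that pass while the model is still deciding.

    The default of 60 frames per second is an assumption for illustration.
    """
    return int(round(latency_seconds * fps))


# A model that takes one second to respond misses 60 frames of action.
slow = frames_elapsed(1.0)
# A model that responds in a tenth of a second misses only 6.
fast = frames_elapsed(0.1)

print(slow, fast)  # 60 6
```

Under that assumption, a model that deliberates for a full second acts on a screen that is dozens of frames out of date, which is enough time for an obstacle to reach Mario.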

Although games have long been used to test AI, experts doubt their objectivity. For example, Andrej Karpathy, one of OpenAI's founders, called it an “evaluation crisis.”

Source: Ferra
