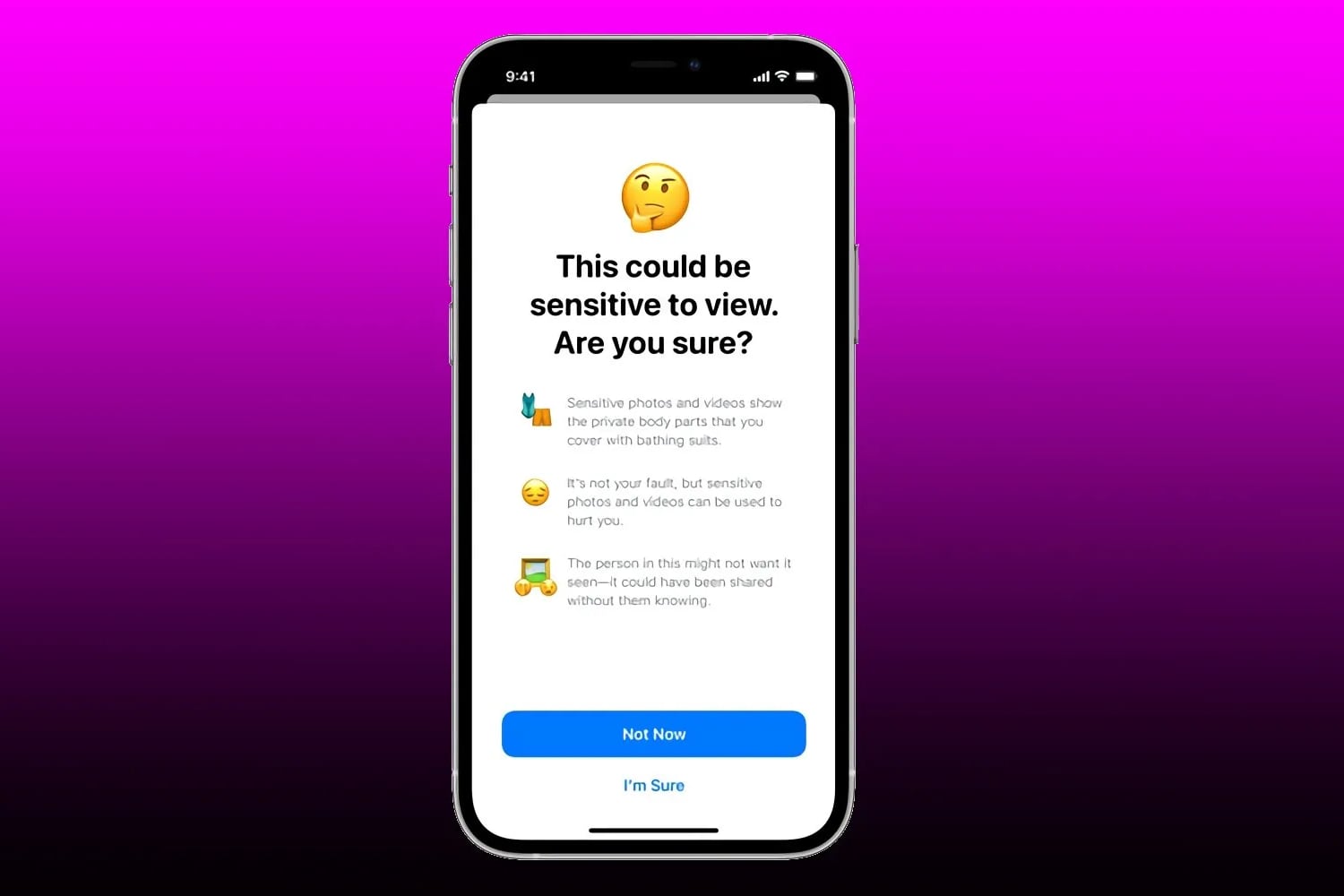

It’s been over a year since Apple announced a feature that would automatically detect child sexual abuse material (CSAM) in photos on the iPhone.

Apple planned to add the feature to iOS 15 by the end of 2021. However, the company eventually postponed the launch after criticism from users, experts and advocacy groups.

In December 2021, Apple removed all references to the feature from its website, although a company spokesperson said its plans had not changed. Apple has not commented on the launch since.

Apple intended to scan only photos being uploaded to iCloud, and all processing was to take place on the iPhone itself. Each uploaded image would be hashed, and the system would compare that hash against the hashes of known child abuse images.

If an account accumulated many matches, a human reviewer would check the flagged images. If the reviewer confirmed that the photos were illegal, Apple would pass information about the iPhone's owner to a non-profit organization that works with US law enforcement. [Macrumors]
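To make the matching-and-threshold idea concrete, here is a minimal Python sketch, not Apple's implementation. It assumes SHA-256 as a stand-in hash (Apple's published design used a perceptual hash called NeuralHash, plus cryptographic protections this sketch leaves out), and the threshold value and the names `scan_uploads`, `image_hash` and `MATCH_THRESHOLD` are hypothetical, since the article only says "many matches".

```python
import hashlib
from dataclasses import dataclass

# Hypothetical threshold: the article only says "many matches".
MATCH_THRESHOLD = 3


def image_hash(image_bytes: bytes) -> str:
    """Stand-in hash (assumption). A perceptual hash such as NeuralHash
    tolerates resizing or recompression; SHA-256 only matches
    byte-identical files."""
    return hashlib.sha256(image_bytes).hexdigest()


@dataclass
class ScanResult:
    matches: int              # how many uploads matched a known hash
    needs_human_review: bool  # True once the match count reaches the threshold


def scan_uploads(uploads: list[bytes], known_hashes: set[str],
                 threshold: int = MATCH_THRESHOLD) -> ScanResult:
    """Hash each photo queued for iCloud upload, count matches against
    the database of known hashes, and flag the account for human review
    only when the count reaches the threshold."""
    matches = sum(1 for img in uploads if image_hash(img) in known_hashes)
    return ScanResult(matches=matches, needs_human_review=matches >= threshold)
```

For example, with `known = {image_hash(b"known-1"), image_hash(b"known-2")}`, calling `scan_uploads([b"known-1", b"holiday"], known)` finds one match and does not trigger review. The threshold reflects the design choice described above: a single match is not enough to involve a human reviewer.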

Source: iPhones.ru