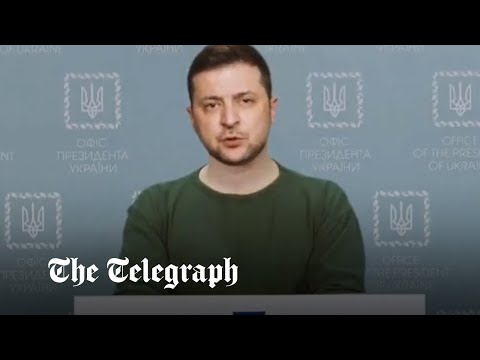

It’s no longer just the Pope wearing Balenciaga. Last March, a video circulated of Ukrainian President Volodymyr Zelensky announcing his unconditional surrender. It is his face and his voice. Almost simultaneously, another video was published in which Russian President Vladimir Putin announced that peace had been achieved. The videos are not real. They are deepfakes: fakes created using artificial intelligence. But they still went viral as if they were real news.

A new study has tried to measure the dangers of these manipulations in times of war, and it uses these two videos of Putin and Zelensky as its main examples. “The Russian-Ukrainian war represents the first real example of the use of deepfakes in war,” with a series of content that is “used for misinformation and entertainment,” the researchers explained in their report published this week in PLOS ONE.

Researchers from University College Cork (UCC) chose Twitter for the analysis, the social network on which, according to the team, the most notable deepfake cases are shared. The images of Donald Trump’s false arrest and the picture of the Pope went viral on the platform. They analyzed a set of 4,869 tweets published between January 1, 2022 and August 1, 2022.

Deepfake-related content increased steadily during the week before the start of the war and the first weeks of the conflict between Ukraine and Russia. After studying the messages, the analysts identified three main trends. Two of them point to the use of these falsifications for disinformation (fake news) or to create humorous content (for example, a montage of Putin in a scene from The Lord of the Rings).

Deepfakes during war breed skepticism

But the study pays particular attention to the third of these trends: how, paradoxically, warnings about deepfakes breed skepticism among users. In other words, people stopped trusting legitimate news because they suspected it was fake.

Many tweets in the dataset expressed “healthy skepticism” towards deepfakes; that is, they came from users who warned about the dangers of such practices. “Unfortunately, most of the conversations about deepfakes during the war consisted of unhealthy skepticism,” the study report notes.

The researchers attribute this to fear of deepfakes, which undermined users’ trust in the images of the conflict they received, to the point that they dismissed any information related to it. “We can no longer always trust our eyes” and “It’s not that this video is fake, but we need to consider all possibilities” are two examples given in the analysis.

They also said that the argument about the spread of these fakes was used against journalism and verified media and, in the same way, to fuel conspiracy theories. “It highlights how deepfake discourse can be used in arguments that undermine the truthfulness and reliability of the media,” the researchers note.

In search of reliable information

In the tweets examined, real media content was labeled as fake more often than actual deepfakes related to the war were. “We found that people were using the term deepfake as a buzzword to attack people online,” John Twomey, a researcher in the UCC School of Applied Psychology and co-author of the study, told Gizmodo.

The world’s largest technology companies, developers of the most powerful artificial intelligence tools, have realized the danger. “We need to take steps to protect against legitimate content being modified to trick or deceive people through the use of AI,” Brad Smith, president of Microsoft, said last May.

Microsoft and other companies such as Google have introduced tagging tools that can check whether an image or video was created by artificial intelligence. The new study, however, warns that some of the well-intentioned media coverage of the dangers of deepfakes may inadvertently contribute to the deterioration of trust.

“More people are aware of deepfakes than deepfakes are actually prevalent,” says Twomey. He explains that the media and journalists will have to be very careful in how they label suspected deepfakes so as not to arouse suspicion towards real media.

Source: Hiper Textual

I am Garth Carter and I work at Gadget Onus. I have specialized in writing for the Hot News section, focusing on topics that are trending and highly relevant to readers. My passion is to present news stories accurately, in an engaging manner that captures the attention of my audience.