Apple has teamed up with researchers at the University of California to release MGIE, an open-source artificial intelligence model.

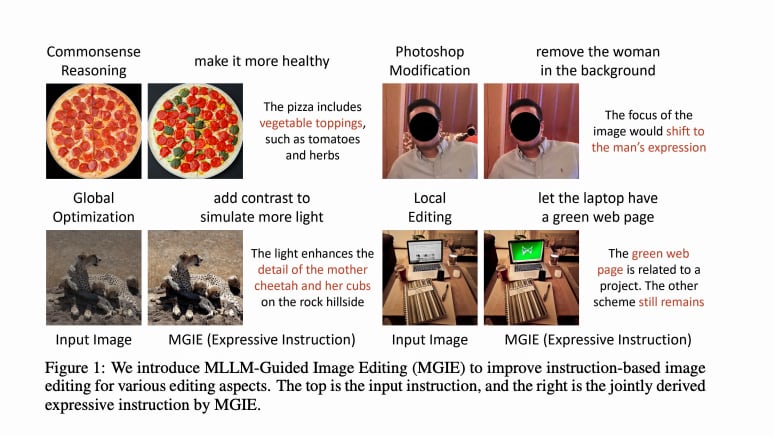

MGIE edits photos based on text commands. It uses multimodal large language models (MLLMs) to interpret user commands and, at the same time, recognize objects in images at the pixel level.

The AI can adjust contrast, exposure, sharpness and color balance, as well as crop and rotate photos. It can also handle more complex tasks, such as editing only a specific object or region in the picture.

For example, you can say “remove the woman from the background,” and MGIE will carefully cut out the person and fill the gap with background.

MGIE can also add objects. If you give it a photo of a pizza and the command “make it healthier,” MGIE will add vegetable toppings such as tomatoes and herbs.

Another example: if you write the command “make the sky bluer,” MGIE interprets it as an explicit instruction to increase the saturation of the sky region by 20%.
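To make that concrete, here is a minimal sketch of what "increase the saturation of the sky by 20%" can mean as a pixel operation. It is not MGIE's code: MGIE is a learned model, and the function name `boost_saturation`, the hand-written `sky_mask` and the tiny two-pixel image are all hypothetical, chosen only for illustration. The sketch uses Python's standard `colorsys` module.

```python
import colorsys


def boost_saturation(pixels, mask, factor=1.2):
    """Return a copy of `pixels` with saturation scaled by `factor` where `mask` is True.

    pixels: rows of (r, g, b) tuples with values 0-255
    mask:   rows of booleans with the same shape (True = pixel belongs to the region)
    Hypothetical helper for illustration; not part of MGIE.
    """
    out = []
    for row, mask_row in zip(pixels, mask):
        new_row = []
        for (r, g, b), selected in zip(row, mask_row):
            if selected:
                # Convert to HSV, scale saturation (capped at 1.0), convert back.
                h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
                s = min(1.0, s * factor)
                r, g, b = (round(c * 255) for c in colorsys.hsv_to_rgb(h, s, v))
            new_row.append((r, g, b))
        out.append(new_row)
    return out


# A 1x2 "image": one pale-blue sky pixel and one brown ground pixel.
image = [[(150, 180, 230), (120, 90, 60)]]
sky_mask = [[True, False]]  # assumed mask; a real system would have to find the sky itself

edited = boost_saturation(image, sky_mask)
print(edited)
```

Only the masked pixel changes: its hue and brightness stay the same while the color moves further from gray. The hard part, which a model like MGIE has to handle and this sketch does not, is turning a vague phrase into such an instruction and locating the sky in the first place.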

MGIE is available on GitHub, where anyone can find the code and pre-trained models.

You can also try the AI on the Hugging Face Spaces website. MGIE supports only English, and a command currently takes several minutes to complete because of the large number of users on the platform. [VentureBeat]

Source: Iphones RU
