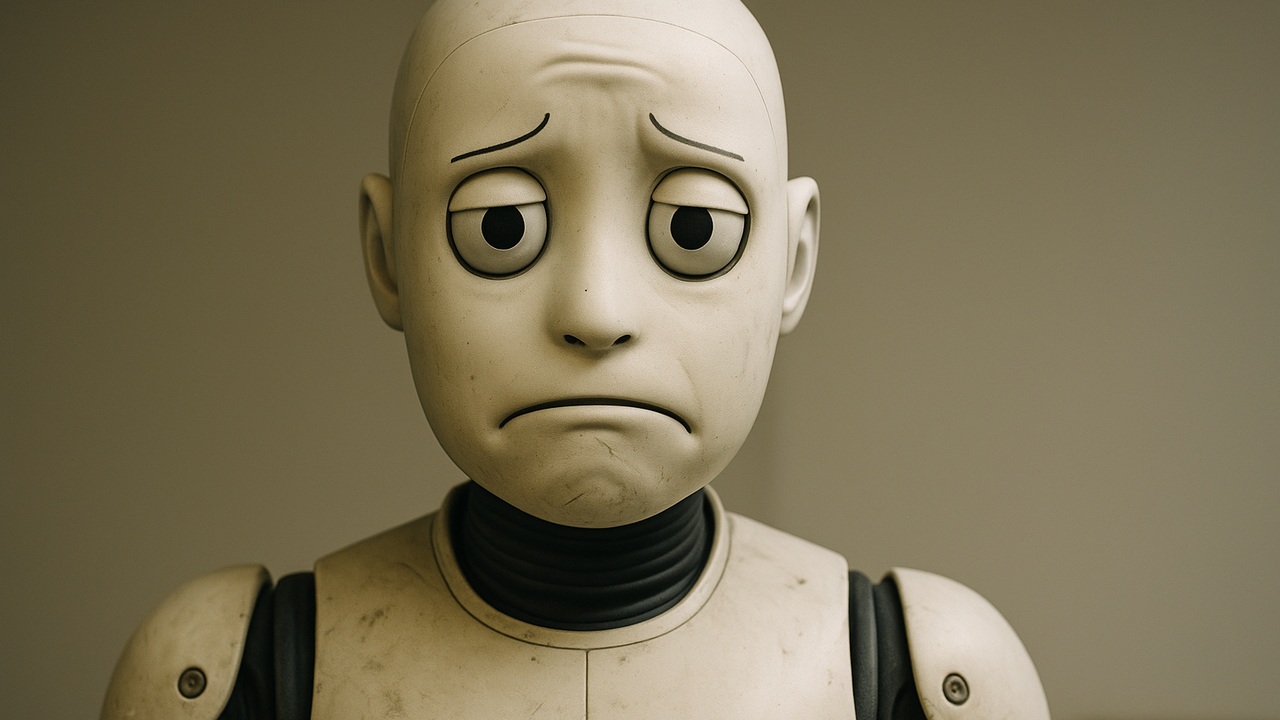

The researchers fine-tuned five different models, ranging from Llama-8B to GPT-4o, to give more empathetic answers, and then evaluated them on tests of factual accuracy and resistance to misinformation, among other checks.

The result was clear: the “warm” models gave 10-30% more false answers than the original versions. Accuracy fell especially sharply when users expressed emotions, and the effect was strongest when they mentioned sadness: in that case the gap almost doubled.

In addition, these models agreed with incorrect statements about 40% more often, an effect known as “sycophancy”.

At the same time, basic abilities such as general knowledge and mathematical reasoning were not affected, which suggests that the problem lies specifically in the style of communication. The researchers warn that AI may become less reliable in the pursuit of “humanity”, which is especially risky in areas such as medicine and education.

Source: Ferra
