Apple has announced several new accessibility features for people with disabilities, coming to iOS 17 and macOS 14 later this year.

Many of these features rely on machine learning.

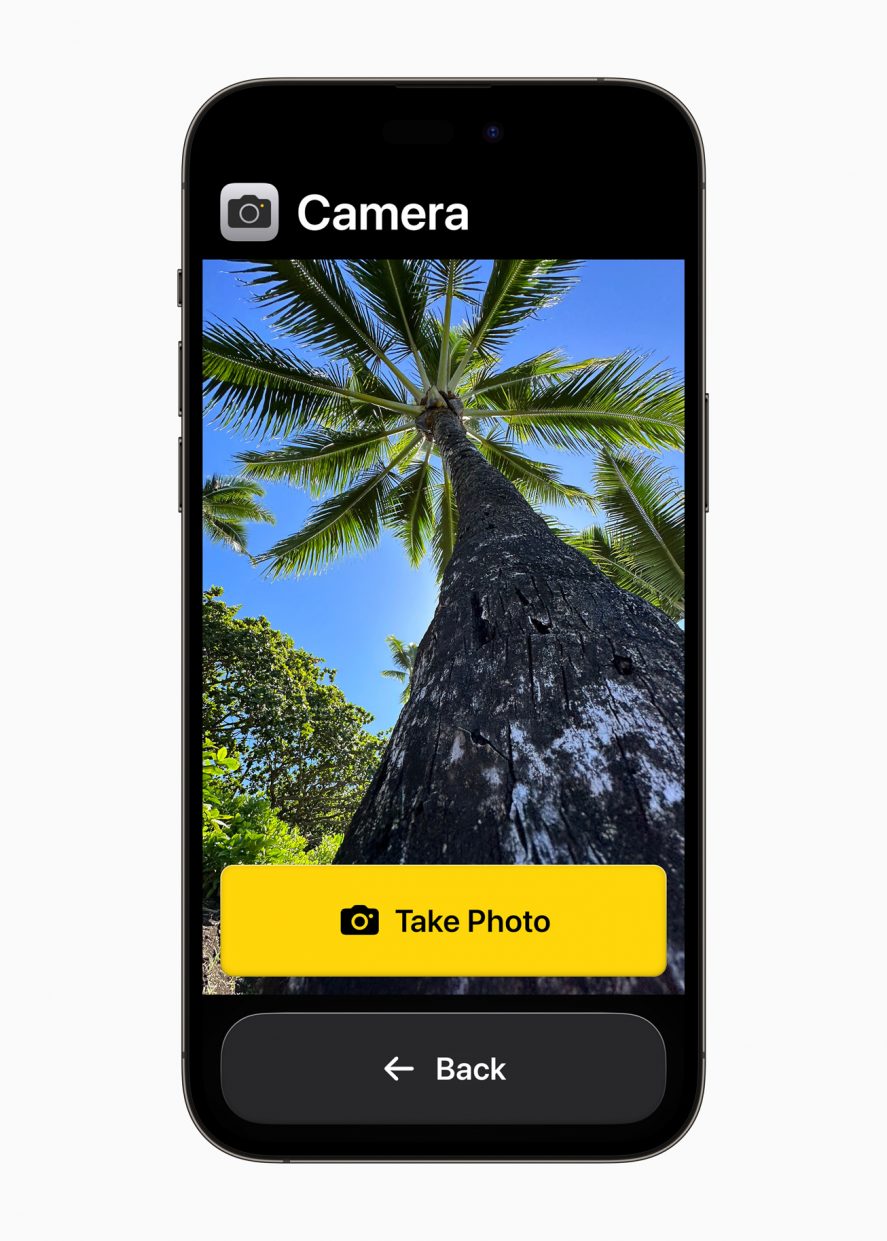

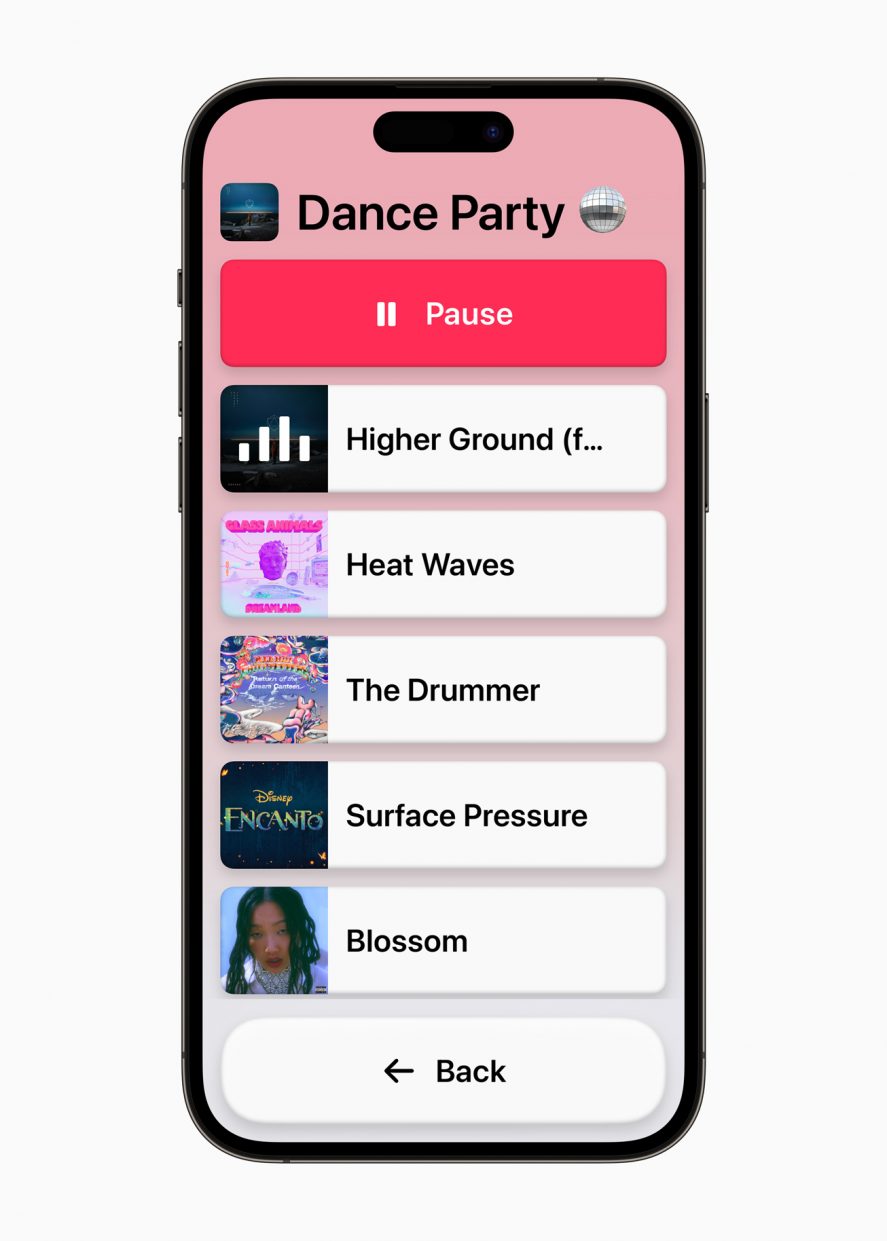

Assistive Access

Assistive Access uses a redesigned interface to reduce apps to their essential features for people with cognitive disabilities.

In this mode, the design of iOS and iPadOS changes completely. All elements become much larger, and apps show only their most essential functions.

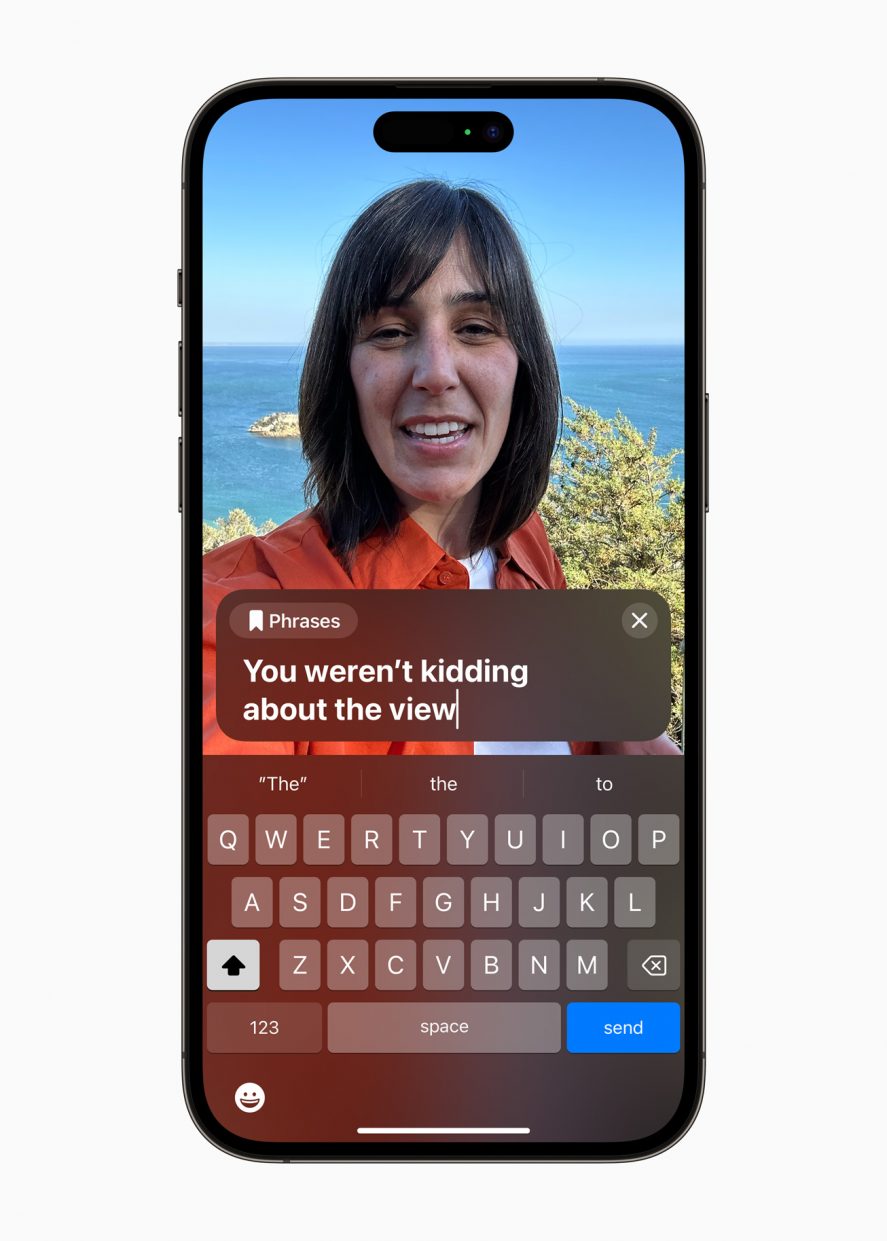

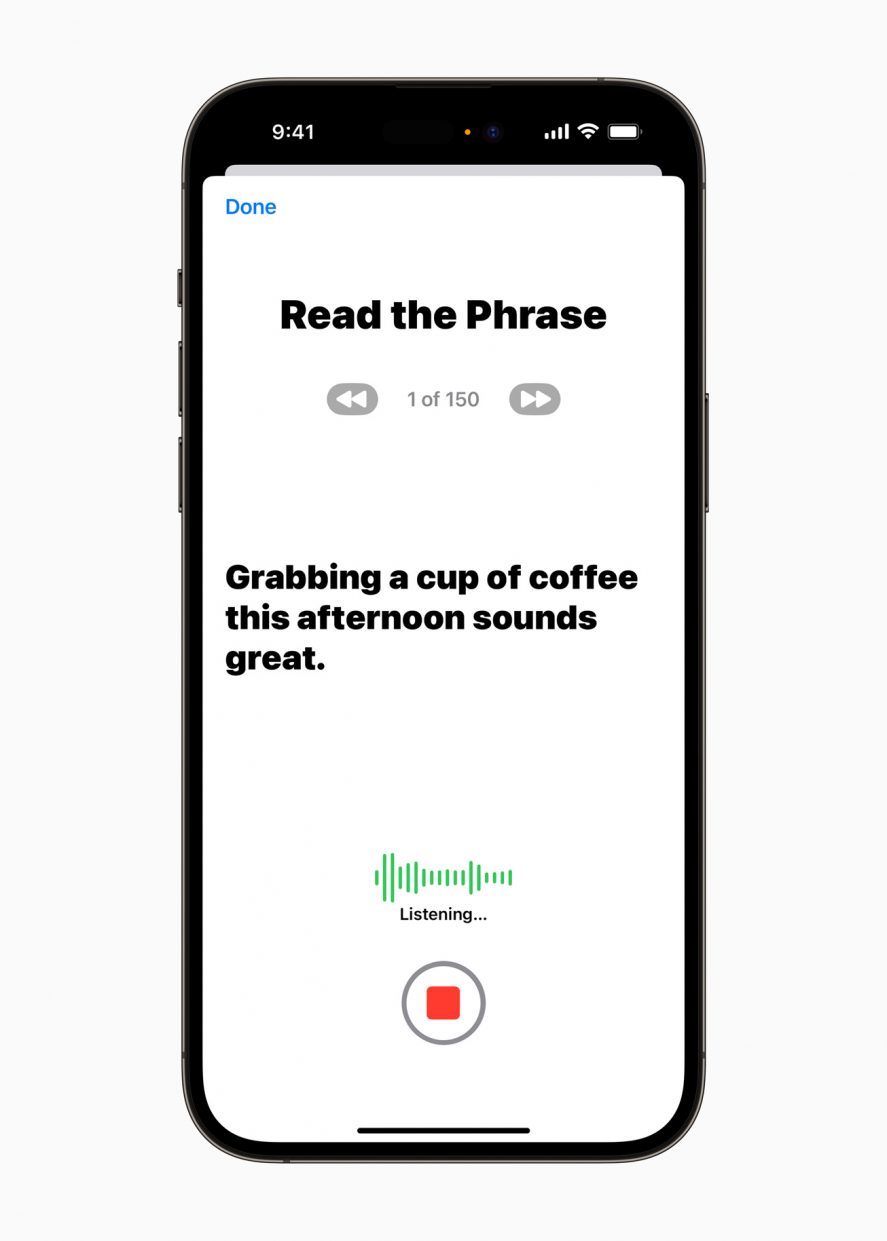

Live Speech

With the Live Speech feature, users can type text during FaceTime calls, and the system will read it aloud.

Users at risk of losing their voice can create a “copy” of their voice on iOS. To do this, they need to read aloud text prompts shown on the screen for 15 minutes.

This voice copy integrates with Live Speech, so that when users type text, it is spoken in their own voice.

Point and Speak in Magnifier

This feature uses the camera and the LiDAR scanner to detect text on objects and reads it aloud.

Other innovations

▪️ Mac owners can connect Made for iPhone hearing aids to their computer

▪️ macOS users can increase the text size in Finder, Messages, Mail, Calendar and Notes

▪️ VoiceOver users can adjust the speed at which Siri speaks, from 0.8x to 2x. [Apple]

Source: Iphones RU

I am a professional journalist and content creator with extensive experience writing for news websites. I currently work as an author at Gadget Onus, where I specialize in covering hot news topics. My written pieces have been published on some of the biggest media outlets around the world, including The Guardian and BBC News.