You can buy a profile photo of a fake person for just a few euros. You adjust the appearance to your needs: age, gender, even ethnicity is up to you. Thousands of fake profiles circulate on the Internet, and behind them are all kinds of people who want to remain anonymous. But if you look carefully, you can still spot a fake profile.

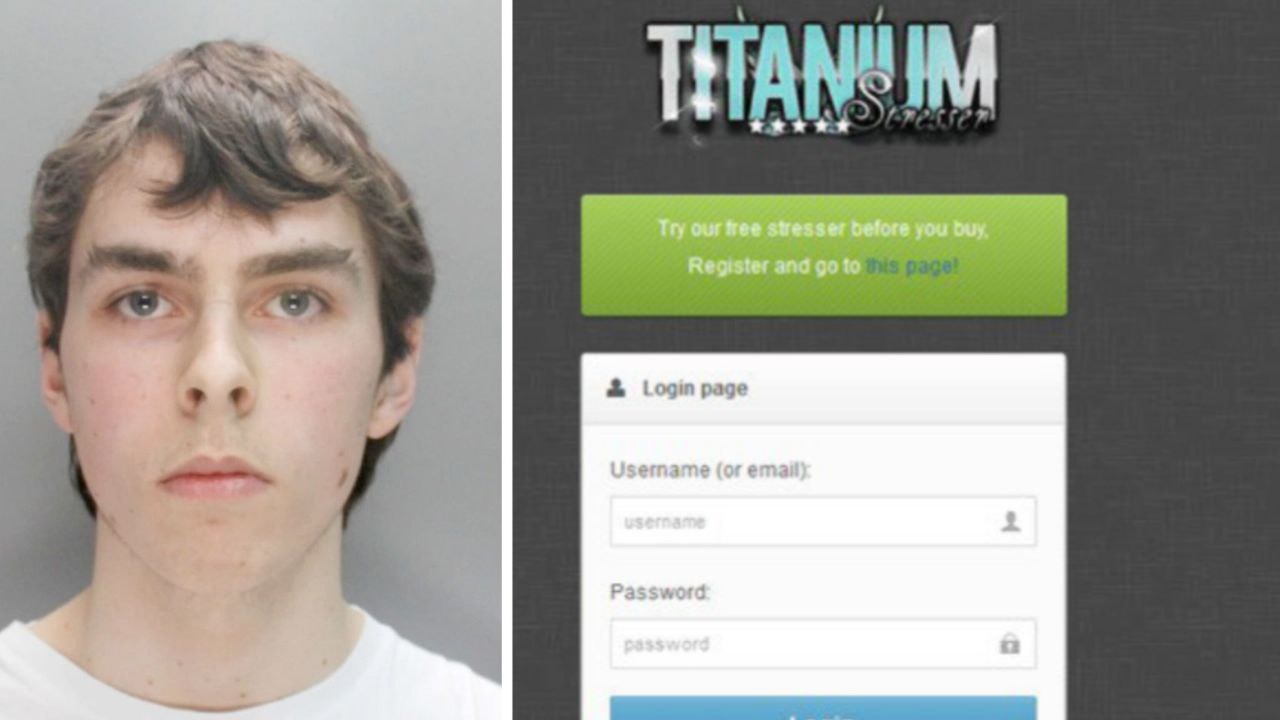

The Facebook group Convoy to Ottawa 2022 is one of the social media initiatives that, earlier this year, called for the Canadian capital to be occupied by trucks in protest against the coronavirus measures. One of the admins of this Facebook group is Jason Shoeaddict. That is possibly a pseudonym, which is odd for someone who claims to work for National Geographic TV, an organization where there is no trace of him.

What’s even weirder is that Shoeaddict’s profile picture also appears on another profile, that of Pavel Zakirov, someone posing as the hiring manager of a Russian website for freelancers. We have no idea whether Shoeaddict is a Russian troll provoking Canadian activists. What we do know is that the photos of Jason and Pavel are the product of deepfake technology. Even though they look like they could be your neighbor, there is no one in the world with the face you see in their profile photos.

GAN

Fake profiles have long been common on social media, dating sites, and recruiting platforms. They are used, for example, to give the impression that an organization has a large following. Fake profiles not only use fake names, they also tend to come with a face for the sake of credibility. Sure, you can steal an existing person’s photo, but nowadays you are quickly caught out. That’s why people prefer to use deepfake photos.

These photos are generated by a GAN (Generative Adversarial Network). A generative adversarial network is a class of machine learning algorithms in which two neural networks constantly compete with each other: one network acts as the generator, the other as the discriminator.

The generator tries to produce images that come as close as possible to the real images it is trying to emulate. The discriminator learns to distinguish between fake images and originals. StyleGAN is a generative adversarial network that Nvidia researchers introduced three years ago and made publicly available.
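The tug-of-war between generator and discriminator can be sketched in a few lines of Python. This is a toy illustration, not StyleGAN: instead of images, the "real data" here are simply numbers around 4, and both networks are reduced to a single linear formula. The function names, the learning rate and the data are all assumptions made for this example.

```python
import math
import random


def sigmoid(t):
    # Clamp the input so math.exp cannot overflow.
    t = max(-60.0, min(60.0, t))
    return 1.0 / (1.0 + math.exp(-t))


def generate(z, g):
    """Generator: turns random noise z into a fake sample (here a single number)."""
    return g["scale"] * z + g["shift"]


def discriminate(x, d):
    """Discriminator: estimated probability that x is a real sample."""
    return sigmoid(d["w"] * x + d["b"])


def train(steps=2000, lr=0.05, seed=0):
    rng = random.Random(seed)
    g = {"scale": 1.0, "shift": 0.0}   # generator parameters (toy values)
    d = {"w": 0.1, "b": 0.0}           # discriminator parameters (toy values)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)     # stand-in for a real photo
        z = rng.gauss(0.0, 1.0)
        fake = generate(z, g)

        # Discriminator step: push D(real) toward 1 and D(fake) toward 0.
        p_real = discriminate(real, d)
        p_fake = discriminate(fake, d)
        grad_w = -(1.0 - p_real) * real + p_fake * fake
        grad_b = -(1.0 - p_real) + p_fake
        d["w"] -= lr * grad_w
        d["b"] -= lr * grad_b

        # Generator step: adjust the fake so that D(fake) moves toward 1.
        p_fake = discriminate(fake, d)
        grad_fake = -(1.0 - p_fake) * d["w"]
        g["scale"] -= lr * grad_fake * z
        g["shift"] -= lr * grad_fake
    return g, d
```

Calling `train()` returns the final settings of both players. In a setup this small, the generator's shift can be expected to move toward the real values, but the two may also keep circling each other, which is a known difficulty of GAN training.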

One person per click

If you feed a large number of images into such a network, the system can generate new images of a similar type. GANs are now used not only to produce art on their own, such as panoramas of huge cities, idyllic sunsets or Escher-like structures, but also to produce music. Over time, GANs will make it possible to have images of humans speak and move naturally.

Feed a large number of portraits to a GAN, and the tool draws on A’s nose, B’s lips, C’s eyebrows and so on, and begins to create new faces on its own. Phillip Wang’s website This Person Does Not Exist constantly creates new faces of people who don’t exist. You can download any photo. By the way, there is a parody of this site for cat lovers: www.thiscatdoesnotexist.com.

Communication weapon

What once started as an innovative concept is now used as a communication weapon. Dark things happen with fake profiles. For example, according to William Evanina, director of the US National Counterintelligence and Security Center, fake profiles on LinkedIn are a risk-free way to recruit people for espionage. “Instead of sending a spy to a US parking lot to recruit a target, it’s more efficient to sit in front of a computer in Shanghai and send friend requests.”

Fake profiles are also used to influence the public. As early as 2016, Russian trolls tried to disrupt the political debate during the US elections. Since then, Twitter has been actively tracking down fake accounts and suspending them. This effort has reduced the platform’s number of users by nine million.

The best-known example in the Low Countries is Eduard van Vlugtenstahl, who currently goes by Mr. Panini on Twitter. He was the one who tweeted in the midst of the Covid crisis: “Received bad news today. My ex-father-in-law died suddenly after the second shot. Please do not ask about this. Draw your own conclusions.”

The message was immediately retweeted by a Dutch politician. It soon became clear that Van Vlugtenstahl never existed and that his profile picture was created by a GAN.

Learn to detect

Producing photos of people who don’t actually exist is a by-product. Initially, the purpose of the AI face generator was to recognize fake faces and faces in general. It’s startling how realistic the results look.

Even though deepfakes are getting better, a neural network still makes mistakes. You can discover them by examining the photo very closely, because humans’ visual processing systems are still more powerful than a computer’s. You can tell it’s a fake from a strange hair growing out of the forehead, the suddenly disappearing edge of a spectacle frame, artificial stains in the background, duplicated incisors…

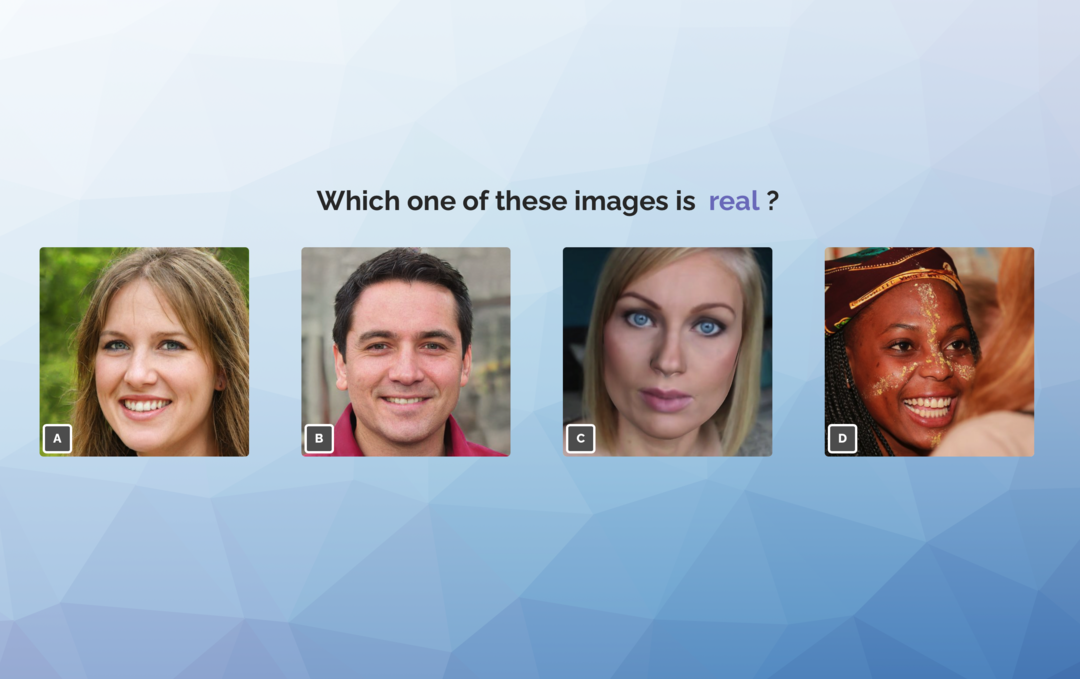

These visual errors are called glitches. You can train yourself to spot glitches at Which Face Is Real, a project by two scientists at the University of Washington. You are always shown a photo from This Person Does Not Exist next to a real photo. It’s up to you to decide which photo shows a person of flesh and blood.

You’ll find it won’t be long before you score 9 out of 10 on recognizing fake photos. You can also hone your skills as a deepfake detective in a test at Deepfact.3duniversum.

Watch the pupils

People in fake photos don’t look perfect; they’re not movie stars. They could be your colleagues. However, you can usually see with your own eyes that the photo has been generated. Regardless of the position of the head, the pupils are almost always in the same place. If you mark the eyes with gridlines in an image editing program like Photoshop, you’ll notice how unrealistic it is that the pupils sit in exactly the same spot every time.
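The gridline check can also be done with a few numbers. The sketch below assumes you have noted the pupil positions of several suspicious photos by hand, as fractions of the image width and height. The function names and the tolerance of 0.01 are illustrative choices, not an established standard.

```python
from statistics import pstdev


def pupil_spread(pupils):
    """Return the largest spread among the four pupil coordinates.

    pupils: one entry per photo, shaped ((left_x, left_y), (right_x, right_y)),
    with every value given as a fraction of the image width or height.
    """
    rows = [(lx, ly, rx, ry) for (lx, ly), (rx, ry) in pupils]
    columns = zip(*rows)  # group all left_x values together, all left_y values, ...
    return max(pstdev(column) for column in columns)


def looks_aligned(pupils, tolerance=0.01):
    """True if the pupils sit in nearly the same spot in every photo."""
    return pupil_spread(pupils) <= tolerance
```

If `looks_aligned` returns `True` for a series of portraits in which the head is turned in different directions, that is a hint, not proof, that the photos came from the same generator.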

The ears also regularly show errors of symmetry, or one shoulder is smaller than the other, or you see half a head or part of someone else’s arm in the background. There is also a detection site: www.sensity.ai. It used to be free, but now it works only on a paid basis. This service can show with some degree of certainty whether a photo is fake or not.

Produce it yourself

A fake profile is not always malicious. Sometimes it belongs to people who want to take part in political or social debates without anyone being able to hold them accountable. Yet this remains a dubious form of anonymity, as these people do not admit to being anonymous.

For certain projects and presentations, designers need rights-free photos of diverse people. That’s why Icons8 has built a photo library, Generated Photos, containing 2,681,746 people, all created by AI. You are allowed to download these photos free of copyright.

If you choose Browse photos, you can specify the background, gender, age, ethnicity, emotion and hair length for the first thirty photos in the grid. The online tool instantly updates all photos when you move the sliders.

If you want to download a 1024 × 1024 pixel JPG, it will cost $2.99 per photo. Or you pay $19.99 to get 15 photos per month. As mentioned, you can also download a photo for free, but then you cannot use it for commercial purposes and the resolution is limited to 512 × 512 pixels.

Face generator

Instead of browsing pre-generated photos, you can also create faces yourself in Face Generator. You then compose a portrait based on the settings in the left bar. You can edit the properties of this person in detail. For example, you can turn the head a little to the left or up, or have the person wear sunglasses or reading glasses. And again, you determine age, emotion, skin color and hair color. It is even possible to apply make-up to the eyes and lips. When you’re ready, you can drop the photo into the shopping cart.

Compare eye reflection

Not only are deepfakes getting better, but the tools to expose them are also getting more effective. A promising project is currently being developed by computer scientists at the University at Buffalo.

This is a new AI tool that recognizes GAN photos with 94% accuracy. The system detects fakes by analyzing the reflections on the corneas of the eyes and comparing left and right. In a photograph of a real person, the reflection in both eyes will be the same because the eyes see the same thing. Deepfake images synthesized by GANs do not reproduce exactly the same reflections. They often show inconsistencies, such as different geometric shapes or mismatched reflection positions.
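How the Buffalo tool works internally is not described here, but the idea of comparing left and right can be illustrated with a simple overlap measure. The sketch assumes the bright reflection pixels of each eye have already been marked in two equally sized grids of 0s and 1s; the function name and that input format are hypothetical.

```python
def highlight_overlap(left_eye, right_eye):
    """Overlap (intersection over union) of the reflections in both eyes.

    left_eye, right_eye: equally sized 2D lists of 0/1, where 1 marks a pixel
    that belongs to the bright reflection on the cornea.
    Returns a value from 0.0 (no overlap) to 1.0 (identical reflections),
    or None if neither eye shows a reflection at all.
    """
    shared = 0   # pixels that are bright in both eyes
    total = 0    # pixels that are bright in at least one eye
    for row_left, row_right in zip(left_eye, right_eye):
        for a, b in zip(row_left, row_right):
            if a and b:
                shared += 1
            if a or b:
                total += 1
    if total == 0:
        return None
    return shared / total
```

A value close to 1.0 fits a real photo, in which both eyes see the same light source. A low value points to the mismatched shapes or positions described above, although a threshold would have to be chosen and tested on real data.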

The work is now complete, but the application is not yet available to the public. The system proves particularly effective with the images on the This Person Does Not Exist website, as these are portrait photos that always show both eyes. If one eye is not visible, the method will not work.

Reverse lookup

Going back to our first example: Jason Shoeaddict. How did the fact-checkers find out that the profile picture of a key person in the Facebook group is the same as that of a recruiter for a Russian freelance agency? It started with healthy doubt. A reverse image search revealed that the profile photo was used twice.

One of the most popular tools for such a reverse image search is TinEye, a service with an extension for the Chrome, Firefox, Edge and Opera browsers. Drag the suspicious photo into the search box or paste its web address there.

After installing the extension, right-click on an image and select the command Search for Images on TinEye. With the Compare to TinEye function, you can quickly switch between the search image and the result images for an even better comparison.
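How TinEye matches images is not covered here, but a common textbook technique for finding identical and nearly identical photos is a perceptual hash. The sketch below shows the simple "average hash" variant. It assumes the photos have already been reduced to small grayscale grids (for example 8 × 8), and the function names are made up for this example.

```python
def average_hash(pixels):
    """Turn a small grayscale image into a list of bits.

    pixels: 2D list of brightness values (0-255), already downscaled.
    Each bit records whether a pixel is brighter than the image's average.
    """
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Number of positions at which two equally long hashes differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


def probably_same_photo(pixels_a, pixels_b, max_distance=5):
    """Rough guess: few differing bits suggest the same underlying photo.

    max_distance is an arbitrary example threshold for 8 x 8 grids.
    """
    distance = hamming_distance(average_hash(pixels_a), average_hash(pixels_b))
    return distance <= max_distance
```

Because the hash only records which areas are lighter or darker than average, a photo that has been slightly brightened or recompressed still yields nearly the same bits, while an unrelated photo differs in many positions.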

MatchEngine

TinEye also offers MatchEngine. Given its pricing model, it is mainly aimed at companies. By subscribing to it, you get your own web tool, with which TinEye finds duplicate and near-duplicate photos. It also includes special features such as fraud detection, to find photos of damage that have been submitted multiple times for insurance claims.

MatchEngine supports visual inspection and image verification for dating and social media platforms. TinEye shows the most modified, oldest, and newest versions of a photo. This allows you to see when the photo was first published and where it was later used and modified.

Source: Computer Totaal