Thanks to rapid progress in neural networks, nobody is surprised anymore that a picture can be generated from any text. We have already reviewed the two best-known systems, DALL-E 2 and Midjourney.

Recently, however, a competitor has appeared that can already replace both of these neural networks in many cases.

Stable Diffusion (page on GitHub) is the most discussed neural network of recent weeks: it generates images from a text prompt. Some already call it the most important release in this field for all of 2022. It is so powerful that it was even banned on Twitter over fears that it could be used for crime.

That is because Stable Diffusion is completely free, easy to set up and can run on almost any reasonably powerful computer. You don't have to beg to be added to a waitlist as with DALL-E 2 (users with certain IP addresses aren't added at all!). And you don't need to use someone else's sites and servers, as with Midjourney.

I spent this whole night installing Stable Diffusion on my Mac with an M1 processor and finally got it working at 5 a.m. I tested it and, to be honest, I'm still in shock. Never before have such advanced neural networks been so accessible to ordinary people.

How to install Stable Diffusion on your Mac with M1 for free

Yes, this is what Stable Diffusion looks like on an ordinary computer.

What you need to install Stable Diffusion:

▪ Mac with an M-series processor

▪ 15 GB free disk space

▪ 16 GB of RAM or more is highly desirable (with 8 GB, generation will be very slow)
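If you are not sure your Mac meets these requirements, the minimal Python sketch below checks them. It is my own illustration, not part of the project. The thresholds are taken from the list above. It assumes that an M-series Mac reports the arm64 architecture under macOS ("Darwin"). A Python running under Rosetta would report x86_64 instead.

```python
"""Rough pre-flight check against the requirements for Stable Diffusion on a Mac.

Assumptions: thresholds (15 GB free disk, 16 GB RAM) come from the article's list;
RAM is read via os.sysconf, which is available on macOS and Linux.
"""
import os
import platform
import shutil

MIN_FREE_DISK_GB = 15
RECOMMENDED_RAM_GB = 16
GB = 1024 ** 3


def check_requirements(machine: str, system: str, free_disk_gb: float, ram_gb: float) -> list[str]:
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    # Apple M-series Macs report the 'arm64' architecture under macOS ('Darwin').
    if system != "Darwin" or machine != "arm64":
        problems.append("not a Mac with an M-series (arm64) processor")
    if free_disk_gb < MIN_FREE_DISK_GB:
        problems.append(f"only {free_disk_gb:.1f} GB free, need {MIN_FREE_DISK_GB} GB")
    if ram_gb < RECOMMENDED_RAM_GB:
        problems.append(f"{ram_gb:.0f} GB RAM is below the recommended {RECOMMENDED_RAM_GB} GB: generation may be very slow")
    return problems


def current_system() -> tuple[str, str, float, float]:
    """Collect the real values for this machine (free space is measured on the home volume)."""
    free_gb = shutil.disk_usage(os.path.expanduser("~")).free / GB
    ram_gb = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / GB
    return platform.machine(), platform.system(), free_gb, ram_gb


if __name__ == "__main__":
    issues = check_requirements(*current_system())
    print("OK" if not issues else "\n".join(issues))
```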

Almost all installation steps, as well as running the neural network, are done in Terminal (Terminal.app). There is no graphical app for this neural network yet, but it's only a matter of time.

How do you install Stable Diffusion? Go to the project fork's page and follow everything written there.

What, did you expect step-by-step instructions? I get it. I deliberately don't translate the whole guide into Russian: people who aren't ready for this kind of work will just waste their time. If you doubt you have the patience, or something here scares you (English, installing Homebrew, Python and other packages in Terminal, typing commands only in English), it's better not to even start.

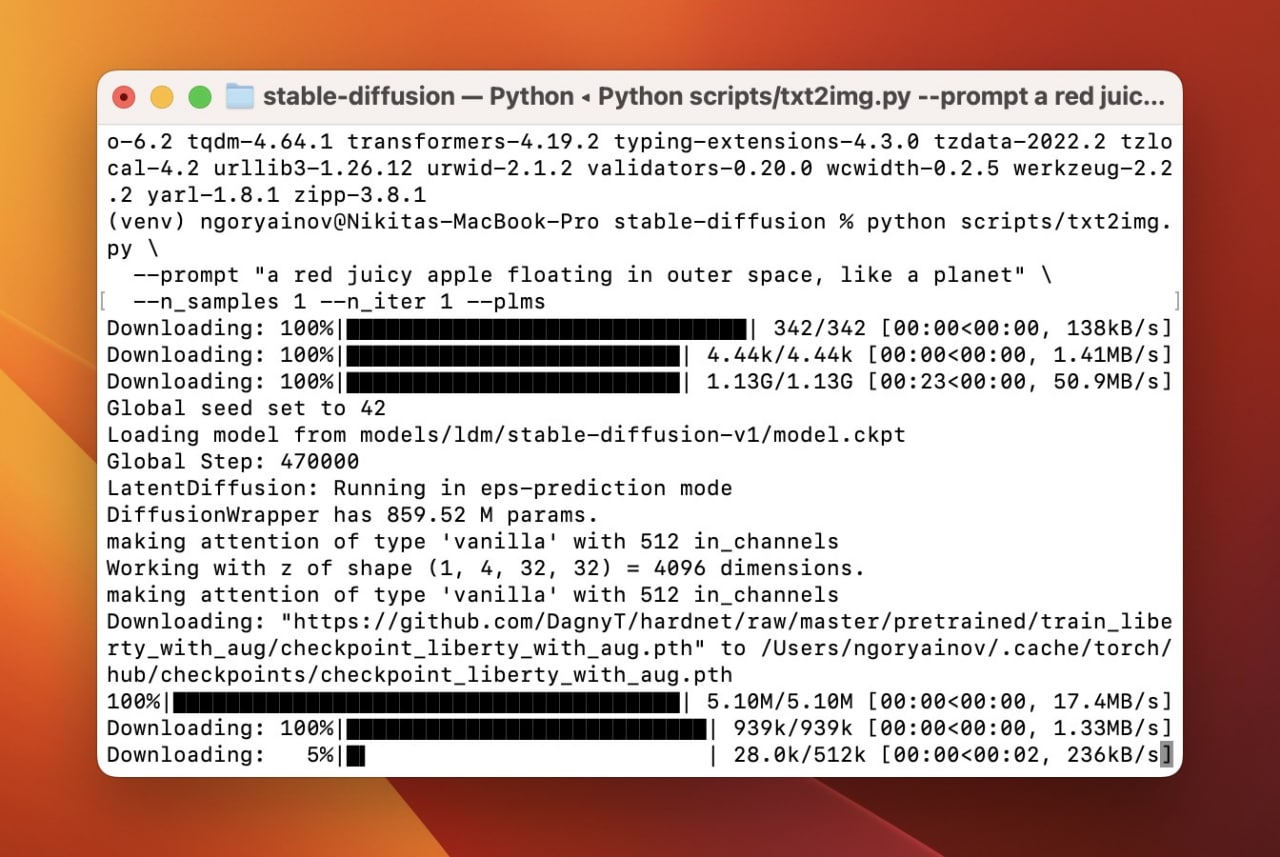

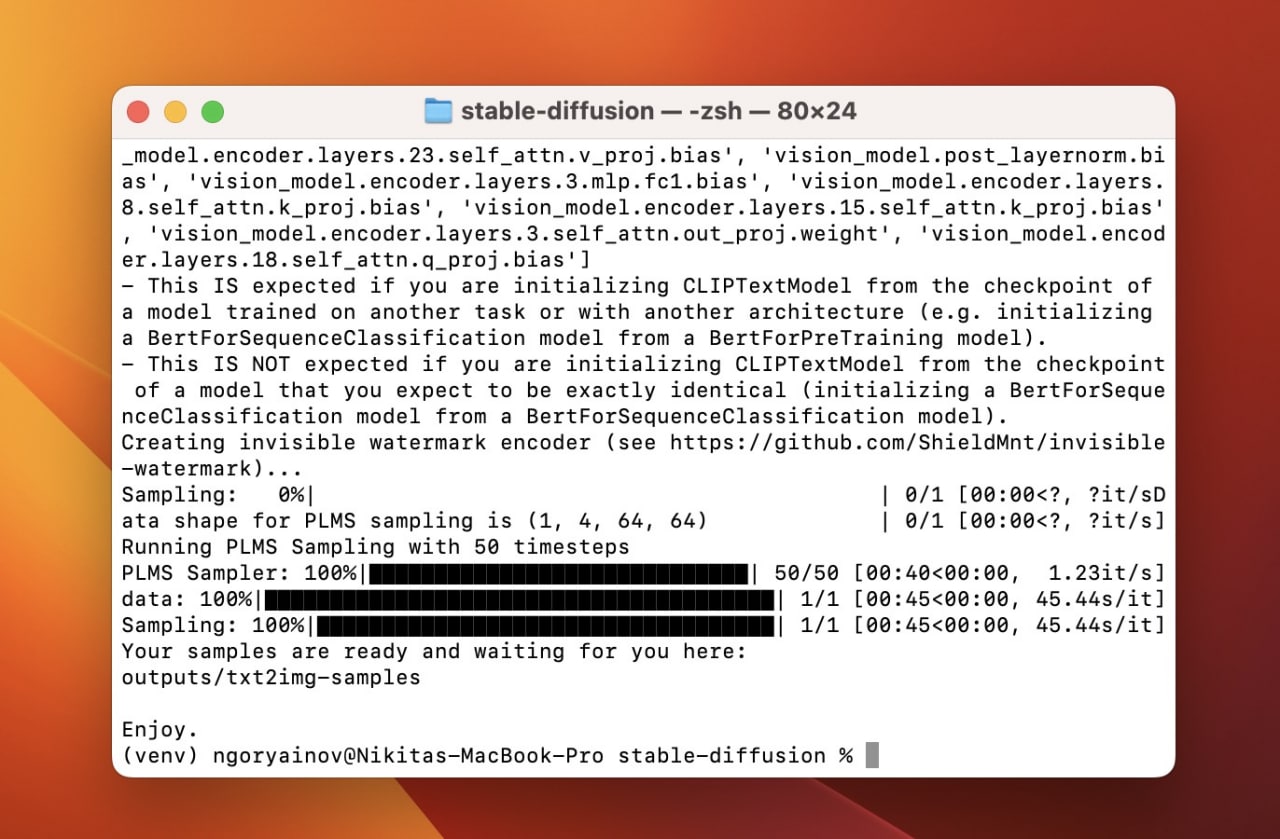

Stable Diffusion generated an image from my text prompt and saved it to the folder.

To the instructions, which should be read very carefully, I'll add only three things of my own. First, right after installing Python, and not later, enter the command:

```shell
brew install cmake protobuf rust torch
```

Second, before downloading the neural network itself (the step with the git clone command in the linked instructions), go in Finder to /usr/local/opt/ and delete the python3 folder. Leave only python3.10.

Finally, if you run into problems during installation, especially at the "pip install -r requirements.txt" step, go to the project's Discord and look for a solution there. You can also describe the error in the comments to this article, and I'll try to help.

The process looks complicated at first glance, but the main difficulty lies only in understanding English and fixing the errors that come up.

Once you have completed all the instructions to the end, you will be able to work with the Stable Diffusion neural network.

How to use Stable Diffusion

1. Open Terminal (Terminal.app) in macOS.

2. Enter and run (Enter key) the following commands, one at a time:

```shell
cd stable-diffusion
source venv/bin/activate
```

3. Enter the command with your text prompt to generate an image (paste it in full):

```shell
python scripts/txt2img.py \
  --prompt "REPLACE THIS TEXT WITH YOUR TEXT PROMPT, KEEPING THE QUOTES" \
  --n_samples 1 --n_iter 1 --plms
```

4. After 30 to 90 seconds of the neural network working, pick up the results from the folder stable-diffusion/outputs/txt2img-samples/
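Steps 3 and 4 can also be wrapped in a small Python script, so you don't have to retype the long command every time. This is a hypothetical helper of my own (run_txt2img.py is just an illustrative file name), not part of Stable Diffusion. It assumes you start it from the stable-diffusion folder with the venv already activated. It passes exactly the flags from step 3 and then prints the newest PNG files in outputs/txt2img-samples, including its subfolders.

```python
"""Hypothetical wrapper around steps 3 and 4 (not part of Stable Diffusion).

Assumptions: run from inside the stable-diffusion folder with the venv active;
the flags are the ones from the article's command; results land in
outputs/txt2img-samples (possibly in subfolders).
"""
import subprocess
import sys
from pathlib import Path

OUTPUT_DIR = Path("outputs/txt2img-samples")
DEFAULT_PROMPT = "red juicy apple floating in outer space like a planet"


def in_virtualenv() -> bool:
    """True when this Python runs inside a venv (e.g. after `source venv/bin/activate`)."""
    return sys.prefix != sys.base_prefix


def build_command(prompt: str, n_samples: int = 1, n_iter: int = 1) -> list[str]:
    """Assemble the same txt2img.py call as step 3, as an argument list."""
    return [
        sys.executable, "scripts/txt2img.py",
        "--prompt", prompt,  # passed as a single argument, so no shell quoting is needed
        "--n_samples", str(n_samples),
        "--n_iter", str(n_iter),
        "--plms",
    ]


def newest_images(folder: Path, count: int = 5) -> list[Path]:
    """Return up to `count` PNG files under `folder`, newest first."""
    if not folder.is_dir():
        return []
    files = sorted(folder.rglob("*.png"), key=lambda p: p.stat().st_mtime, reverse=True)
    return files[:count]


if __name__ == "__main__":
    if not in_virtualenv():
        sys.exit("Activate the venv first: source venv/bin/activate")
    prompt = " ".join(sys.argv[1:]) or DEFAULT_PROMPT
    subprocess.run(build_command(prompt), check=True)  # raises if txt2img.py fails
    for path in newest_images(OUTPUT_DIR):
        print(path)
```

Usage, under the same assumptions: python run_txt2img.py realistic iphone made of cookies. Building the command as a list rather than a single string means the prompt can contain quotes or spaces without breaking anything.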

Too difficult? Then it's better to either wait for a version with a graphical interface or try this neural network online, in someone else's cloud.

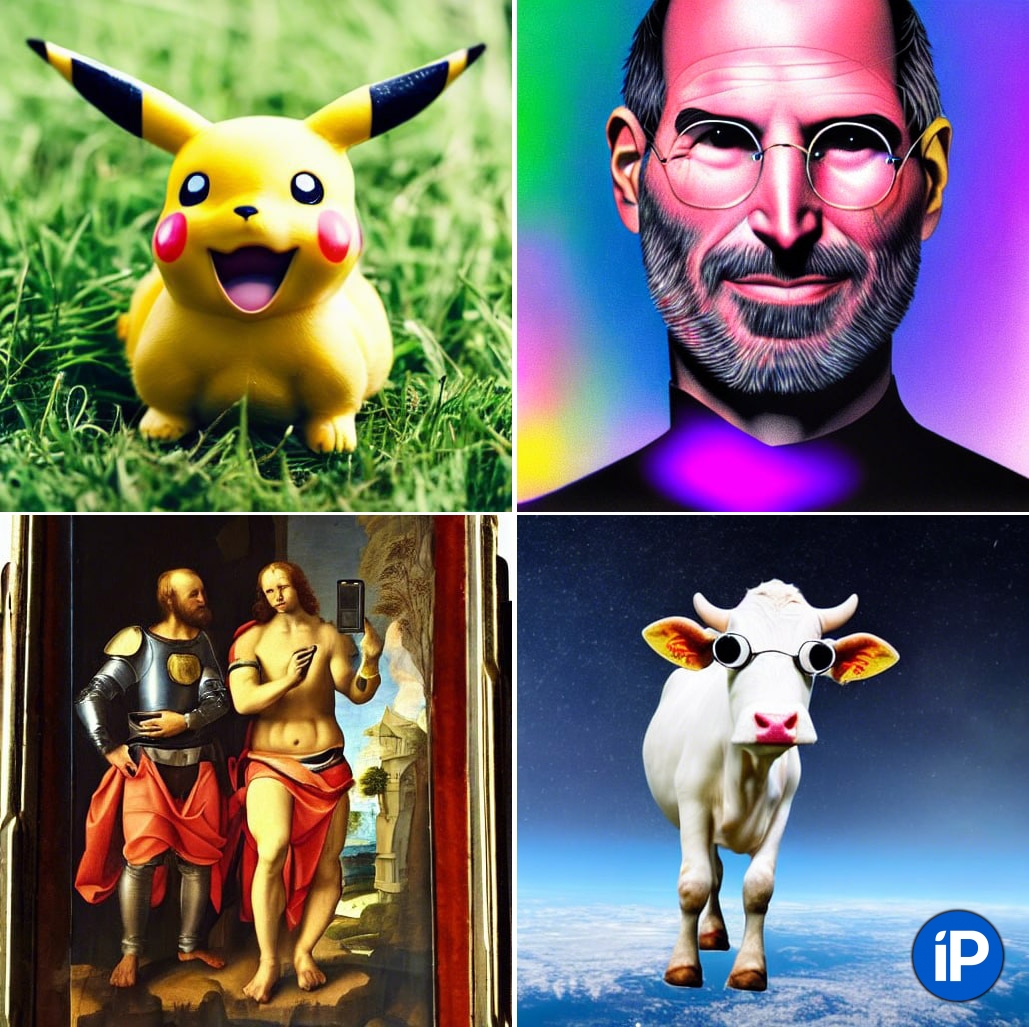

Examples of images I created with Stable Diffusion from text prompts

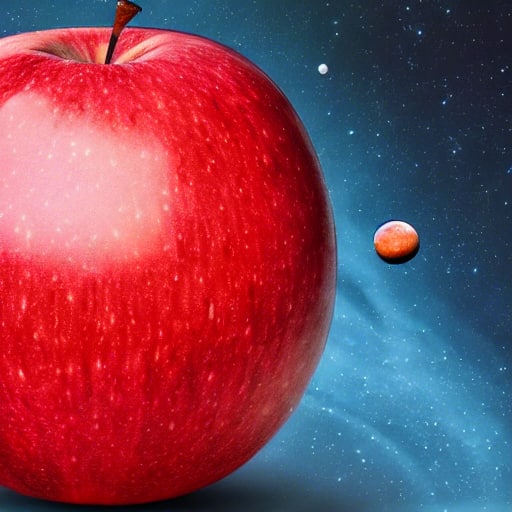

red juicy apple floating in outer space like a planet

I'll say right away: the results below are pretty bland. After five hours of wrestling with the neural network, my imagination wasn't working very well.

Writing prompts for image generation is considered a professional skill. If you want to get something specific, you have to work hard and try lots of descriptors: words that steer the neural network's imagination in the right direction.
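To experiment with descriptors systematically, it helps to keep the subject and the descriptors separate and join them only when you run a query. The helper below is a hypothetical sketch, not something Stable Diffusion requires. It joins the words with spaces, the way the prompts in this article are written, and drops empty or repeated descriptors.

```python
"""Hypothetical helper: combine a subject with style descriptors into one prompt."""


def build_prompt(subject: str, descriptors: list[str]) -> str:
    """Join the subject and the non-empty, case-insensitively unique descriptors with spaces."""
    seen = set()
    parts = [subject.strip()]
    for d in descriptors:
        d = d.strip()
        if d and d.lower() not in seen:
            seen.add(d.lower())
            parts.append(d)
    return " ".join(parts)


if __name__ == "__main__":
    print(build_prompt("cat sitting on sofa", ["memphis style", "colorful", "cheeky", "kitsch"]))
    # → cat sitting on sofa memphis style colorful cheeky kitsch
```

Keeping descriptors in a list makes it easy to add or remove one at a time and compare the resulting images.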

a bipedal robot dog firing an AK-47 at a training ground (reference)

realistic iphone made of cookies

realistic pikachu smiling and standing in the grass in the forest with bokeh effect

cat sitting on sofa memphis style colorful cheeky kitsch

renaissance painting of two men in medieval armor protecting an iPhone on a pedestal in the center of a massive cathedral hall

a small white cow in sunglasses soaring in space under the stars

Three "portraits" of Steve Jobs from slightly different prompts

Stable Diffusion is an incredibly powerful, flexible neural network whose open source code lets it evolve quickly and lets anyone fine-tune it for their own needs. I think that over time it, or its successors, will overshadow almost all existing neural networks.

The current version is not perfect. DALL-E 2 and Midjourney produce better-looking results for a number of prompts. But Stable Diffusion can already do more than any other comparable neural network with open source code. And this is just the beginning…

P.S. If instead of your picture you get a picture of Rick Astley (the famous "rickroll"), you entered a prompt that the network considers NSFW. The neural network rejects such prompts. The project's Discord has a tip for disabling this censorship, but then you act at your own risk, and I take no responsibility.

Source: iPhones.ru