“Although deep neural networks are promising, they are far from ideal computational models of human vision,” said the author of a new scientific study.

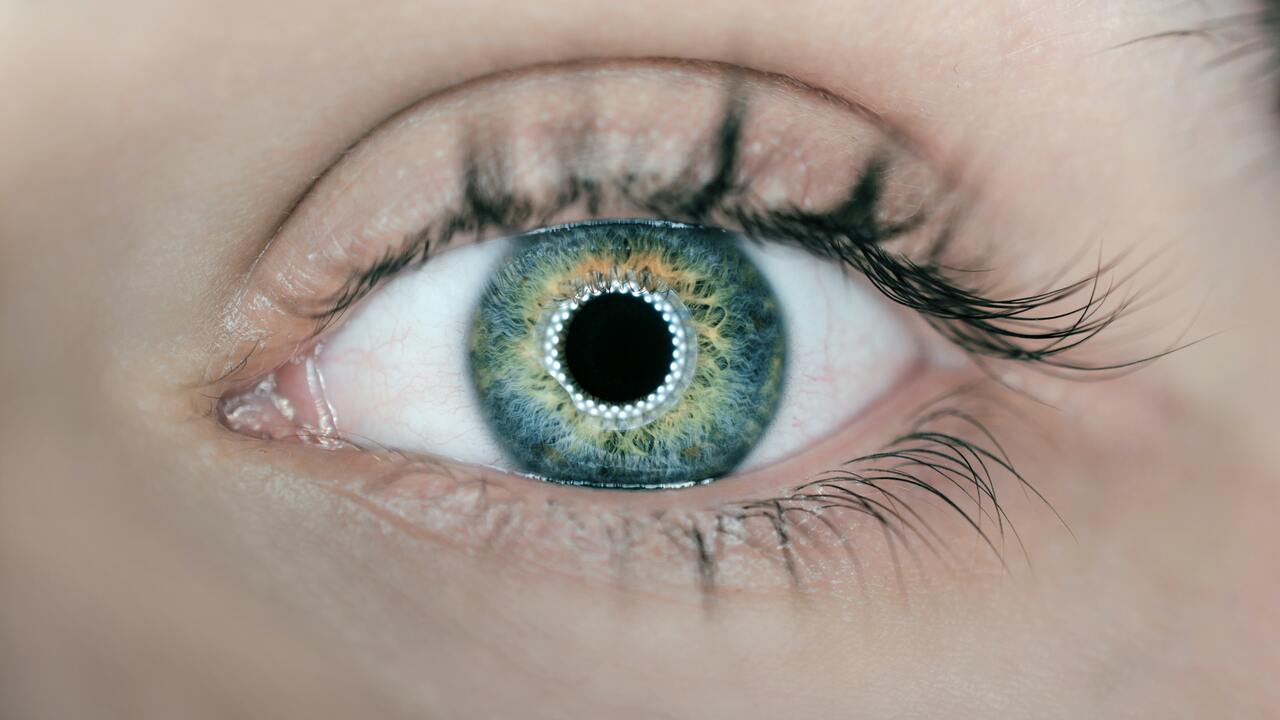

The team used magnetoencephalography (MEG), a non-invasive technique that measures the magnetic fields produced by the brain’s electrical currents. Using the MEG data, the scientists found that easily nameable object parts, such as “eye”, “wheel” and “face”, explained aspects of human neural dynamics beyond what deep neural networks could account for.

“These results suggest that deep neural networks and humans may rely in part on different object properties for visual recognition,” said the author.

The study shows that deep neural networks cannot fully explain the neural responses measured in human observers.

Source: Ferra
