Sometimes it can be helpful to download a complete website, and the HTTrack tool is ideal for this. But think before you start, because it generates a lot of data traffic for the provider of the site you are downloading.

Downloading a website has the advantage that you always have it at hand locally. In our part of the world that hardly matters anymore: gone are the days of dial-up modems and slow, unreliable connections. But on vacation or on a business trip to remote places, a reliable internet connection is far less of a given. That can be a reason to download, before you leave, a particular site you absolutely need as reference material for work or study.

A much more interesting scenario is downloading orphaned, historically interesting websites with unique content. More and more of these are disappearing for good, for example because the owner has died or a hosting provider clears out old 'junk'.

History is slowly disappearing. If your hobby relies on sites that you have noticed haven't changed in years, the chance that they will suddenly disappear increases. Such a scenario justifies downloading.

Ideal in a pinch

As a rule, don't download up-to-date, well-maintained websites on a whim. It costs the provider a lot of data traffic, and the downloaded material is only a snapshot that quickly becomes outdated. Focus on the truly special sites, or on those rare occasions when you need such a snapshot somewhere without internet access.

For downloading a site, the free and long-established tool HTTrack comes in handy. It is available for Linux and Windows, and the Linux variant is particularly interesting. Downloading a large site can take days, sometimes more than a week, because websites very often limit the download speed.
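
A quick back-of-the-envelope calculation shows why a mirror can run for days. The sketch below assumes a hypothetical 20 GB site served at a throttled 50 KB/s; both figures are illustrative assumptions, not measurements, and a real mirror also loses time to per-request overhead.

```python
def estimate_days(site_size_gb: float, rate_kb_per_s: float) -> float:
    """Rough download time in days, ignoring request overhead and pauses."""
    size_kb = site_size_gb * 1024 * 1024   # GB -> KB (binary units)
    seconds = size_kb / rate_kb_per_s      # total transfer time in seconds
    return seconds / 86_400                # 86,400 seconds per day

# Hypothetical example: a 20 GB site throttled to 50 KB/s.
print(f"{estimate_days(20, 50):.1f} days")  # 4.9 days
```

Halve the rate and the estimate doubles, which is why a machine that stays on around the clock is the natural home for HTTrack.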

This makes the Windows variant somewhat impractical, because you have to leave a computer on for days and nights at a time. Not only is that a waste of energy, but the chance that Windows restarts in the meantime for some update is also quite high.

You'd be better off installing a virtual Linux system on your NAS (if it supports this) and installing HTTrack on it. A NAS is usually on 24/7 anyway, so the job costs you no extra energy. Alternatively, consider a Raspberry Pi with a somewhat larger SD card.

For example, Debian

We'll start with the Linux variant (but if you're using Windows, you can follow along!) on a Debian machine running as a VM on a Synology NAS. To install HTTrack, launch the Software application: click Activities at the top left and then the shopping-bag icon in the dock that appears. In the window that opens, click the magnifying glass at the upper left and enter the search term webhttrack in the search field.

Click WebHTTrack Website Copier and install the tool with the Install button on the page that opens. You can then close the software store again.

You can then find the Linux version in your application overview, which on Debian you open with the button showing a grid of dots at the bottom of the dock. When you start it, your browser launches. That is exactly the main difference between the Windows and Linux versions: the Windows version is a traditional .exe program, whereas the Linux version runs in your browser via a local web server.

Functionally, however, they are the same; the only downside is that the Windows version looks somewhat dated. In both cases it works flawlessly, and of course that's what counts. Press the Next button at the bottom right of the screen to get started.

Getting to work

As an example, let's copy https://www.landley.net. Don't actually mirror this site yourself; it is only an example. It is, in any case, a site with a lot of historically interesting information, especially under Computer History.

To get started, enter a description of your project under New project name (the Dutch language module is not completely error-free). Logically, this could be the name of the site, possibly with the relevant keyword. You can basically leave the other settings as they are. Click Next.

Paste the URL of the website you want to mirror locally into the URL box. Then click Define settings. There are many options; below are recommendations for the settings that give the best results.

Optimal settings

Leave the settings under Links, Structure, MIME types, Browser ID, Filters, Protocol, Directory, Cache and proxy at their default values. Only change them if you have very specific requirements, which will rarely if ever be necessary. Next, go to Expert. Experience shows that under Global travel mode it's best to keep the default choice, Stay on the same address. If you don't get the entire site (or the part you want), this is where you can experiment a bit, for example with Stay on the same domain.
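
To make the travel-mode choice concrete: Stay on the same address roughly means a link is followed only if it points to the same host as the start URL, while Stay on the same domain also accepts other hosts under that domain. The sketch below illustrates the distinction with Python's urllib.parse. It is a simplified model, not HTTrack's own code, and the example URLs (apart from the landley.net host) are hypothetical.

```python
from urllib.parse import urlsplit

def same_address(start_url: str, link: str) -> bool:
    """True if link is on exactly the same host as start_url."""
    return urlsplit(start_url).hostname == urlsplit(link).hostname

def same_domain(start_url: str, link: str) -> bool:
    """Looser check: compare only the last two labels of the host name.

    Deliberately naive: it would treat 'a.example.co.uk' and
    'b.other.co.uk' as the same domain.
    """
    def base(url: str) -> str:
        host = urlsplit(url).hostname or ""
        return ".".join(host.split(".")[-2:])
    return base(start_url) == base(link)

start = "https://www.landley.net/"
for link in ["https://www.landley.net/some/page.html",  # hypothetical path
             "https://landley.net/other.html",          # hypothetical path
             "https://example.org/"]:
    print(link, same_address(start, link), same_domain(start, link))
```

A real crawler would use a public-suffix list instead of the last-two-labels shortcut; the point here is only that the default mode is the strictest of the two.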

Pay close attention

At the top, click Flow control; here too it's basically best to leave the settings as they are. Optionally, you can raise the number of connections (N# connections) a little. That may make mirroring slightly faster, but it puts more strain on the server that hosts the site.

Let's keep it civilized and not enter something like '100' here. Then click Limits and set Maximum external depth to 0. If you don't, you risk downloading the entire internet. Not only will that take a long time, it will also, to put it mildly, take up a lot of local storage.

You can set the Maximum transfer rate at your discretion, for example to half of your available download bandwidth. Take a look at the other settings too; you may find something useful there.
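
Connection speeds are usually quoted in megabits per second, whereas a transfer-rate limit is typically entered in bytes per second (HTTrack's command-line -A option, for instance, takes bytes per second). The small helper below, a sketch that assumes the field expects bytes per second, converts "half my bandwidth" into that unit.

```python
def half_bandwidth_bytes_per_s(download_mbit_per_s: float) -> int:
    """Half of a connection's download bandwidth, in bytes per second."""
    bits_per_s = download_mbit_per_s * 1_000_000  # network megabits are decimal
    bytes_per_s = bits_per_s / 8                  # 8 bits per byte
    return int(bytes_per_s / 2)                   # keep half for everything else

print(half_bandwidth_bytes_per_s(50))  # 3125000
```

On a 50 Mbit/s line that works out to about 3 MB/s, though many sites will throttle you well below that anyway.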

On very large and complex sites, it's not a bad idea to limit Maximum mirroring depth somewhat, for example to five levels. You may not get the entire site this way, but you will get the most important parts. It saves download time and especially storage space. Incidentally, an empty field means 'unlimited'.
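
How the two depth limits interact can be illustrated with a breadth-first crawl over a small in-memory link graph. The graph, the host names and the mirror function are all hypothetical; depth is counted here as clicks away from the start page, and HTTrack may count levels differently, so treat the numbers as illustrative.

```python
from collections import deque
from urllib.parse import urlsplit

# Hypothetical link graph standing in for a real site: page -> links found on it.
LINKS = {
    "http://site.test/": ["http://site.test/a", "http://other.test/"],
    "http://site.test/a": ["http://site.test/a/b"],
    "http://site.test/a/b": ["http://site.test/a/b/c"],
    "http://site.test/a/b/c": [],
    "http://other.test/": ["http://other.test/x"],
    "http://other.test/x": [],
}

def mirror(start, max_depth=None, max_external_depth=0):
    """Breadth-first 'mirror' of LINKS.

    max_depth: clicks away from the start page to follow (None = unlimited).
    max_external_depth: pages outside the start host allowed on a path
    (0 = never leave the start host).
    """
    start_host = urlsplit(start).hostname
    seen = {start}
    fetched = []
    queue = deque([(start, 0, 0)])  # (url, clicks from start, external pages on path)
    while queue:
        url, depth, external = queue.popleft()
        fetched.append(url)
        if max_depth is not None and depth >= max_depth:
            continue  # depth limit reached: keep the page, skip its links
        for link in LINKS.get(url, []):
            is_external = urlsplit(link).hostname != start_host
            hops = external + 1 if is_external else external
            if link in seen or hops > max_external_depth:
                continue  # already queued, or too far outside the start host
            seen.add(link)
            queue.append((link, depth + 1, hops))
    return fetched

print(mirror("http://site.test/"))               # whole site, no external pages
print(mirror("http://site.test/", max_depth=2))  # stops two clicks deep
```

With an external depth of 0 the crawl never touches other.test, and with a depth limit of 2 the deepest page is simply left out, which is the trade-off described above.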

Robot

Many websites these days include a text file, robots.txt, that tells robots which parts of the site they may not copy. This is meant to stop all kinds of automated tools and crawlers from downloading entire sites and eating up all the bandwidth. By default, HTTrack also follows these rules. The result is predictable: little or nothing is downloaded from the site you want to copy.
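
Python's standard library includes a robots.txt parser that shows how a well-behaved crawler reads these rules. The two robots.txt files below are hypothetical examples (not taken from any real site), and the allowed helper is a name introduced here for illustration.

```python
from urllib.robotparser import RobotFileParser

def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Would a crawler that obeys robots_txt be allowed to fetch url?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

# Hypothetical robots.txt that shuts out every robot from the whole site.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""

# Hypothetical robots.txt that only protects one directory.
BLOCK_PART = """\
User-agent: *
Disallow: /private/
"""

print(allowed(BLOCK_ALL, "HTTrack", "https://example.test/"))             # False
print(allowed(BLOCK_PART, "HTTrack", "https://example.test/index.html"))  # True
print(allowed(BLOCK_PART, "HTTrack", "https://example.test/private/a"))   # False
```

A file like BLOCK_ALL is what produces the nearly empty mirror described above.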

In other words, we now have to be rather rude and ignore that rule (or rather, request). Click Spider and, under Follow robots.txt rules, select no robots.txt rules. In principle your settings are now complete; click OK to apply all of the above changes at once.
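
If you'd rather skip the browser interface, which is handy on a headless NAS VM or Raspberry Pi, the same settings map onto options of the httrack command-line program: -O (output path), -rN (mirror depth), -%eN (external links depth), -cN (connections), -AN (maximum transfer rate in bytes per second) and -s0 (never follow robots.txt), as listed by httrack --help. The sketch below only assembles the argument list; the httrack_args function, the URL and the output path are hypothetical, so check the options against the help output of your installed version.

```python
import shlex

def httrack_args(url, output_dir, max_depth=None, external_depth=0,
                 connections=None, max_rate_bytes=None, follow_robots=True):
    """Build an httrack command line mirroring the settings discussed above."""
    args = ["httrack", url, "-O", output_dir]
    if max_depth is not None:
        args.append(f"-r{max_depth}")           # maximum mirroring depth
    args.append(f"-%e{external_depth}")         # maximum external depth
    if connections is not None:
        args.append(f"-c{connections}")         # number of simultaneous connections
    if max_rate_bytes is not None:
        args.append(f"-A{max_rate_bytes}")      # maximum transfer rate, bytes/s
    if not follow_robots:
        args.append("-s0")                      # never follow robots.txt rules
    return args

# Hypothetical project: five levels deep, about 3 MB/s, robots.txt ignored.
cmd = httrack_args("https://example.test/", "/home/user/websites/example",
                   max_depth=5, max_rate_bytes=3_125_000, follow_robots=False)
print(shlex.join(cmd))
```

Pass the resulting list to subprocess.run to start the mirror, ideally inside a terminal multiplexer such as screen or tmux so it survives a closed SSH session.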

Handy index page

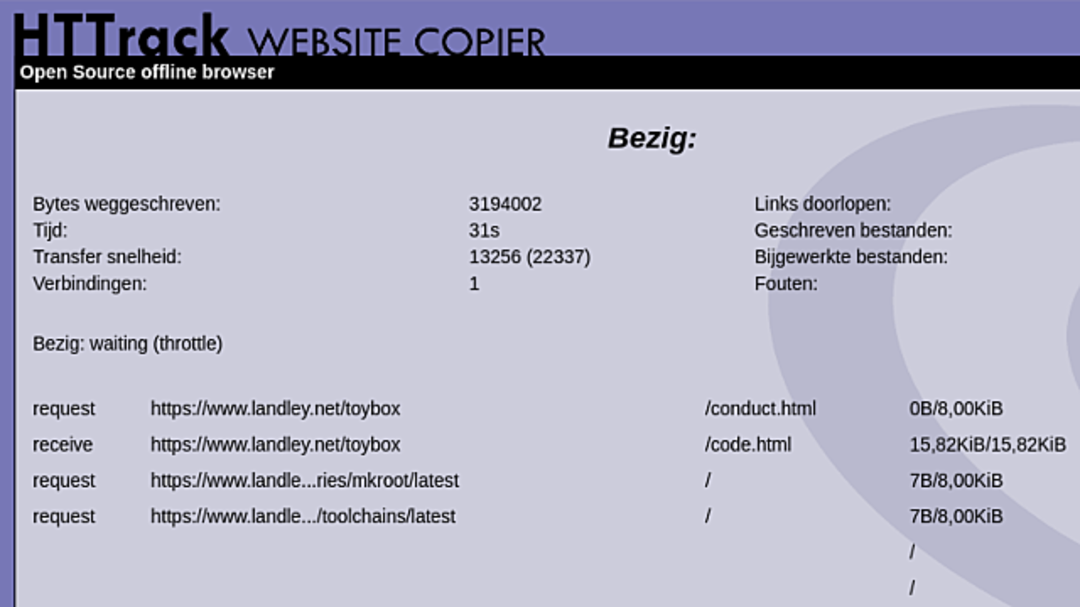

Click Next and then Start, after which the download of the site (local mirroring) begins. As mentioned, this can take quite a while on larger sites, so there is little point in watching it. You'll automatically see a notification when mirroring is complete, and you can then close the browser window.

You'll find the site in the folder selected in the first step; on Debian this is by default the home folder (personal folder) of your Debian account. In it is a websites folder containing the downloaded sites.
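
To check which projects have finished without opening a file manager, a few lines of Python can list the project folders. This sketch assumes the default location ~/websites and that each project folder contains its own index.html; both are assumptions to adjust if you chose a different base path.

```python
from pathlib import Path

def list_mirrors(base: Path = Path.home() / "websites") -> list[str]:
    """Names of project folders under base that contain an index.html."""
    if not base.is_dir():
        return []  # no mirrors yet, or a different base path was chosen
    return sorted(p.name for p in base.iterdir()
                  if p.is_dir() and (p / "index.html").is_file())

for name in list_mirrors():
    print(name)
```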

Conveniently, HTTrack also creates an index page. Double-click the index.html file in the websites folder and you'll see links to all the downloaded sites in it.

Source: Computer Totaal